Over the past two years, fraud has changed shape. Instead of slow, isolated attempts, PSPs are now seeing bursts of high-speed activity: dozens of micro-transactions, rapid authentication retries, and card tests happening within seconds. This shift has made velocity one of the clearest signs of malicious intent. A single failed attempt tells you very little, but a sequence of twenty failed attempts in under a minute tells an entirely different story.

Velocity-based fraud is now common across card rails, open banking, A2A payments and digital wallets. Attackers use automated tools and bot frameworks to probe merchant systems at speeds that human reviewers simply cannot interpret as they unfold. As noted by Stripe, card-testing attacks increasingly involve tightly timed batches of low-value authorisation attempts designed to locate active cards before conducting real fraud [Stripe Card Testing Guide].

For merchants, especially high-risk ones, this shift requires new models for early detection. Traditional fraud filters that look only at individual transactions cannot understand the pattern behind an attack. That’s where fraud velocity and transaction sequence modelling come in. They allow PSPs to detect intent long before chargebacks, issuer complaints, or monitoring thresholds become a problem.

- Why Fraud Velocity Exploded Between 2024–2026

- Understanding the Mechanics: What Fraud Velocity Actually Measures

- Transaction Sequence Modelling: From Events to Patterns

- How PSPs Detect Card Testing, Bot Sweeps & Rapid-Fire Abuse

- High-Risk Verticals: Why They Are the First to Be Hit

- Cross-Rail Velocity: Cards, A2A, Wallets & Open Banking Attacks

- Behind the Scenes: How PSPs’ Graph, AI & Behavioural Engines Interpret Velocity

- Predictive Compliance 2026: The New Expectations

- Conclusion

- FAQs

Why Fraud Velocity Exploded Between 2024–2026

Fraud became faster, more coordinated and more automated between 2024 and 2026 than in any previous period. Instead of attacking one payment type at a time, fraud rings now test cards, wallets, and open-banking payments simultaneously, switching rails instantly depending on where the friction appears. PSPs began reporting that fraud attempts were no longer hours apart; they were seconds apart, driven by tools designed to mimic human browsing behaviour and stagger attempts intelligently to avoid detection.

According to Feedzai, modern fraud engines increasingly observe “event sequences” rather than individual transactions, because automation enables attackers to operate with machine-like precision and speed across devices, networks and identities [Feedzai Sequence Modelling]. This shift in attack behaviour explains why velocity analytics became one of the most important detection layers.

Automation Became Accessible to Every Fraudster

By 2026, fraud-as-a-service platforms had made it easy to run high-speed attacks. What used to require technical skills now requires only a subscription to a bot platform. These systems can:

- Send dozens of card attempts per minute

- Rotate IPs, devices and fingerprints

- Escalate amounts based on partial successes

- Probe multiple rails in parallel

This is why PSPs no longer look at individual attempts; they look at the tempo behind them.

Multi-Rail Payments Created New Attack Surfaces

The shift to multi-rail checkout (cards, A2A, wallets, tokens) created a wider landscape for fraud testing. Attackers now exploit whichever rail appears weakest at that moment. As Adyen notes, abuse patterns often emerge only when you observe multiple channels together, not in isolation [Adyen Abuse Prevention].

A normal customer may switch rails due to convenience.

A fraudster switches rails due to friction analytics, testing each until they find the easiest path.

Instant Payment Systems Increased Attack Speed

The growth of real-time payments such as PIX, UPI and SEPA Instant allowed fraudsters to compress entire attack cycles into minutes. Instead of a slow sequence of retries, PSPs now see:

- 20-50 attempts within one minute

- Instant retry loops after errors

- Coordinated BIN cycling

- Rapid device switching to mask automation

This velocity is impossible for manual teams to assess in real time.

That’s why PSPs now rely heavily on velocity scoring and temporal sequence models to detect abnormal behaviour patterns.
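As a rough illustration of velocity scoring, the sketch below counts attempts inside a rolling 60-second window and reports when a burst first crosses a threshold. The names `SlidingWindowCounter` and `first_breach`, and the threshold of 20 (the lower end of the range above), are illustrative assumptions rather than any PSP's actual implementation. Timestamps are assumed to be in seconds and to arrive in ascending order.

```python
from collections import deque


class SlidingWindowCounter:
    """Counts events that fall inside the most recent `window_seconds`."""

    def __init__(self, window_seconds: float):
        self.window_seconds = window_seconds
        self._timestamps = deque()

    def add(self, timestamp: float) -> int:
        """Record one event and return the number of events still in the window."""
        self._timestamps.append(timestamp)
        cutoff = timestamp - self.window_seconds
        # Drop events that are older than the window.
        while self._timestamps and self._timestamps[0] <= cutoff:
            self._timestamps.popleft()
        return len(self._timestamps)


def first_breach(timestamps, window_seconds=60.0, threshold=20):
    """Return the timestamp at which the window first holds `threshold` events."""
    counter = SlidingWindowCounter(window_seconds)
    for ts in timestamps:
        if counter.add(ts) >= threshold:
            return ts
    return None


# Hypothetical traffic: one attempt every 2 seconds versus one every 45 seconds.
scripted = [i * 2.0 for i in range(30)]
shopper = [i * 45.0 for i in range(30)]
print(first_breach(scripted), first_breach(shopper))
```

In practice a counter like this would probably be kept per card, device, IP or merchant, with the threshold tuned against legitimate traffic.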

Traditional Rules Couldn’t Keep Up

Rules like “block after three declines” or “flag mismatched IP addresses” fail in modern environments. Fraudsters know these rules. They randomise every fifth attempt, change devices mid-flow, or spread attempts across merchants to confuse issuer patterns.

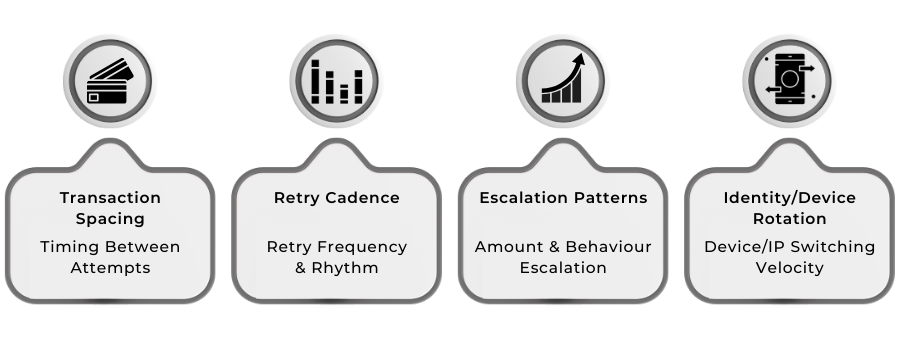

Velocity models, on the other hand, examine:

- Spacing between attempts

- Changing BINs over time

- Retry intervals

- Authentication rhythm

- Behavioural “pacing signatures”

This is how PSPs identify bots and testing scripts, even when the individual transactions appear clean.

Understanding the Mechanics: What Fraud Velocity Actually Measures

Fraud velocity is not just a count of how many transactions fail or succeed. It is a measurement of timing, frequency, and progression: the way transactions unfold over seconds or minutes. PSPs use velocity models to interpret how “normal” or “abnormal” the timing appears when compared with legitimate customer behaviour. A genuine buyer moves at a human rhythm; an automated script moves with engineered precision.

Understanding these mechanics is essential because modern fraud rarely appears suspicious in isolation. A single low-value test charge tells you nothing. Twenty similar attempts spaced at algorithmic intervals tell PSPs almost everything. This is why fraud velocity has become one of the strongest predictors of bot activity and card testing abuse.

Transaction Spacing and Timing Patterns

One of the first metrics PSPs evaluate is how quickly consecutive attempts occur.

For example:

- Normal users retry after moments of hesitation

- Bots retry instantly or in programmed micro-delays

Sift notes that velocity-based attacks often exhibit “time signatures” that are statistically improbable for human behaviour, even when bots attempt to mimic random pauses [Sift Fraud Blog].

The spacing, not the content, reveals the attacker.
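One simple way to put a number on spacing is the coefficient of variation of the gaps between attempts: values near zero mean the retries are almost perfectly even. The sketch below is an assumption-laden illustration (the function names are hypothetical, and a real model would need more than one statistic, since bots that add random jitter will not score near zero).

```python
from statistics import mean, pstdev


def inter_attempt_gaps(timestamps):
    """Return the gaps, in seconds, between consecutive attempts."""
    ordered = sorted(timestamps)
    return [later - earlier for earlier, later in zip(ordered, ordered[1:])]


def gap_variation(timestamps):
    """Coefficient of variation of the gaps (population std dev / mean).

    Returns None when there are fewer than two gaps or the mean gap is zero.
    """
    gaps = inter_attempt_gaps(timestamps)
    if len(gaps) < 2:
        return None
    average = mean(gaps)
    if average == 0:
        return None
    return pstdev(gaps) / average


# Hypothetical sessions: a script retrying every 0.5 s and a hesitant shopper.
scripted = [0.0, 0.5, 1.0, 1.5, 2.0]
shopper = [0, 8, 31, 40, 95]
print(gap_variation(scripted), gap_variation(shopper))
```

A low value on its own is only a hint; it becomes more useful when combined with the other signals in this section.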

Failure Sequences and Retry Cadence

Bots and testing scripts escalate their attempts in predictable ways. PSPs analyse:

- How failures cluster

- The order in which methods are retried

- How quickly the user switches between cards, devices or wallets

This cadence often forms a pattern:

fail → adjust → fail → adjust → succeed → scale.

Feedzai explains that these “temporal failure clusters” are a core signal in their fraud sequence models because they reveal the decision-making logic of automated tools [Feedzai Transaction Modelling].
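The fail, adjust, succeed, scale cadence can be approximated with a very small state check: a run of consecutive failures, then a success, then a burst of follow-up attempts. This is a minimal sketch under assumed thresholds; the function name `fail_then_scale`, the event format and the defaults are hypothetical and are not drawn from Feedzai's models.

```python
def fail_then_scale(events, min_failures=3, scale_window=60.0, min_followups=3):
    """Detect a run of failures, then a success, then rapid follow-up attempts.

    `events` is a time-ordered list of (timestamp_seconds, outcome) pairs,
    where outcome is "fail" or "success".
    """
    failures = 0
    for index, (timestamp, outcome) in enumerate(events):
        if outcome == "fail":
            failures += 1
            continue
        if failures >= min_failures:
            # Count attempts that follow the first qualifying success closely.
            followups = [
                t for t, _ in events[index + 1:] if t - timestamp <= scale_window
            ]
            return len(followups) >= min_followups
        failures = 0  # a success after too few failures resets the run
    return False


# Hypothetical sequences.
testing_run = [(0, "fail"), (2, "fail"), (4, "fail"), (6, "fail"),
               (8, "success"), (10, "success"), (12, "success"), (14, "success")]
shopper = [(0, "fail"), (30, "success")]
print(fail_then_scale(testing_run), fail_then_scale(shopper))
```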

Amount Escalation and Logical Progression

Card testers rarely jump from small to large amounts immediately. They follow controlled progression:

- Micro-charge

- Slightly higher test

- Moderate value

- Final usable transaction

The escalation pattern itself is a velocity signal.
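The progression above can be checked with a few lines of code. The sketch below flags a sequence that starts with a micro-charge and rises at every step; the function name and the cut-off of 2.00 for a "micro-charge" are assumptions chosen for illustration only.

```python
def looks_like_escalation(amounts, micro_charge_max=2.0, min_steps=3):
    """Return True when amounts start small and strictly increase.

    `amounts` is the time-ordered list of attempted amounts for one source.
    """
    if len(amounts) < min_steps:
        return False
    starts_small = amounts[0] <= micro_charge_max
    strictly_rising = all(
        later > earlier for earlier, later in zip(amounts, amounts[1:])
    )
    return starts_small and strictly_rising


# Hypothetical sequences: a tester stepping up versus ordinary purchases.
print(looks_like_escalation([1.00, 5.00, 25.00, 180.00]))
print(looks_like_escalation([20.00, 35.00, 12.00]))
```

A strict rule like this would miss testers who repeat an amount or step down occasionally, so a production model would likely score the trend rather than require it exactly.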

BIN Cycling and Card-Range Progression

Fraud rings test cards in a structured order, often moving through entire BIN ranges.

PSPs watch:

- How fast the BIN changes

- Whether the pattern matches known testing scripts

- Whether the progression follows issuer-specific behaviour

This sequence gives away the automation behind the attack.
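How fast the BIN changes can be expressed as a rate. The sketch below counts BIN switches between consecutive attempts and normalises them per minute. It assumes a six-digit BIN (eight-digit BINs also exist, hence the parameter), and the function names and the made-up card numbers are purely illustrative.

```python
def bin_of(card_number: str, bin_length: int = 6) -> str:
    """Return the leading digits that identify the issuer range."""
    return card_number[:bin_length]


def bin_changes_per_minute(attempts, bin_length: int = 6) -> float:
    """Rate of BIN switches across a time-ordered list of attempts.

    `attempts` is a list of (timestamp_seconds, card_number) pairs.
    """
    if len(attempts) < 2:
        return 0.0
    changes = sum(
        1
        for (_, previous), (_, current) in zip(attempts, attempts[1:])
        if bin_of(previous, bin_length) != bin_of(current, bin_length)
    )
    elapsed = attempts[-1][0] - attempts[0][0]
    if elapsed <= 0:
        # All attempts share one timestamp: treat any change as unbounded.
        return float("inf") if changes else 0.0
    return changes * 60.0 / elapsed


# Hypothetical attempts using made-up card numbers.
cycling = [(0, "4111110000000001"), (3, "4222220000000001"),
           (6, "4333330000000001"), (9, "4444440000000001")]
retry = [(0, "4111111111111111"), (40, "4111111111111111")]
print(bin_changes_per_minute(cycling), bin_changes_per_minute(retry))
```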

Device, IP and Geolocation Rotation

Velocity isn’t only about payment attempts; it also includes the speed of environmental changes:

- Device fingerprints that switch every few seconds

- Rapid IP rotation across hosting providers

- Geo-hops between distant countries during a short session

As Arkose Labs reports, bots often use “orchestrated identity rotation,” which looks random individually but is patterned when viewed over time.

These switches are designed to bypass static rules but expose themselves through velocity analysis.
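Geo-hops lend themselves to a simple physical check: the speed a user would need to travel between two consecutive locations. The sketch below uses the haversine great-circle distance; the function names and the event format are assumptions, and IP geolocation is often imprecise, so a real system would treat the result as one signal among several.

```python
import math


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometres."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def implied_speed_kmh(event_a, event_b):
    """Speed needed to move between two (timestamp_seconds, lat, lon) events."""
    (time_a, lat_a, lon_a), (time_b, lat_b, lon_b) = event_a, event_b
    distance = haversine_km(lat_a, lon_a, lat_b, lon_b)
    elapsed_hours = abs(time_b - time_a) / 3600.0
    if elapsed_hours == 0:
        return float("inf") if distance > 0 else 0.0
    return distance / elapsed_hours


# Hypothetical session: London, then New York two minutes later.
london = (0, 51.5074, -0.1278)
new_york = (120, 40.7128, -74.0060)
print(round(implied_speed_kmh(london, new_york)))
```

Any speed far above what air travel allows suggests the location changed because the network path changed, not because the person moved.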

Authentication and Tokenisation Rhythm

In card, wallet and open banking flows, the timing of authentication attempts tells PSPs more than the attempts themselves. Examples include:

- Repeated 3DS challenges executed too quickly

- Token refresh intervals that form repeating patterns

Even failed attempts have value; the rhythm behind them becomes the signal.

Why This Matters

Fraud velocity is powerful because it identifies intent early. A transaction can look perfect, but the sequence of events that led to it may expose an automated attack underway. PSPs rely on these mechanics to detect fraud before it impacts approval rates, triggers scheme monitoring, or leads to costly chargebacks.

Transaction Sequence Modelling: From Events to Patterns

Traditional fraud systems examined transactions individually, focusing on mismatches or risk attributes within a single attempt. But in 2026, fraud rarely reveals itself through one event. Instead, it emerges through the pattern formed by a group of events: their timing, progression, and behavioural consistency. Transaction sequence modelling gives PSPs a way to interpret these patterns, uncovering automated attacks that would look harmless if reviewed one attempt at a time.

Regulators have increasingly emphasised this shift. The U.S. Cybersecurity and Infrastructure Security Agency (CISA) notes that automated attacks, including credential-stuffing and bot-driven payment abuse, often appear benign until analysed “across a sequence of closely timed actions” rather than individually. This mirrors the approach PSPs now apply to transaction flows.

How Sequences Reveal the “Story” Behind Attempts

Every fraud attack tells a story when viewed over time. Transactions create a timeline: small-value tests, retries, device switches, authentication failures, and escalation in amounts. PSPs use machine learning to understand:

- What happened first

- What changed between attempts

- How quickly behaviour adapts

- Whether the sequence aligns with known attack patterns

A single event may look normal. The sequence exposes the intent.

Recognising Escalation Patterns

Fraud rings almost always escalate. They test small amounts, increase values, adjust methods, and then expand volume once they find a weakness.

Common progression sequences include:

- low → medium → high amounts

- test BIN → fallback BIN → target BIN

- card → wallet → open banking → retry on card

- success → scale → distribute across merchants

The Federal Trade Commission (FTC) has repeatedly warned that these escalation sequences are core characteristics of card-testing networks and synthetic identity attacks, particularly when tied to automated retry patterns.

Detecting Linked Identities Through Time

Sequence modelling allows PSPs to detect when different identities are actually part of a single coordinated attack. Signals include:

- Repeated behaviour from different devices

- Identical timing patterns across separate accounts

These behaviours are subtle individually, but clear when plotted across a timeline.

NIST highlights that behavioural anomalies often become evident only when observing transactions “in relation to one another, rather than as isolated events”, a core principle of sequence analysis.
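To illustrate how identical timing patterns can link separate accounts, the sketch below reduces each account's activity to a "timing signature" (its rounded gaps between events) and groups accounts whose signatures match exactly. The function names, the rounding to one decimal place and the exact-match rule are simplifying assumptions; a production system would more likely use a tolerance or a similarity score.

```python
from collections import defaultdict


def timing_signature(timestamps, decimals=1):
    """Gaps between consecutive events, rounded so near-identical rhythms match."""
    ordered = sorted(timestamps)
    return tuple(
        round(later - earlier, decimals)
        for earlier, later in zip(ordered, ordered[1:])
    )


def accounts_sharing_rhythm(events_by_account, min_gaps=3):
    """Group accounts whose timing signatures are identical.

    `events_by_account` maps an account id to its list of event timestamps.
    Accounts with fewer than `min_gaps` gaps are ignored as too short to compare.
    """
    groups = defaultdict(list)
    for account, timestamps in events_by_account.items():
        signature = timing_signature(timestamps)
        if len(signature) >= min_gaps:
            groups[signature].append(account)
    return [sorted(accounts) for accounts in groups.values() if len(accounts) > 1]


# Hypothetical accounts: two share the same 1.5 s / 1.5 s / 3.0 s rhythm.
sessions = {
    "acct_a": [100.0, 101.5, 103.0, 106.0],
    "acct_b": [500.0, 501.5, 503.0, 506.0],
    "acct_c": [10.0, 25.0, 31.0, 80.0],
}
print(accounts_sharing_rhythm(sessions))
```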

Understanding Replay and Retry Logic

Fraudsters often use scripts that automatically retry declined transactions, replicate earlier successful payloads, or replay authentication signatures. Sequence models help PSPs analyse:

- Retry frequency

- Retry spacing

- Method switching logic

- Cross-rail transitions

These details enable PSPs to differentiate between normal customer friction and the logic of an automated testing script.

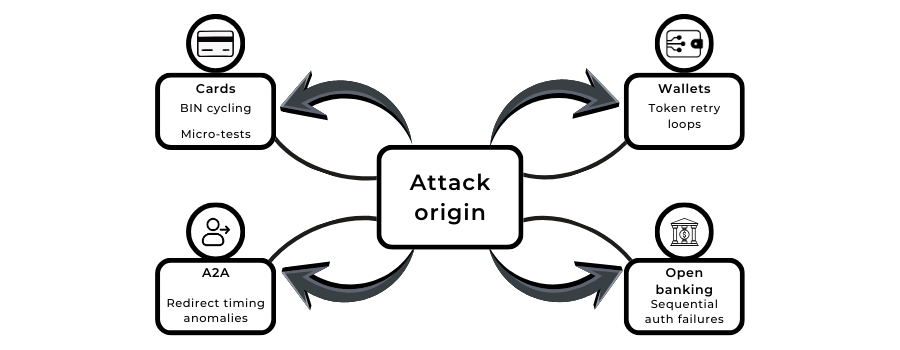

Cross-Rail Coordination Patterns

Modern fraud attacks rarely stick to one rail. PSPs frequently see sequences that move between:

- Cards

- A2A payments

- Wallets

- Open banking redirects

This behaviour becomes clear through timeline analysis. Europol’s cybercrime reporting has highlighted that organised fraud groups increasingly use “multi-vector probing sequences” across payment flows when attempting to test system integrity.
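A timeline view of rail switching can be sketched by counting the transitions between consecutive attempts. The rail labels and the function name below are hypothetical; the example reuses the card, wallet, open banking, card progression described earlier in this section.

```python
from collections import Counter


def rail_transitions(events):
    """Count switches between payment rails in a time-ordered session.

    `events` is a list of (timestamp_seconds, rail) pairs. The result maps
    (from_rail, to_rail) to the number of times that switch occurred.
    """
    transitions = Counter()
    for (_, previous_rail), (_, rail) in zip(events, events[1:]):
        if previous_rail != rail:
            transitions[(previous_rail, rail)] += 1
    return transitions


# Hypothetical session: four rail changes' worth of probing in 21 seconds.
session = [(0, "card"), (4, "card"), (9, "wallet"),
           (15, "open_banking"), (21, "card")]
switches = rail_transitions(session)
duration = session[-1][0] - session[0][0]
print(sum(switches.values()), "switches in", duration, "seconds")
```

Pairing the switch count with the session duration is what separates a shopper who changes method once from a script cycling through every rail.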

Why Sequence Modelling Matters

Sequence modelling allows PSPs to recognise intent, not just anomalies.

Whether the attack is a botnet probing a checkout form, a fraud ring testing BIN ranges, or a coordinated refund abuse run, the order and timing of events reveal the attack much earlier than traditional fraud scoring.

How PSPs Detect Card Testing, Bot Sweeps & Rapid-Fire Abuse

PSPs in 2026 rely on layered detection models that interpret not only the content of a transaction, but also the behaviour surrounding it. Card testing, bot sweeps and rapid-fire abuse all share the same underlying characteristic: they create sequences that unfold too quickly, too consistently and too mechanically to resemble human behaviour. PSPs use timing analysis, behavioural clustering and identity graphing to spot these patterns far earlier than traditional fraud rules ever could.

These detection capabilities have become increasingly important as automated attacks have grown more coordinated. Regulators such as the UK’s Financial Conduct Authority (FCA) emphasise the need for firms to maintain “continuous behavioural monitoring” when evaluating payment risk, noting that early-stage anomalies often precede unauthorised activity.

Detecting Card Testing Through Failure Patterns

Card testing is recognised by a specific rhythm: small-value transactions, escalating amounts, rapid retries and systematic BIN cycling. PSPs look for:

- Multiple low-value tests within short intervals

- Repeated failures with slight data variations

- Amount escalation in predictable steps

- Card brand switching after consistent declines

Individually, these attempts appear harmless.

Collectively, they expose a structured testing script at work.

Identifying Bot Sweeps Through Timing Irregularities

Bots often try to mimic human interaction by mixing delays, rotating devices, or switching browser types. But they struggle to hide their timing precision. PSPs detect bot sweeps by analysing:

- Micro-bursts of attempts spaced milliseconds apart

- Perfectly even intervals between retries

- Synchronised actions across multiple accounts

- Repeated patterns of drop-off at the same stage

These signatures reveal automation regardless of how well the bot disguises itself.

Recognising Rapid-Fire Abuse Across Multiple Rails

Fraud in 2026 is no longer tied to a single payment method. Attackers test multiple rails (cards, wallets, A2A and open banking) within the same time window. PSPs map these behaviours across a timeline to identify:

- Method switching sequences

- Authentication retry loops

- Redirects that repeat too quickly to be user-driven

- Cross-rail probing that escalates after partial successes

This multi-rail view is essential because some attacks only reveal themselves when the behaviour across all rails is analysed together.

Device and Identity Graph Anomalies

Modern PSPs maintain identity graphs that link devices, IPs, behavioural signals and previous outcomes across millions of transactions. When attackers rotate identities too quickly, the system notices. Common red flags include:

- Multiple accounts using the same device within seconds

- Devices appearing from distant regions in rapid sequence

- IPs switching between hosting providers in tight intervals

These patterns indicate orchestration rather than organic user behaviour.
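The linking step of an identity graph can be sketched with a union-find structure: accounts that share a device or IP end up in the same cluster, even when the link is indirect. This is a toy illustration under assumed inputs (the function name, the attribute labels and the documentation-range IP address are all hypothetical), not a description of how any PSP builds its graph.

```python
def linked_clusters(observations):
    """Cluster accounts that share attributes, directly or transitively.

    `observations` is a list of (account_id, attribute) pairs, where an
    attribute is any shared anchor such as "device:d1" or "ip:203.0.113.7".
    """
    parent = {}

    def find(node):
        parent.setdefault(node, node)
        while parent[node] != node:
            parent[node] = parent[parent[node]]  # path halving
            node = parent[node]
        return node

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    for account, attribute in observations:
        union(("account", account), ("attr", attribute))

    clusters = {}
    for node in list(parent):
        if node[0] == "account":
            clusters.setdefault(find(node), set()).add(node[1])
    return sorted(sorted(members) for members in clusters.values())


# Hypothetical observations: a1 and a3 never share anything directly,
# but both are linked through a2.
seen = [("a1", "device:d1"), ("a2", "device:d1"),
        ("a2", "ip:203.0.113.7"), ("a3", "ip:203.0.113.7"),
        ("a4", "device:d9")]
print(linked_clusters(seen))
```

Adding timestamps to each observation would allow the same structure to capture the red flags above, such as many accounts on one device within seconds.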

Authentication Velocity as a Core Signal

Authentication attempts, whether through 3DS, wallet biometrics or open banking redirects, carry timing data that PSPs now rely on more than the authentication results themselves. PSPs watch for:

- Repeated 3DS failures within seconds

- Biometric retries that occur too fast for human response

- Open banking logins that attempt multiple banks in quick succession

These patterns often precede unauthorised access attempts.

Why PSP Detection Matters for Merchants

The faster PSPs can identify these signals, the sooner they can protect approval rates, reduce issuer friction and prevent merchants from entering scheme monitoring. When fraud velocity is caught early, merchants avoid the cascading impact of declines, chargebacks and reputational risk with their acquirers.

High-Risk Verticals: Why They Are the First to Be Hit

High-risk merchants face velocity-based fraud sooner and more aggressively than other sectors, largely because their environments make automated testing easier to conceal. These industries experience heavy traffic, fast onboarding, global user flows and multiple payment options, all conditions that allow bot activity and card testing scripts to blend into everyday patterns.

Fraudsters also see high-risk verticals as efficient testing grounds. Before running attacks on mainstream brands, they often use these sectors to fine-tune timing, retry logic and BIN sequencing. This makes early detection particularly important for merchants operating in these categories.

Across the high-risk landscape, several factors make these businesses more vulnerable:

- High transaction frequency, which masks card-testing bursts

- Multiple payment rails, giving attackers more surfaces to probe

- Higher natural decline rates, making fraud harder to distinguish from real user friction

- Global customer bases, which attract identity and device switching

Industries like iGaming, travel, adult content, forex and CBD frequently experience attacks that appear harmless at first: small failed attempts, quick retries or repeated authentications. But viewed in sequence, these behaviours form recognisable velocity patterns. PSPs now treat these sectors as early indicators of emerging attack methods, often updating detection models based on activity observed here before rolling improvements out to lower-risk categories.

For merchants in high-risk markets, the takeaway is simple: velocity detection isn’t just protective, it’s a competitive advantage. Identifying these early patterns helps reduce issuer friction, maintain acquirer trust and prevent the type of escalation that leads to scheme monitoring or reserve increases.

Cross-Rail Velocity: Cards, A2A, Wallets & Open Banking Attacks

As merchants adopt multiple payment rails, fraudsters have adapted their methods to move just as fluidly across them. In 2026, velocity attacks aren’t limited to card transactions; they span cards, A2A transfers, digital wallets, and open banking flows. Each rail has its own signals, rhythms and failure patterns, and fraudsters exploit these differences to identify which path gives them the least resistance.

Card velocity attacks remain the most common, especially for card testing. These patterns typically include low-value attempts made in tight intervals, rapid switching between BINs and repeated authentication failures with only slight variations. But fraud no longer stops there. Many fraud rings now probe A2A and open banking rails at the same time, attempting to exploit the timing of bank redirects or authentication steps. When these attempts happen too quickly, too consistently, or in unnatural order, PSPs recognise that the behaviour isn’t user-driven.

Digital wallets show their own form of velocity. Fraudsters run rapid biometric retries, token-refresh loops or device-switching patterns to identify when a wallet might bypass a failing card. In some cases, multiple instruments are tested from a single wallet within seconds, creating a pattern that genuine users rarely produce.

Across all these rails, the consistent theme is timing. While the data fields differ between card payments, A2A and wallets, the behaviour of automation remains visible when plotted over time. A shopper might retry a failed payment once or twice; a bot will retry across four rails, escalating values and rotating devices. By evaluating velocity across all rails rather than one, PSPs can detect early-stage fraud that would otherwise be invisible if each rail were examined in isolation.

For merchants, cross-rail velocity matters because fraud rarely enters through a single door anymore. Attackers test the entire payment stack, moving quickly from one method to another. Detecting this movement early protects approval rates, reduces issuer friction and prevents fraud from spreading into more costly downstream outcomes.

Behind the Scenes: How PSPs’ Graph, AI & Behavioural Engines Interpret Velocity

Velocity signals only become meaningful when PSPs combine them with deeper behavioural and identity intelligence. Modern fraud engines don’t simply count attempts; they map relationships, compare patterns across merchants, and evaluate how each action fits into a broader behavioural history. This layered analysis allows PSPs to detect attacks within seconds, often before a single successful transaction occurs.

At the core of this capability is graph technology. Identity graphs link devices, cards, IP ranges, session behaviours, authentication attempts and historical outcomes across millions of transactions. When a botnet rotates devices or switches cards at high speed, the graph reveals common anchors: shared infrastructure, identical browser signatures, or timing patterns that appear across unrelated accounts. What looks normal in isolation becomes suspicious in context.

AI models sit on top of these graphs, evaluating the shape of behaviour rather than the fields within a transaction. Instead of asking “Is this card risky?” they ask, “Does this behaviour match the trajectory of previous fraud?” This allows PSPs to identify fraud even when the card numbers, devices and user attributes appear valid. For example, a payment may pass all standard checks but still be flagged because its timing pattern resembles known automation clusters.

Behavioural engines add another layer by analysing user interaction signals: mouse movement, typing speed, scroll behaviour, latency variance, redirection timing and authentication dynamics. These systems can distinguish genuine indecision from machine-driven repetition. Even small behavioural anomalies, like identical typing intervals or perfectly symmetrical session timing, are strong indicators of automated scripts.

When these three components (graphs, AI modelling and behavioural analytics) are combined, PSPs gain an exceptionally early view of emerging fraud. Many sophisticated attacks never reach the authorisation stage because the system recognises hostile sequencing, suspicious timing or cross-rail orchestration long before a genuine transaction would be expected to occur.

For merchants, the benefit is tangible. Issuer trust improves, false declines fall, and chargeback exposure drops significantly when PSPs can stop fraud at the behavioural level rather than waiting for financial signals. In high-risk environments, where patterns change quickly and attackers constantly evolve, this behind-the-scenes intelligence is often the difference between stable approval rates and escalating operational loss.

Predictive Compliance 2026: The New Expectations

Regulators are moving away from retrospective fraud reporting and toward models that require firms to anticipate threats before they materialise. Predictive compliance is becoming the new standard, where PSPs and merchants must demonstrate not only how fraud is detected, but also how behaviours are monitored, scored, and escalated in real time. By 2026, compliance teams are expected to understand behavioural anomalies, velocity indicators and cross-rail patterns with the same fluency they once applied to traditional AML checks.

Predictive oversight is now tied directly to risk controls. If a merchant cannot demonstrate early-warning capabilities, acquirers may introduce higher reserves, delay onboarding, or require enhanced transaction monitoring. This shift is especially pronounced for high-risk sectors, where velocity-based attacks frequently precede chargebacks, fraud events and scheme penalties.

Where Regulators Are Moving Next

Regulatory models increasingly favour systems that recognise patterns before financial harm occurs. Instead of waiting for fraud losses, compliance frameworks now emphasise:

- Continuous behavioural monitoring, not static rule checks

- Real-time anomaly detection, especially around timing and retries

- Link analysis, showing how devices, IPs and identities connect

- Documentation of predictive models, including escalation triggers

Regulators expect PSPs and merchants to justify why a pattern was detected and how it was actioned. Predictive controls are no longer optional; they are becoming a measurable compliance obligation.

What PSPs Expect Merchants to Demonstrate

PSPs now benchmark merchants based on how proactively they identify abnormal activity. The stronger a merchant’s predictive processes, the more confidence the PSP has in offering lower reserves and smoother approvals. In 2026, merchants must be able to show:

- Early detection of velocity clusters before chargebacks occur

- Documented incident patterns, including cross-rail behaviour

- Evidence of adaptive rules, not static filters

- Clear escalation paths when sequences resemble known attack models

- Real-time alerting, not end-of-day reconciliation

Strong predictive compliance not only helps with fraud mitigation; it also signals operational maturity. PSPs increasingly factor these capabilities into underwriting reviews, ongoing monitoring and annual risk scoring.

When merchants can demonstrate that they understand and respond to behavioural patterns, they reduce the likelihood of entering scheme monitoring, facing funding delays or undergoing enhanced due diligence. Predictive compliance ultimately becomes a commercial advantage.

Conclusion

Fraud in 2026 is no longer defined by individual bad transactions; it is defined by the speed, structure and coordination behind them. Bot attacks, card testing, identity rotation and cross-rail probing all share one attribute: they reveal themselves through behaviour, not single data points. That is why velocity monitoring and sequence modelling have become essential tools not only for fraud prevention, but for maintaining issuer trust, reducing chargebacks and passing PSP compliance reviews.

Merchants who treat fraud as a pattern-recognition problem rather than a rule-based one gain a substantial advantage. They detect attacks earlier, avoid expensive operational disruptions and demonstrate to acquirers that they can manage risk proactively. In high-risk environments, this capability often determines whether a business maintains stable approvals or faces elevated reserves, scheme monitoring or onboarding friction.

As regulators shift toward predictive compliance, and as PSPs rely heavily on behavioural intelligence, merchants that invest in velocity analysis, cross-rail monitoring and sequence-driven fraud models will be better positioned for sustainable growth. Fraud prevention is no longer about reacting to losses. It is about recognising intention before a transaction ever settles, and in 2026, that is the difference between an exposed merchant and a resilient one.

FAQs

1. What is fraud velocity and why does it matter in 2026?

Fraud velocity refers to how quickly related transactions occur, how many attempts are made, how fast they are executed and how behaviour escalates over time. In 2026, PSPs use velocity signals to identify bot activity, card testing and automated abuse long before any successful transaction or chargeback occurs.

2. How is transaction sequence modelling different from traditional fraud checks?

Traditional fraud rules evaluate transactions individually. Sequence modelling looks at the order, timing and progression of events. Even if each attempt appears harmless, the pattern as a whole can reveal automation or coordinated fraud.

3. Why are high-risk merchants targeted first by velocity attacks?

High-risk sectors such as iGaming, travel, adult, trading and CBD process high traffic, low-value authorisations and global payments. These environments allow bots and testing scripts to blend into normal customer behaviour, making them ideal for early-stage attack testing.

4. Can fraud velocity be detected across multiple payment rails?

Yes. PSPs now monitor velocity across cards, digital wallets, A2A rails and open banking flows. Fraudsters often test several rails in the same attack, and cross-rail velocity reveals this movement earlier than rail-specific monitoring.

5. What signals indicate that an attack is bot-driven?

Common indicators include perfectly timed retries, rapid BIN cycling, identical session timing, millisecond-level spacing, device switching in patterns and repeated authentication failures that occur too quickly to be human-driven.

6. Are PSPs required to use predictive fraud detection?

While not always mandated explicitly, regulators increasingly expect PSPs to operate proactive monitoring systems. Predictive detection aligns with evolving FCA, EU, and international financial-crime expectations, helping reduce systemic fraud risk.

7. What does predictive compliance mean for merchants?

Predictive compliance means merchants must demonstrate that they can detect behavioural anomalies early, not just report fraud after it happens. PSPs now assess whether a merchant has early-warning capabilities, real-time escalation procedures and adaptive risk controls.

8. How does sequence modelling help reduce chargebacks?

Card testing and bot activity often precede large-scale fraud, which eventually creates chargebacks. Sequence modelling identifies early patterns, allowing PSPs and merchants to block attacks before they reach the point of unauthorised transactions or disputes.

9. Can high-risk merchants lower their reserves by improving fraud velocity controls?

Yes. PSPs reward merchants with strong predictive fraud capabilities, which can lead to lower reserves, fewer funding delays and better approval rates. Strong velocity detection demonstrates operational maturity.

10. Do merchants need specialised tools, or do PSPs handle velocity monitoring?

PSPs provide core velocity and sequence detection, but merchants improve results significantly when they add their own session monitoring, device analytics and behavioural signals. Combined intelligence leads to more accurate risk scoring and fewer false declines.