Payment fraud is evolving faster than traditional decisioning systems can respond. As digital commerce expands across cards, A2A payments, mobile wallets and instant-payment networks, attackers are no longer relying on crude credential theft. Instead, they exploit behavioural blind spots, synthetic identities, device-switching patterns and cross-border transaction sequencing that can slip past static rule engines. By 2026, PSPs face rising pressure from merchants and regulators to detect fraud earlier, reduce false positives and stop chargebacks before they occur.

This pressure is amplified by new regulatory expectations. Under the forthcoming PSD3 and PSR, European PSPs will need to demonstrate stronger authentication controls while minimising unnecessary friction. In the UK, the FCA is pushing for more proactive scam detection, including risk-based SCA and real-time monitoring of high-risk behaviours.

APAC regulators such as the RBI and MAS are implementing similar real-time fraud-screening requirements across instant-payment systems like UPI and PayNow.

Against this backdrop, PSPs are moving beyond traditional fraud tools. Rule engines that once worked for predictable card-not-present patterns cannot keep up with today’s fluid, multi-rail payment landscape. Fraud behaviour shifts hourly, not quarterly. Issuers rely on richer signals to inform approval decisions. Merchants demand fewer false declines and more transparency. And high-risk industries expect PSPs to detect abuse and first-party misuse before authorisation, not after the chargeback arrives.

This is where transaction-level AI becomes essential. Instead of reviewing batches of transactions or relying on fixed rules, modern PSPs evaluate each transaction individually using predictive models trained on millions of behavioural, device and network signals. These models operate in real time, scoring risk at the exact moment of checkout, before SCA, before routing, and before any irreversible decline or chargeback occurs.

In 2026, this is not an upgrade. It is the new baseline. PSPs that cannot deliver accurate, dynamic transaction-level scoring risk higher fraud, lost volume, increased issuer friction and dissatisfied merchants. Those that adopt predictive AI gain a structural advantage: better detection, fewer false declines and stronger approval performance across every payment rail.

- The Rise of Transaction-Level AI: What It Actually Means

- The Data Science Behind Real-Time Transaction Scoring

- The Types of Predictive Fraud Models PSPs Now Rely On

- Predictive AI vs Legacy Rule Engines: Why Rules Fail in 2026

- How Transaction-Level AI Reduces Chargebacks

- False Declines: The Silent Revenue Killer & How AI Fixes It

- Real-Time AI Across Multi-Rail Payments (Cards, A2A, Instant, Wallets)

- Balancing Fraud Detection and Conversion: The PSP Trade-Off Model

- Regulatory Requirements Shaping PSP Fraud Models in 2026

- Case Studies: How Different Industries Apply Transaction-Level AI

- Case Study A: European FX Platform (Device Switching & High-Velocity Behaviour)

- Case Study B: Global Marketplace (Graph Intelligence for Buyer-Seller Networks)

- Case Study C: European iGaming Operator (First-Party Misuse Patterns)

- Case Study D: Subscription Platform (Reducing False Declines)

- Case Study E: LATAM PSP (Instant-Payment Fraud Patterns Across Alias Systems)

- Where AI Lives Inside the PSP Stack (Cascading Risk Rules + Orchestration Integration)

- The Merchant KPIs Most Impacted by Transaction-Level AI

- A Practical Roadmap for PSPs Deploying Transaction-Level AI

- The Future: Federated AI Models Across PSPs, Schemes and Acquirers

- Conclusion

- FAQs

The Rise of Transaction-Level AI: What It Actually Means

For many years, PSP fraud systems relied on two familiar pillars: static rules and post-transaction scoring. Rules handled predictable patterns (mismatched IP and billing locations, repeated failed attempts, abnormal basket sizes), while offline scoring models processed transactions in batches to refine future decisions. This structure worked when fraud behaviour changed slowly. It does not work in 2026.

Fraud today evolves every hour. Fraud rings test thousands of micro-transactions across different BIN ranges. Device farms replicate user journeys at scale. Synthetic identities simulate legitimate patterns until the moment of exploitation. Attackers exploit orchestration blind spots by shifting between cards, open banking payments and instant-payment networks.

A rule set written last month or even last week can quickly become irrelevant.

Transaction-level AI changes this. Instead of relying on fixed logic, PSPs evaluate each transaction in real time using predictive models that compare the customer’s behaviour, device attributes, location signals and journey pattern against millions of previous data points. These models analyse how “normal” or “abnormal” the transaction appears relative to similar profiles, even when it is the first time a specific user appears in the system.

This approach is fundamentally different from legacy systems. Transaction-level AI does not apply a generic rule such as “block all high-velocity attempts.” It evaluates why the velocity looks abnormal, whether the behaviour aligns with known fraud clusters, and whether the device characteristics mirror patterns associated with bots or automated scripts. At the same time, the model assesses whether the customer’s behaviour resembles legitimate but unusual scenarios, for example a real user logging in from a new device while travelling.

For PSPs, the rise of transaction-level AI is also a response to merchant and regulatory pressure. Merchants want fewer chargebacks and fewer false declines. Regulators in Europe, the UK and APAC expect financial institutions to detect scams earlier and rely on risk-based authentication wherever appropriate. PSPs that continue using outdated rule engines cannot keep up with these requirements. Transaction-level AI provides a dynamic, scalable foundation for detecting fraud without sacrificing conversion.

This shift marks the beginning of a new era in fraud prevention: one defined by prediction, flexibility and continuous adaptation instead of rigid thresholds and fixed rules.

The Data Science Behind Real-Time Transaction Scoring

Modern PSPs did not arrive at real-time fraud prediction overnight. What sits beneath today’s transaction-level AI is a blend of data science, statistical modelling and practical experience built from millions of payment journeys. At its core, real-time scoring is the ability to judge whether a transaction looks normal compared with everything the PSP has seen before, and to make that judgment within a few milliseconds.

The scoring process begins long before the payment is submitted. PSPs collect behavioural and device signals from the moment a user lands on the checkout page: how quickly they type, whether their device is jailbroken, whether their location jumps unexpectedly, and whether the connection resembles patterns used by bots or farms. None of these signals means anything on its own. What matters is how they relate to one another. This is where the data science comes in.

Instead of creating fixed rules, PSPs train models to learn what legitimate customer behaviour looks like. The model identifies patterns across past approvals, past declines and confirmed fraud. Over time, it learns to tell a genuine customer logging in from a new device apart from a fraudster attempting account takeover. These distinctions are subtle and often invisible to a rule engine, which is why predictive models are now essential.
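
A minimal sketch of that supervised learning step, assuming each historical transaction has already been reduced to a numeric feature vector and labelled 1 for confirmed fraud or 0 for a legitimate outcome. It fits a logistic regression by plain gradient descent; the three feature names are hypothetical, and production PSPs typically use richer models such as gradient-boosted trees.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(rows, labels, lr=0.5, epochs=500):
    """Fit logistic-regression weights and bias by batch gradient descent on log-loss."""
    n, n_features = len(rows), len(rows[0])
    weights, bias = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for x, y in zip(rows, labels):
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            err = p - y  # gradient of log-loss with respect to the logit
            for j in range(n_features):
                grad_w[j] += err * x[j]
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def fraud_probability(weights, bias, x):
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


# Hypothetical features: [new_device, ip_billing_country_mismatch, attempts_last_hour / 10]
history = [
    [0, 0, 0.1], [0, 0, 0.2], [1, 0, 0.1], [0, 1, 0.1],  # legitimate outcomes
    [1, 1, 0.9], [1, 1, 0.7], [1, 0, 0.8],               # confirmed fraud
]
outcomes = [0, 0, 0, 0, 1, 1, 1]
w, b = train_logistic(history, outcomes)
```

On this toy data a new device alone is not decisive: `fraud_probability(w, b, [1, 0, 0.1])`, a genuine customer on a new phone, scores below `fraud_probability(w, b, [1, 1, 0.9])`, where the new device coincides with a location mismatch and high velocity.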

A significant part of real-time scoring is anomaly detection, a method that spots behaviour falling outside expected patterns. This is especially useful in high-risk sectors where fraud attempts spike suddenly and unpredictably. If a session presents a device signature the PSP has never seen, or a login sequence that resembles known bot activity, the model reacts instantly.

Another layer is sequence modelling. Fraud is rarely a single event; it’s a sequence of actions leading to the payment attempt. Data science helps detect these patterns: how many attempts were made before this one, how quickly they occurred, whether the session behaviour changed halfway through, and whether it aligns with patterns linked to past fraud rings.
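
A minimal sketch of how a session's attempt history might be turned into sequence features, assuming each prior attempt is recorded with a timestamp in seconds, an amount and an approval flag. The feature set and the "small probes followed by a large payment" heuristic (including the 50× multiplier) are illustrative assumptions, not a standard definition.

```python
from dataclasses import dataclass


@dataclass
class Attempt:
    ts: float       # seconds since session start (hypothetical clock)
    amount: float   # amount in major currency units
    approved: bool


def sequence_features(prior: list[Attempt], current: Attempt, small_amount: float = 2.0) -> dict:
    """Summarise the attempts that preceded `current` in the same session."""
    prior = sorted(prior, key=lambda a: a.ts)
    timeline = prior + [current]
    gaps = [b.ts - a.ts for a, b in zip(timeline, timeline[1:])]
    probes = [a for a in prior if a.amount <= small_amount]
    return {
        "prior_attempts": len(prior),
        "prior_failures": sum(1 for a in prior if not a.approved),
        "min_gap_s": min(gaps) if gaps else None,
        "small_probe_count": len(probes),
        # two or more low-value probes followed by a much larger payment
        "probe_then_large": len(probes) >= 2 and current.amount >= 50 * small_amount,
    }


session = [Attempt(0, 1.00, False), Attempt(4, 1.50, True), Attempt(9, 1.00, True)]
features = sequence_features(session, Attempt(12, 400.0, False))
```

Here three sub-£2 probes arrive a few seconds apart before a 400-unit payment, so `probe_then_large` is `True` and `min_gap_s` is 3. A model would consume these values as inputs rather than acting on any one of them alone.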

The important point is that none of this is happening in a vacuum. PSPs feed the models with continuous feedback from issuers, chargeback outcomes, merchant data, corridor-specific approval trends and SCA histories. This makes the models more representative, more adaptive and more accurate over time.
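
A minimal sketch of that feedback loop, assuming a logistic scorer whose weights are nudged by one stochastic-gradient step whenever a delayed outcome arrives (for example, a confirmed fraud chargeback as label 1). The class name, learning rate and feature layout are hypothetical; many PSPs instead retrain periodically in batch and validate before deploying.

```python
import math


class OnlineScorer:
    """Logistic scorer updated one labelled outcome at a time."""

    def __init__(self, n_features: int, lr: float = 0.1):
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = lr

    def predict(self, x: list[float]) -> float:
        z = sum(wi * xi for wi, xi in zip(self.w, x)) + self.b
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, x: list[float], label: int) -> None:
        """Single SGD step on log-loss once the true outcome is known."""
        err = self.predict(x) - label
        self.w = [wi - self.lr * err * xi for wi, xi in zip(self.w, x)]
        self.b -= self.lr * err


scorer = OnlineScorer(n_features=2)
tx = [1.0, 0.0]               # hypothetical features: [new_device, corridor_mismatch]
before = scorer.predict(tx)   # 0.5 while the weights are still zero
scorer.update(tx, label=1)    # a fraud chargeback is later confirmed for this pattern
after = scorer.predict(tx)
```

After a single confirmed-fraud label, the same feature pattern scores slightly higher than before; repeated over many outcomes, this is how confirmed and reversed cases shift the model.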

The result is a scoring engine that does not simply react to fraud. It anticipates fraud, identifying subtle warning signs long before a dispute, chargeback or issuer decline ever reaches the merchant.

The Types of Predictive Fraud Models PSPs Now Rely On

Modern fraud prevention isn’t powered by one model or one logic layer. PSPs use several analytical approaches at the same time, each designed to spot a different type of suspicious behaviour. Together, they create a layered intelligence system that responds faster and more accurately than the rule engines merchants relied on a decade ago.

Unlike static rules, these models continue learning as transaction patterns evolve. That makes them far more resilient in a world where fraud behaviour shifts constantly across cards, instant payments and A2A rails.

- Behavioural Modelling

Behavioural models examine how a customer behaves during a session rather than only what they enter. They analyse typing rhythm, navigation patterns, the pace of actions and whether the journey resembles a real human or an automated script. These models are especially helpful in spotting account takeover attempts, scripted attacks or users trying to mimic legitimate customers.
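
A minimal sketch of one behavioural signal, assuming the checkout page captures keystroke timestamps in milliseconds. Human typing tends to produce irregular gaps between keys, while naive scripts often type at a near-constant rate, so the coefficient of variation of inter-key intervals is a simple (and easily spoofed) indicator. The 0.1 cut-off is a hypothetical value, not a recommended threshold.

```python
import statistics


def keystroke_regularity(timestamps_ms: list[float]) -> float:
    """Coefficient of variation (stdev / mean) of the gaps between keystrokes."""
    gaps = [b - a for a, b in zip(timestamps_ms, timestamps_ms[1:])]
    if len(gaps) < 2:
        raise ValueError("need at least three keystrokes")
    mean_gap = statistics.mean(gaps)
    return statistics.pstdev(gaps) / mean_gap if mean_gap > 0 else 0.0


def looks_scripted(timestamps_ms: list[float], cv_cutoff: float = 0.1) -> bool:
    # Hypothetical cut-off; in practice this would be one feature among many.
    return keystroke_regularity(timestamps_ms) < cv_cutoff


human = [0, 180, 310, 560, 650, 900]   # uneven rhythm
bot = [0, 50, 100, 150, 200, 250]      # perfectly even rhythm
```

`looks_scripted(bot)` returns `True` because every gap is identical, while the human sample has a coefficient of variation of roughly 0.35.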

- Device Intelligence Models

Every device leaves a signature: a combination of hardware, software configuration and connection characteristics. PSPs use device models to understand whether a new transaction comes from a trusted device or one connected to known fraud clusters. When fraud rings reuse devices across multiple identities, device models expose these links long before a chargeback arrives.
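
A minimal sketch of exact-match device linking, assuming a few device attributes are available at checkout. The attributes are normalised and hashed into a stable fingerprint, which is then checked against a hypothetical set of devices tied to confirmed fraud and a hypothetical map of identities seen per device. Real device intelligence relies on far richer, fuzzier matching, because fraudsters deliberately vary individual attributes.

```python
import hashlib
import json


def device_fingerprint(attrs: dict) -> str:
    """Stable SHA-256 fingerprint of case- and whitespace-normalised device attributes."""
    normalised = {k: str(v).strip().lower() for k, v in attrs.items()}
    canonical = json.dumps(normalised, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def device_risk(attrs: dict, fraud_fingerprints: set, identities_by_device: dict) -> dict:
    fp = device_fingerprint(attrs)
    return {
        "fingerprint": fp,
        "linked_to_fraud": fp in fraud_fingerprints,
        # Many identities on one device is a common sign of a device farm or fraud ring.
        "identities_on_device": len(identities_by_device.get(fp, set())),
    }


phone = {"os": "Android 14", "model": "Pixel 8", "timezone": "Europe/Berlin"}
same_phone = {"os": "android 14 ", "model": "PIXEL 8", "timezone": "Europe/Berlin"}
fp = device_fingerprint(phone)
risk = device_risk(same_phone, fraud_fingerprints={fp},
                   identities_by_device={fp: {"acct-1", "acct-2", "acct-3"}})
```

Normalisation means `phone` and `same_phone` produce the same fingerprint, so the second session is linked to the fraud-flagged device and to the three identities already seen on it.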

- Velocity and Sequence Models

Fraud rarely happens in isolation. Velocity models look for rapid-fire attempts, repeated failures, unusually fast browsing or inconsistencies in user movement. Sequence models go deeper by analysing the order of actions. They detect behaviour such as multiple test transactions, pattern probing, or subtle changes that occur just before the fraudster initiates a high-value payment.
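
A minimal sketch of a velocity counter, assuming attempts are keyed by an identifier such as a card token, device fingerprint or account, and arrive roughly in time order. It keeps a sliding window per key and reports how many attempts and failures fall inside it. The 60-second window and the key format are hypothetical choices.

```python
from collections import defaultdict, deque


class VelocityCounter:
    """Sliding-window attempt and failure counts per key (card token, device, account)."""

    def __init__(self, window_s: float = 60.0):
        self.window_s = window_s
        self.events = defaultdict(deque)  # key -> deque of (timestamp, failed)

    def record(self, key: str, ts: float, failed: bool) -> tuple[int, int]:
        q = self.events[key]
        q.append((ts, failed))
        # Evict events older than the window (assumes roughly ordered timestamps).
        while q and q[0][0] <= ts - self.window_s:
            q.popleft()
        return len(q), sum(1 for _, f in q if f)


vc = VelocityCounter(window_s=60)
for t in (0, 5, 9, 14):
    vc.record("card-tok-123", t, failed=True)      # four rapid failed attempts
burst = vc.record("card-tok-123", 20, failed=False)
later = vc.record("card-tok-123", 75, failed=False)
```

At second 20 the token shows five attempts and four failures inside one minute (`burst == (5, 4)`). By second 75 the early failures have aged out (`later == (2, 0)`). A velocity model would weigh these counts against what is normal for that merchant instead of applying one fixed limit.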

- Anomaly Detection and Outlier Scoring

This is one of the most powerful tools for PSPs. Instead of predicting specific behaviours, anomaly models look for anything that falls outside the normal transaction pattern. If a payment behaves differently from the PSP’s historical data, even in ways humans wouldn’t notice, the model flags it instantly. Anomaly detection is invaluable for emerging fraud types that have no historical reference.
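
A minimal sketch of outlier scoring on a single numeric feature, assuming a list of recent transaction amounts for one merchant. It uses the modified z-score (median and median absolute deviation, or MAD), which the outliers themselves distort less than a mean/standard-deviation z-score would. The 3.5 cut-off is a commonly cited rule of thumb. Real systems score many features jointly, for example with isolation forests or autoencoders.

```python
import statistics


def modified_z_scores(values: list[float]) -> list[float]:
    """Iglewicz-Hoaglin modified z-score: 0.6745 * (x - median) / MAD."""
    med = statistics.median(values)
    mad = statistics.median([abs(v - med) for v in values])
    if mad == 0:
        # At least half the values equal the median; treat any deviation as extreme.
        return [0.0 if v == med else float("inf") for v in values]
    return [0.6745 * (v - med) / mad for v in values]


def outliers(values: list[float], cutoff: float = 3.5) -> list[float]:
    return [v for v, z in zip(values, modified_z_scores(values)) if abs(z) > cutoff]


# Hypothetical recent ticket sizes for one merchant
amounts = [42.0, 39.5, 45.0, 41.0, 38.0, 44.0, 40.0, 950.0]
flagged = outliers(amounts)
```

Only the 950.0 payment is flagged. Its modified z-score is in the hundreds, while every other amount stays close to 1 or below.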

- Graph and Network Models

Some fraud attempts involve networks of related accounts, devices or identities. Graph models map these links, helping PSPs uncover fraud rings rather than isolated attempts. These models are particularly relevant in high-risk verticals like iGaming, trading and marketplaces, where attackers often operate in coordinated groups.
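
A minimal sketch of the linking step, assuming each event pairs an account with a shared attribute such as a device fingerprint or card token. A union-find structure groups accounts connected through any chain of shared attributes, which is the basic idea behind surfacing fraud rings. Production graph systems add edge weights, time decay and community-detection algorithms. All identifiers below are hypothetical.

```python
from collections import defaultdict


class UnionFind:
    def __init__(self):
        self.parent = {}

    def find(self, x):
        self.parent.setdefault(x, x)
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a, b):
        ra, rb = self.find(a), self.find(b)
        if ra != rb:
            self.parent[rb] = ra


def account_clusters(links: list[tuple[str, str]]) -> list[set[str]]:
    """Group accounts connected through shared attributes (device, card, IP...)."""
    uf = UnionFind()
    for account, attribute in links:
        uf.union(("acct", account), ("attr", attribute))
    groups = defaultdict(set)
    for node in list(uf.parent):
        kind, name = node
        if kind == "acct":
            groups[uf.find(node)].add(name)
    return list(groups.values())


links = [("alice", "dev-1"), ("bob", "dev-1"), ("bob", "card-9"),
         ("carol", "card-9"), ("dave", "dev-7")]
clusters = account_clusters(links)
```

Alice and Carol never share an attribute directly, but both connect through Bob, so the sketch places all three in one cluster and leaves Dave on his own.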

- Hybrid Models Used for Regulatory Explainability

Because regulators expect clear justification for automated decisions, PSPs often combine AI models with transparent rule layers. These hybrid systems offer the accuracy of machine learning while retaining the auditability required under PSD3, the FCA guidelines and other supervisory frameworks. This allows PSPs to explain why a transaction was blocked, challenged or approved, a key expectation in 2026.
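
A minimal sketch of a hybrid decision layer, assuming the model score has already been computed. Explicit rules run first, the score is bucketed, and every outcome carries reason codes that can be stored for audit. The rule names, transaction fields, thresholds and reason-code strings are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Decision:
    action: str                                   # "approve", "challenge" or "block"
    reasons: list = field(default_factory=list)   # audit trail of reason codes


def hybrid_decision(model_score: float, tx: dict,
                    challenge_at: float = 0.5, block_at: float = 0.9) -> Decision:
    """Explicit rules first, then a bucketed model score; every path records why."""
    # Hard rules are deterministic and easy to explain to a supervisor.
    if tx.get("device_on_denylist"):
        return Decision("block", ["RULE_DENYLISTED_DEVICE"])
    reasons = []
    if tx.get("amount", 0.0) > tx.get("merchant_max_amount", float("inf")):
        reasons.append("RULE_AMOUNT_ABOVE_MERCHANT_LIMIT")
    # The model score is bucketed, and the bucket boundary is logged with it.
    if model_score >= block_at:
        return Decision("block", reasons + [f"MODEL_SCORE_{model_score:.2f}_GE_{block_at}"])
    if model_score >= challenge_at or reasons:
        return Decision("challenge", reasons + [f"MODEL_SCORE_{model_score:.2f}"])
    return Decision("approve", [f"MODEL_SCORE_{model_score:.2f}_LT_{challenge_at}"])
```

For example, `hybrid_decision(0.2, {"amount": 800.0, "merchant_max_amount": 500.0})` returns a challenge whose reasons show that a rule triggered it even though the model score was low. This is the kind of decision pathway a reviewer can follow.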

Predictive AI vs Legacy Rule Engines: Why Rules Fail in 2026

Legacy fraud systems were built for a different era, one where fraud patterns evolved slowly, cross-border traffic was limited, and merchants relied heavily on cards alone. In 2026, that world no longer exists. Fraud moves quickly across multiple payment rails, digital identity has become more fluid, and attackers now experiment with micro-transactions to find weaknesses. Static rule engines cannot keep up with this speed or complexity.

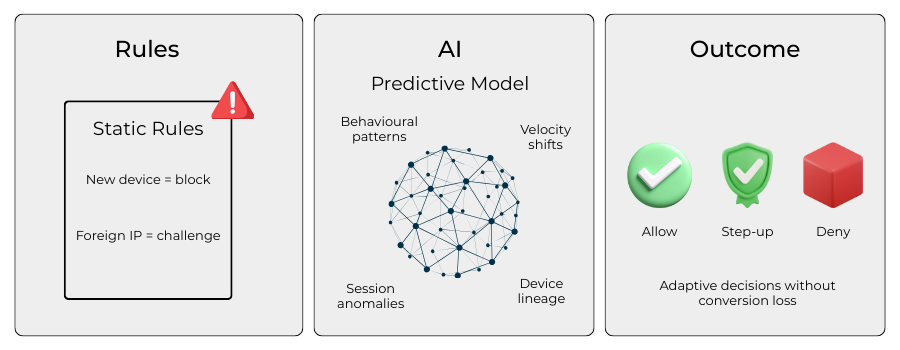

Predictive AI, by contrast, adapts in real time. It learns from changing behaviour, isolates subtle risk indicators and responds before fraud escalates into a chargeback or issuer decline. To understand why PSPs are shifting away from old systems, it helps to look at the fundamental differences between rules-based screening and modern predictive modelling.

The Limits of Fixed Thresholds and Static Logic

Rules operate on hard boundaries. For example, a merchant may block all transactions from a particular IP range or flag every high-value purchase for manual review. These approaches work when attacks follow predictable patterns, but modern fraud rarely does. Fraudsters test thousands of combinations, shift device signatures and mimic legitimate behaviour until they find a threshold that lets them through.

Static rules cannot adjust on the fly, which leads to two predictable failures: allowing sophisticated fraud through and incorrectly blocking genuine customers. For high-risk merchants, the cost of these misclassifications is substantial.

Why Predictive Models Adapt Better to New Fraud Patterns

Predictive AI takes a different path. Instead of enforcing fixed logic, it analyses transactions in context: how they compare to previous user behaviour, whether they fit historical merchant patterns, and whether the device or journey resembles known fraud sequences. The model evolves as new data flows in, updating its judgment each time fraud is confirmed or reversed.

Because the model learns continuously, PSPs are able to stay ahead of emerging attacks rather than reacting after the fact. This is crucial at a time when fraud groups experiment daily with new approaches across instant-payment systems, wallets and A2A flows.

Reducing False Positives by Understanding User Intent

One of the major weaknesses of legacy rules is their tendency to misinterpret unusual-but-legitimate behaviour as fraud. A customer logging in from a new device, travelling abroad, or using a foreign BIN may appear risky through a rules-only lens. Predictive models examine the broader context (device fingerprint, behavioural rhythm, past interactions) and distinguish between harmless anomalies and real threats.

This refinement leads to fewer false declines, higher approval rates and better issuer alignment, especially when paired with strong authentication.

Improving Real-Time Decisioning at Checkout

The checkout moment is where PSPs must balance fraud control and conversion. Rules often create unnecessary friction because they cannot weigh multiple factors simultaneously. Predictive AI can. It evaluates hundreds of signals in milliseconds and delivers a risk score that influences whether to challenge the customer, route differently, or approve immediately.

For merchants, this means fewer abandoned carts and fewer frustrated customers at the point of payment, one of the most sensitive stages of the user journey.

Hybrid Systems for Compliance and Auditability

Even with advanced AI, PSPs cannot rely on machine learning alone. Regulators such as the FCA and EU supervisors expect fraud decisions to be explainable and justified, especially when transactions are declined or challenged. Predictive systems therefore run alongside structured rule layers, allowing PSPs to explain the decision pathway clearly.

This hybrid approach combines the accuracy of AI with the transparency required by PSD3 and national regulators, giving PSPs the best of both worlds: strong fraud detection and full audit readiness.

How Transaction-Level AI Reduces Chargebacks

Chargebacks are often treated as a back-office cost: something to be managed after the fact through dispute teams and evidence packs. In reality, most of the damage is already done by the time a chargeback arrives. Funds are locked up, operational time is spent on representment, and issuer relationships can be strained if ratios stay high for too long. For PSPs working with high-risk merchants, this is not sustainable.

Transaction-level AI changes the timeline. Instead of reacting to disputes, PSPs are able to stop many of the underlying transactions before they ever hit the chargeback stage. The models work at the moment of authorisation, scoring each attempt on its likelihood of becoming fraud or misuse in the future. If the risk is high, the PSP can block, step up, or route differently long before the issuer has to raise a dispute.

Moving prevention upstream

One of the biggest shifts is how early decisions are made. In older setups, fraud teams might review suspicious activity in batches after settlement, refine rules, and hope to catch similar attacks next time. By then, the first wave of chargebacks is already in motion.

With transaction-level AI, the PSP evaluates signals that correlate strongly with later disputes:

- Sudden changes in device, location or behaviour

- Connections to known fraud clusters

- Patterns that historically lead to “fraud” or “goods not received” claims

- Traces of scripted or automated behaviour

The model is trained on past chargeback data, so it learns the journey that typically precedes a dispute. When a similar journey appears again, the system intervenes in real time.
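
A minimal sketch of turning chargeback history into training labels, assuming transactions and chargebacks share a transaction ID and each chargeback carries a simplified reason category. A transaction is labelled 1 if a fraud or goods-not-received dispute arrived within a hypothetical 120-day window and 0 if the window has closed without one. It is left unlabelled (`None`) while the window is still open, so that recent transactions are not wrongly treated as clean. Real scheme reason codes and dispute timeframes vary by network and reason.

```python
from datetime import date, timedelta
from typing import Optional

DISPUTE_WINDOW = timedelta(days=120)  # hypothetical; real windows vary by scheme and reason
POSITIVE_REASONS = {"fraud", "goods_not_received"}


def label_transactions(
    txs: dict[str, date],
    chargebacks: dict[str, tuple[date, str]],
    today: date,
) -> dict[str, Optional[int]]:
    """1 = disputed for a target reason, 0 = window closed without one, None = too early."""
    labels = {}
    for tx_id, tx_date in txs.items():
        cb = chargebacks.get(tx_id)
        if cb and cb[1] in POSITIVE_REASONS and cb[0] - tx_date <= DISPUTE_WINDOW:
            labels[tx_id] = 1
        elif today - tx_date > DISPUTE_WINDOW:
            labels[tx_id] = 0  # includes disputes raised for non-target reasons
        else:
            labels[tx_id] = None
    return labels


txs = {"t1": date(2025, 1, 10), "t2": date(2025, 1, 12), "t3": date(2025, 6, 1)}
chargebacks = {"t1": (date(2025, 2, 20), "fraud")}
labels = label_transactions(txs, chargebacks, today=date(2025, 7, 1))
```

In this example `t1` becomes a positive example, `t2` a negative one, and `t3` is held back until its dispute window closes.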

For merchants, the effect is visible in two places: fewer obvious fraud transactions getting through, and fewer “surprise” chargebacks weeks later. For PSPs, it means lower scheme ratios, fewer high-risk flags and far more predictable economics.

Rather than treating chargebacks as an unavoidable cost of doing business, transaction-level AI allows PSPs to treat them as a controllable outcome, one that can be materially improved by making better decisions at the exact moment each payment is attempted.

False Declines: The Silent Revenue Killer & How AI Fixes It

Most conversations about fraud focus on what merchants lose when attackers succeed. But in 2026, an equally damaging issue often sits in the background: false declines. These are legitimate customers who are blocked because their behaviour looks unusual, their device doesn’t match previous patterns, or an issuer misinterprets a risk signal. For high-risk merchants, false declines quietly erode revenue, push customers to competitors and reduce lifetime value far more than most fraud attacks ever could.

The difficulty for PSPs is that false declines rarely look dramatic. They appear in reporting as “insufficient authentication” or “issuer suspicion,” even when the customer ultimately proves genuine. What makes them particularly dangerous is timing: the customer abandons the session in frustration, and the loss is immediate and permanent. It only takes a small percentage of these outcomes to create a meaningful dent in merchant performance.

Where AI changes the trajectory

Transaction-level AI helps PSPs avoid these costly mistakes by assessing context, not just raw signals. A traditional rule engine might interpret a new device, a foreign IP address or an unusual spending pattern as red flags. AI looks at the broader picture: the customer’s past behaviour, their navigation flow during the session, the consistency of their device attributes, and whether their journey resembles genuine human activity rather than scripted behaviour.

Because the model understands nuanced patterns, it can differentiate between a genuine customer travelling abroad and a fraudster masking their identity. The result is fewer unnecessary SCA challenges, fewer precautionary declines from issuers and more approvals on the first attempt.

Another advantage is how AI strengthens the data PSPs send during authorisation. Banks respond more positively when a transaction is accompanied by device context, behavioural insight and strong identity signals. In practice, this means fewer declines marked as “high-risk” or “suspected fraud,” simply because the issuer has more confidence in the legitimacy of the transaction.

For merchants, the impact is tangible: higher conversion at checkout, fewer abandoned carts and a smoother customer experience that feels more predictable even across high-risk payment corridors.

Real-Time AI Across Multi-Rail Payments (Cards, A2A, Instant, Wallets)

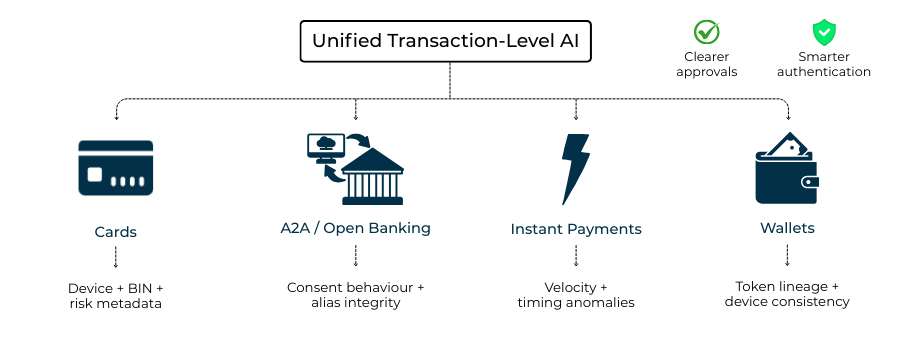

As merchants broaden their payment options, PSPs face a new challenge: fraud no longer behaves the same way across different rails. The signals that indicate a risky card-not-present transaction are not the same signals that expose instant-payment scams, and neither resembles the patterns seen inside digital wallets or recurring A2A flows.

Real-time AI gives PSPs a single intelligence layer that adapts to these differences without forcing merchants to manage separate fraud tools for each payment method.

Modern PSPs rely on this unified scoring system to maintain consistent decisioning, regardless of whether the customer pays with a saved card token, initiates an account-to-account payment, or uses a mobile-wallet credential that never exposes raw account data. This flexibility is vital in 2026, as payment stacks become more fragmented, consumer expectations rise, and fraud tactics migrate between rails at unprecedented speed.

How fraud signals differ across payment rails

Card transactions generate a rich set of historical and behavioural signals: BIN ranges, issuer tendencies, AVS outcomes, token lifecycle data and 3DS history. These help AI models understand when a card attempt deviates from a customer’s norm.

A2A payments, by contrast, shift the focus to consent patterns, VRP behaviour and bank-app authentication journeys. Instant-payment systems such as UPI or PayNow introduce their own risk markers, including unusual alias activity, rapid request patterns or abnormal device–account linkages.

Wallets add yet another layer: device tokens, biometric authentication history and in-app behavioural patterns. Transaction-level AI processes these differences simultaneously, ensuring that each rail is evaluated according to the signals that matter most.

Why unified scoring matters for PSPs

A common scoring engine prevents fragmentation, a problem many merchants face when each rail uses a separate fraud model with inconsistent logic. With unified AI, the PSP learns from every rail and applies those insights across the network. For example, behavioural anomalies detected in a wallet transaction may inform how the model interprets subsequent A2A attempts from the same device.
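
A minimal sketch of that cross-rail memory, assuming every payment event carries a device identifier regardless of rail. A shared per-device history lets an anomaly seen on one rail raise the score of the next attempt on another rail. The rail names, base risks, additive scoring and weights are hypothetical simplifications. In practice each rail would feed its own signals into a shared model.

```python
from collections import defaultdict

# Hypothetical per-rail base risk; a real system would use rail-specific signals here.
RAIL_BASE_RISK = {"card": 0.05, "wallet": 0.03, "a2a": 0.04, "instant": 0.06}


class CrossRailScorer:
    """Toy scorer whose device memory is shared by every payment rail."""

    def __init__(self, anomaly_weight: float = 0.5, carryover: float = 0.2):
        self.anomaly_weight = anomaly_weight
        self.carryover = carryover
        self.device_history = defaultdict(list)  # device_id -> [(rail, anomaly_seen)]

    def score(self, device_id: str, rail: str, rail_anomaly: bool) -> float:
        history = self.device_history[device_id]
        # Anomalies previously seen for this device on *other* rails raise the score.
        cross_rail_hits = sum(1 for r, seen in history if seen and r != rail)
        risk = (RAIL_BASE_RISK[rail]
                + (self.anomaly_weight if rail_anomaly else 0.0)
                + self.carryover * cross_rail_hits)
        history.append((rail, rail_anomaly))
        return min(risk, 1.0)


scorer = CrossRailScorer()
wallet_risk = scorer.score("dev-42", "wallet", rail_anomaly=True)   # anomaly in a wallet session
a2a_risk = scorer.score("dev-42", "a2a", rail_anomaly=False)        # same device moves to A2A
fresh_a2a_risk = CrossRailScorer().score("dev-99", "a2a", rail_anomaly=False)
```

The A2A attempt from `dev-42` scores 0.24 rather than the 0.04 a clean device receives, because the earlier wallet anomaly carries over.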

This cross-rail intelligence is particularly effective against fraud rings that test multiple payment types in quick succession. Instead of treating these attempts as unrelated events, the model views them as part of a single behavioural pattern, intervening early when needed.

Strengthening approval and authentication performance

Real-time AI not only reduces fraud but also helps PSPs optimise authentication decisions across rails. For card transactions, a strong risk score can increase frictionless 3DS success, reducing issuer friction and improving approval rates. For A2A and instant payments, AI can identify when additional verification may be required, or when a request fits the customer’s normal pattern and should proceed seamlessly.

By adapting scoring to the rail, PSPs move beyond one-size-fits-all risk management and deliver a payment experience that is both safer and smoother for customers, particularly in high-risk or cross-border corridors.

Balancing Fraud Detection and Conversion: The PSP Trade-Off Model

Every PSP faces the same dilemma: tighten controls to prevent fraud, or relax them to protect conversion. In reality, both objectives matter, and both must be managed simultaneously. Too much friction pushes genuine customers away; too little oversight invites chargebacks and scheme violations. The difficulty comes from distinguishing between real threats and unusual but legitimate behaviour, something legacy rule engines handle poorly. Transaction-level AI gives PSPs the ability to maintain this balance in real time, adjusting decisions based on the risk level of each individual transaction.

Why rigid rules create conversion damage

Rules operate on simple logic: “if X happens, then block.” This can work in narrow scenarios, but it doesn’t account for the diversity of genuine customer journeys. Travel, cross-border commerce, mobile-first behaviour and new devices all create patterns that rules often misinterpret as fraud. The result is a decline, a lost customer and a frustrated merchant. In high-growth sectors, these unnecessary declines can represent the largest source of revenue leakage.

Predictive AI avoids this mistake by scoring behaviour holistically. Instead of reacting to one isolated signal, such as a new IP address or a foreign BIN, it considers the broader context and determines whether the transaction still fits a genuine pattern. This can reduce false positives without a corresponding increase in fraud exposure.

Dynamic risk scoring at the moment of checkout

Where rules treat every deviation as a threat, AI models distinguish between low-risk anomalies and high-risk signals that justify additional security. PSPs use these scores to guide authentication and routing choices. A low-risk transaction can flow through frictionless SCA, while a borderline case may require step-up verification. High-risk attempts can be blocked or diverted before reaching the issuer.
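
A minimal sketch of that score-to-action mapping, assuming the model emits a probability-like score between 0 and 1. The two thresholds are hypothetical placeholders. In practice they would be tuned per merchant, corridor and rail, and any exemption request must respect the applicable SCA rules.

```python
def checkout_action(score: float, low: float = 0.2, high: float = 0.7) -> str:
    """Map a 0-1 risk score to an authentication or routing decision (hypothetical bands)."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score < low:
        return "frictionless"  # e.g. frictionless 3DS, or an SCA exemption where one applies
    if score < high:
        return "step_up"       # e.g. 3DS challenge or in-app bank confirmation
    return "block"             # decline or divert before the attempt reaches the issuer


decisions = [checkout_action(s) for s in (0.05, 0.4, 0.92)]
```

The three sample scores map to `["frictionless", "step_up", "block"]`.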

This dynamic scoring helps PSPs avoid two damaging extremes: over-authentication, which frustrates customers, and under-authentication, which leads to issuer declines or chargebacks. The model adapts continuously, learning from corridor-specific tendencies, SCA outcomes and historical dispute data.
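
A minimal sketch of the trade-off, assuming a labelled validation set of (score, is_fraud, amount) tuples and two hypothetical cost parameters. An approved fraud costs its full amount plus a fixed chargeback fee, and a declined genuine payment costs a fraction of its amount as lost margin and goodwill. The sketch sweeps candidate block thresholds and keeps the one with the lowest total cost.

```python
def expected_cost(rows, threshold, chargeback_fee=15.0, false_decline_loss=0.3):
    """Total cost of blocking every transaction whose score is >= threshold."""
    cost = 0.0
    for score, is_fraud, amount in rows:
        blocked = score >= threshold
        if is_fraud and not blocked:
            cost += amount + chargeback_fee        # fraud slipped through
        elif not is_fraud and blocked:
            cost += false_decline_loss * amount    # genuine customer turned away
    return cost


def best_threshold(rows, candidates):
    return min(candidates, key=lambda t: expected_cost(rows, t))


# Hypothetical validation data: (model score, confirmed fraud?, amount)
rows = [(0.05, False, 80.0), (0.15, False, 120.0), (0.35, False, 60.0),
        (0.40, True, 50.0), (0.55, False, 200.0), (0.80, True, 300.0),
        (0.95, True, 150.0)]
chosen = best_threshold(rows, [0.3, 0.5, 0.7, 0.9])
```

On this toy data the cheapest threshold is 0.7 (cost 65). Blocking at 0.3 stops every fraud but costs 78 in lost genuine sales, showing that the strictest setting is not automatically the safest commercially.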

When conversion and security reinforce each other

With transaction-level AI, better fraud detection often leads directly to better conversion. Issuers approve more consistently when they receive richer signals: device fingerprints, behavioural context, token lifecycle data and risk-aligned metadata. This reduces “soft declines” and improves authorisation performance across domestic and cross-border corridors.

For merchants, the experience translates into fewer abandoned sessions, fewer surprise declines and a more predictable payment journey. For PSPs, it restores the balance between risk and revenue, a balance that static rules simply cannot achieve.

Regulatory Requirements Shaping PSP Fraud Models in 2026

Regulators worldwide are tightening expectations around fraud detection, transparency and risk-based authentication. For PSPs, this means transaction-level AI is no longer just a competitive advantage; it is becoming a compliance expectation. The regulatory direction is consistent across Europe, the UK and several APAC markets: PSPs must demonstrate proactive monitoring, explainable decision-making and stronger safeguards against evolving scam patterns.

The EU’s PSD3 and PSR push for proactive fraud intelligence

The European Union’s upcoming PSD3 and Payment Services Regulation (PSR) reshape the obligations placed on PSPs. Both frameworks emphasise early detection of suspicious activity, robust monitoring of behavioural patterns and tighter alignment between PSPs and issuers on fraud-data sharing.

A key shift is the expectation that PSPs adopt risk-based authentication, with SCA applied when needed rather than by default. Transaction-level AI supports this by identifying when a payment is low-risk and can safely pass frictionlessly, and when additional checks are justified. PSPs that depend purely on rule-driven systems will struggle to meet this new standard.

The FCA’s stance on explainable AI and scam prevention

In the UK, the Financial Conduct Authority (FCA) continues to push PSPs towards greater accountability in automated decisions.

The FCA expects financial institutions to demonstrate that AI-driven decisions are transparent, explainable and auditable. This is particularly relevant to APP scam prevention, where PSPs must show they applied reasonable detection measures at the point of payment. Transaction-level AI supports these expectations by offering a structured risk assessment for each transaction, backed by traceable logic rather than opaque rule combinations.

APAC regulators and real-time fraud monitoring mandates

In Asia, regulators have already moved towards real-time behavioural scoring. India’s RBI maintains strict oversight of UPI, mandating continuous monitoring and anomaly detection for participating banks and PSPs.

The Monetary Authority of Singapore (MAS) has introduced strong safeguards across PayNow and digital banking, emphasising proactive scam detection and immediate response protocols.

These frameworks reinforce a consistent message: real-time fraud intelligence must be baked into the payment flow, not added as a separate layer.

Why compliance and AI are converging

Across these jurisdictions, the direction is clear. Regulators want PSPs to use technology capable of analysing behaviour, predicting anomalies and responding instantly. Transaction-level AI fits directly into this model. It provides faster, more accurate detection, reduces unnecessary friction and offers decision trails that satisfy supervisory expectations. In 2026, PSPs that cannot demonstrate this level of intelligence face a higher risk of regulatory pressure, scheme scrutiny or operational loss.

Regulatory Requirements Shaping PSP Fraud Models in 2026

Regulators worldwide are tightening expectations around fraud detection, transparency and risk-based authentication. For PSPs, this means transaction-level AI is no longer a competitive advantage it is becoming a compliance expectation. The regulatory direction is consistent across Europe, the UK and several APAC markets: PSPs must demonstrate proactive monitoring, explainable decision-making and stronger safeguards against evolving scam patterns.

The EU’s PSD3 and PSR push for proactive fraud intelligence

The European Union’s upcoming PSD3 and Payment Services Regulation (PSR) reshape the obligations placed on PSPs. Both frameworks emphasise early detection of suspicious activity, robust monitoring of behavioural patterns and tighter alignment between PSPs and issuers on fraud-data sharing.

A key shift is the expectation that PSPs adopt risk-based authentication, with SCA applied when needed rather than by default. Transaction-level AI supports this by identifying when a payment is low-risk and can safely pass frictionlessly, and when additional checks are justified. PSPs that depend purely on rule-driven systems will struggle to meet this new standard.

The FCA’s stance on explainable AI and scam prevention

In the UK, the Financial Conduct Authority (FCA) continues to push PSPs towards greater accountability in automated decisions.

The FCA expects financial institutions to demonstrate that AI-driven decisions are transparent, explainable and auditable. This is particularly relevant to APP scam prevention, where PSPs must show they applied reasonable detection measures at the point of payment. Transaction-level AI supports these expectations by offering a structured risk assessment for each transaction, backed by traceable logic rather than opaque rule combinations.

APAC regulators and real-time fraud monitoring mandates

In Asia, regulators have already moved towards real-time behavioural scoring. India’s RBI maintains strict oversight of UPI, mandating continuous monitoring and anomaly detection for participating banks and PSPs.

The Monetary Authority of Singapore (MAS) has introduced strong safeguards across PayNow and digital banking, emphasising proactive scam detection and immediate response protocols.

These frameworks reinforce a consistent message: real-time fraud intelligence must be baked into the payment flow, not added as a separate layer.

Why compliance and AI are converging

Across these jurisdictions, the direction is clear. Regulators want PSPs to use technology capable of analysing behaviour, predicting anomalies and responding instantly. Transaction-level AI fits directly into this model. It provides faster, more accurate detection, reduces unnecessary friction and offers decision trails that satisfy supervisory expectations. As 2026 approaches, PSPs that cannot demonstrate this level of intelligence face a higher risk of regulatory pressure, scheme scrutiny or operational loss.

Case Studies: How Different Industries Apply Transaction-Level AI

Fraud behaves differently across industries. A subscription platform does not face the same risks as an FX broker, and a marketplace cannot rely on the same signals used by an iGaming operator. Transaction-level AI works precisely because it adapts to each environment, learning the patterns that matter for every merchant type and every payment rail. The examples below illustrate how PSPs apply predictive models in real, diverse scenarios.

Case Study A: European FX Platform (Device Switching & High-Velocity Behaviour)

A European FX brokerage experienced recurring fraud attempts involving rapid device changes and repeated low-value test deposits. Traditional rules flagged some of the activity but failed to identify the deeper pattern.

Transaction-level AI made the difference by linking related devices, spotting abnormal speed between actions and identifying the behavioural rhythm typical of automated scripts. The model highlighted risky journeys early, enabling the PSP to block suspicious deposits before they escalated into larger unauthorised trades. For a business where identity stability is critical, this shift brought greater control over a risk pattern that had previously been difficult to manage.
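The device-switching and test-deposit signals described above can be approximated with a per-account sliding window. This is a simplified sketch, not the PSP's actual model: the window length and thresholds are hypothetical, and the script-rhythm analysis mentioned in the case study is left out.

```python
from collections import deque

# Hypothetical thresholds chosen for illustration only.
WINDOW_SECONDS = 600       # look-back window (10 minutes)
MAX_DEVICES = 3            # distinct devices tolerated per window
MAX_SMALL_DEPOSITS = 4     # low-value "test" deposits tolerated per window
SMALL_DEPOSIT_EUR = 5.0


class VelocityMonitor:
    """Tracks one account's recent deposits in a sliding time window."""

    def __init__(self):
        self.events = deque()  # (timestamp, device_id, amount)

    def observe(self, ts: float, device_id: str, amount: float) -> list:
        self.events.append((ts, device_id, amount))
        # Drop events that have fallen out of the look-back window.
        while self.events and ts - self.events[0][0] > WINDOW_SECONDS:
            self.events.popleft()
        flags = []
        if len({device for _, device, _ in self.events}) > MAX_DEVICES:
            flags.append("device_switching")
        small = sum(1 for _, _, amt in self.events if amt <= SMALL_DEPOSIT_EUR)
        if small > MAX_SMALL_DEPOSITS:
            flags.append("test_deposits")
        return flags
```

In practice flags like these would be features fed into the model rather than hard blocks.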

Case Study B: Global Marketplace (Graph Intelligence for Buyer-Seller Networks)

Marketplaces face a unique challenge: threats arise not only from buyers but from sellers who attempt to manipulate ratings, listings or payouts. In this case, a large multi-category marketplace partnered with a PSP to investigate a cluster of disputes linked to a small subset of sellers.

Predictive AI played a central role by using graph modelling to map the hidden relationships between accounts, devices and purchase patterns. The network revealed coordinated activity across multiple regions, something that rule engines had never detected. Once identified, the PSP and marketplace applied targeted interventions without disrupting genuine activity, demonstrating how AI can uncover behaviour that exists beneath surface-level transactions.
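Graph modelling often starts with something as simple as linking accounts that share a device or payment instrument. The sketch below, a union-find over hypothetical `(account_id, shared_attribute)` pairs, groups sellers into connected clusters; it is a much simpler stand-in for the marketplace's actual graph model.

```python
from collections import defaultdict


def _find(parent: dict, node):
    """Find the cluster root, halving the path as we go."""
    while parent[node] != node:
        parent[node] = parent[parent[node]]
        node = parent[node]
    return node


def link_clusters(edges) -> list:
    """edges: iterable of (account_id, shared_attribute), e.g. a device ID or card hash.
    Returns a list of sets of account IDs that are connected through shared attributes."""
    parent = {}
    for account, attr in edges:
        # Tag node types so an account ID can never collide with an attribute ID.
        a, b = ("acct", account), ("attr", attr)
        parent.setdefault(a, a)
        parent.setdefault(b, b)
        root_a, root_b = _find(parent, a), _find(parent, b)
        if root_a != root_b:
            parent[root_a] = root_b
    clusters = defaultdict(set)
    for node in parent:
        if node[0] == "acct":
            clusters[_find(parent, node)].add(node[1])
    return list(clusters.values())
```

Clusters that are unusually large, or that span regions with no obvious business reason, would be the candidates for the kind of targeted review the case study describes.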

Case Study C: European iGaming Operator (First-Party Misuse Patterns)

An iGaming operator in Europe struggled with disputes over legitimate purchases that customers later claimed were unauthorised. Because customer journeys in gaming tend to be fast and mobile-heavy, static rules were unable to distinguish genuine players from deliberate misuse.

The PSP deployed behavioural models that analysed session flow, in-game navigation and device consistency. The result was not a blanket tightening of controls but a more accurate distinction between legitimate but unusual behaviour and risky journeys associated with misuse. The operator gained greater stability in dispute outcomes and a clearer understanding of players’ behavioural signals.

Case Study D: Subscription Platform (Reducing False Declines)

A subscription video service confronted a different issue entirely: high levels of false declines when customers updated cards or switched devices. These declines were not fraudulent but misinterpreted signals, creating unnecessary churn.

By analysing the continuity of user behaviour, device fingerprints and historical metadata, transaction-level AI provided issuers with more confidence during authorisation. Instead of applying blunt rules, the PSP delivered richer signals that enabled frictionless approvals even when the payment context changed. The subscription platform saw more predictable billing cycles and fewer involuntary cancellations.

Case Study E: LATAM PSP (Instant-Payment Fraud Patterns Across Alias Systems)

A LATAM PSP processing instant-payment traffic faced distinctive challenges: alias-based identity, mobile-first journeys and highly dynamic velocity patterns. Fraud attempts were not large in value but frequent, unpredictable and difficult to track with rules.

The PSP introduced predictive models trained on device lineage, alias reuse, timing patterns and behavioural consistency. The AI recognised subtle signs of unsafe alias transitions or suspicious device-swapping patterns that rule engines failed to catch. Instead of tightening controls for everyone, the PSP applied precise risk responses that preserved conversion while reducing exposure on high-risk transactions in real time.

Where AI Lives Inside the PSP Stack (Cascading Risk Rules + Orchestration Integration)

PSPs increasingly view fraud intelligence as part of the core transaction flow, not an external add-on. As a result, AI models now sit inside the payment stack itself, influencing decisions before authentication, before routing and even before the transaction is submitted to the issuer or bank. This is a major shift from older architectures, where fraud checks operated after authorisation or as an isolated, rule-based service. In 2026, the entire payment lifecycle is shaped by real-time scoring, from checkout all the way to the issuer response.

AI at the pre-authorisation stage: the earliest intervention point

The first layer of intelligence appears the moment a customer moves through the checkout journey. AI models analyse device characteristics, behavioural signals, session flow and historical patterns to determine whether the attempt resembles genuine activity. This happens before 3DS, before SCA and before any call to an acquirer or bank.

At this stage, PSPs often combine AI with a curated rule layer known as cascading risk rules. These rules interpret the AI score and apply the appropriate action: allow, challenge, step up, hold or block. The cascading structure prevents over-reliance on any single signal, making decisions more consistent and easier to audit.
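A cascading rule layer can be sketched as an ordered list in which the first matching rule decides the action. The thresholds, field names and rule names below are hypothetical; returning the rule name alongside the action is what keeps each decision auditable.

```python
from typing import NamedTuple


class Context(NamedTuple):
    score: float          # hypothetical model risk score in [0, 1]
    amount: float
    on_blocklist: bool
    new_device: bool


# Ordered rules (hypothetical): the first predicate that matches decides the action.
RULES = [
    ("blocklist", lambda c: c.on_blocklist, "block"),
    ("very_high_score", lambda c: c.score >= 0.9, "block"),
    ("high_score_large_amount", lambda c: c.score >= 0.7 and c.amount > 1000, "hold"),
    ("high_score", lambda c: c.score >= 0.7, "step_up"),
    ("medium_score_new_device", lambda c: c.score >= 0.4 and c.new_device, "challenge"),
]


def decide(ctx: Context) -> tuple:
    """Return (action, rule_name) so every decision can be traced to one rule."""
    for name, predicate, action in RULES:
        if predicate(ctx):
            return action, name
    return "allow", "default_allow"
```

Because the AI score is only one input among several predicates, no single signal can force an outcome on its own, which mirrors the over-reliance point above.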

How orchestration engines interpret AI scores

Once the model produces a risk score, the orchestration engine takes over. Its role is not only to route transactions for the best performance but also to make routing decisions that reflect the risk level of each attempt. For low-risk transactions, the engine may choose a frictionless 3DS pathway or a preferred acquirer corridor. For borderline attempts, it may trigger SCA or route to an acquirer that historically handles this risk profile more favourably.
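A simplified version of that risk-aware routing step might look like the sketch below. The score bands, acquirer names and `threeds_mode` values are hypothetical, and a real orchestration engine would typically also weigh cost, BIN and scheme data.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Route:
    acquirer: str
    threeds_mode: str  # hypothetical labels: "frictionless_request" or "challenge"


def route_for(score: float, preferred: str, risk_tolerant: str) -> Optional[Route]:
    """Map a hypothetical risk score in [0, 1] to an acquirer and 3DS mode."""
    if score < 0.3:
        # Low risk: preferred acquirer corridor with a frictionless 3DS request.
        return Route(preferred, "frictionless_request")
    if score < 0.7:
        # Borderline: trigger SCA via the acquirer that historically performs
        # better for this risk profile.
        return Route(risk_tolerant, "challenge")
    # High risk: not routed; the rule layer is expected to decline or hold it.
    return None
```

For simplicity the borderline band both switches acquirer and requests a challenge; an engine could equally apply only one of the two.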

For A2A and instant-payment flows, orchestration follows similar logic, using the AI score to determine whether additional verification is required before sending the request to the customer’s bank. The result is a payment journey tuned to both performance and safety, something static routing cannot achieve.

Real-time feedback loops that improve both fraud and conversion

An important advantage of embedding AI into the PSP stack is the feedback process. Outcomes from authentication, issuer responses and customer disputes feed back into the AI model, improving its judgment over time. The orchestration engine benefits from this too; it learns which routes are more approval-friendly for specific risk profiles, which acquirers handle certain BIN ranges better and how different authentication strategies influence acceptance across regions.
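The route-learning half of that loop can be sketched as running approval counts per risk band and route, updated from issuer responses. The smoothing prior, band labels and route names are assumptions made for illustration.

```python
from collections import defaultdict


class RouteStats:
    """Running approval counts per (risk_band, route), fed by issuer responses."""

    def __init__(self, prior_approvals: float = 1.0, prior_attempts: float = 2.0):
        # Additive smoothing: a route with no data starts at a 50% estimate.
        self.prior_a = prior_approvals
        self.prior_n = prior_attempts
        self.approved = defaultdict(float)
        self.attempts = defaultdict(float)

    def record(self, band: str, route: str, approved: bool) -> None:
        self.attempts[(band, route)] += 1
        if approved:
            self.approved[(band, route)] += 1

    def rate(self, band: str, route: str) -> float:
        key = (band, route)
        return (self.approved[key] + self.prior_a) / (self.attempts[key] + self.prior_n)

    def best_route(self, band: str, routes: list) -> str:
        # Greedy choice: highest smoothed approval rate; ties keep list order.
        return max(routes, key=lambda r: self.rate(band, r))
```

Always picking the current best route never re-tests the alternatives, so production systems usually reserve some traffic for exploration, for example with a multi-armed bandit strategy.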

This integrated loop creates a system where fraud detection and conversion optimisation inform each other continuously. Instead of relying on reactive updates, the payment stack evolves automatically based on new patterns and merchant outcomes, giving PSPs a level of intelligence that legacy rule-driven platforms cannot match.

The Merchant KPIs Most Impacted by Transaction-Level AI

When PSPs shift from legacy rules to transaction-level AI, the impact isn’t limited to fraud ratios or dispute numbers. The entire performance profile of a merchant begins to change. Approval rates stabilise, SCA friction reduces, false declines drop and corridor-level acceptance becomes more predictable. These improvements translate directly into better revenue, smoother customer journeys and healthier acquirer relationships. AI helps merchants move away from reactive firefighting and toward a more consistent and resilient operating model.

Chargeback and dispute ratios

Chargebacks are one of the first metrics to improve. Because AI intervenes at the authorisation stage, blocking risky transactions long before they escalate into disputes, merchants experience fewer downstream losses. The reduction isn’t driven by “stricter rules” but by better targeting, enabling PSPs to focus controls on genuinely suspicious journeys rather than casting wide nets that harm conversion.

False-decline rate and issuer alignment

A major commercial benefit comes from fewer false positives. Transaction-level AI gives issuers richer, more reliable signals: device attributes, behavioural continuity and identity history tied to network or device tokens. These signals help issuers build confidence in the transaction, resulting in fewer “soft declines” and fewer customers being turned away unnecessarily. For merchants, this often creates a visible lift in approval stability, particularly across high-risk BIN ranges and cross-border corridors.

SCA frictionless success and authentication outcomes

AI’s predictive scoring directly supports risk-based SCA under PSD2 and PSD3. If the model determines that a transaction poses low risk, the PSP can request frictionless authentication, improving the customer experience without compromising safety.

Better authentication outcomes lead to three visible improvements:

- A higher proportion of frictionless 3DS approvals

- Fewer step-ups that frustrate genuine customers

- Stronger issuer trust in the PSP’s risk signals

These gains are ultimately reflected in higher end-to-end conversion.
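The authentication KPIs above reduce to simple ratios over 3DS outcomes. The outcome labels in this sketch are hypothetical, and it treats every abandonment as a challenged attempt, which is a simplifying assumption.

```python
from collections import Counter


def authentication_kpis(outcomes: list) -> dict:
    """outcomes: hypothetical 3DS result labels, each one of
    'frictionless', 'challenge_passed', 'challenge_failed' or 'abandoned'."""
    counts = Counter(outcomes)
    total = len(outcomes)
    if total == 0:
        return {}
    # Simplification: abandonments are assumed to happen during a challenge.
    challenged = counts["challenge_passed"] + counts["challenge_failed"] + counts["abandoned"]
    return {
        "frictionless_rate": counts["frictionless"] / total,
        "step_up_rate": challenged / total,
        "authenticated_rate": (counts["frictionless"] + counts["challenge_passed"]) / total,
    }
```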

Corridor performance and approval consistency

Fraud patterns vary widely by geography. Transaction-level AI helps merchants understand how risk behaves across specific corridors and BIN clusters. By analysing approval volatility and behavioural anomalies, PSPs can adjust routing, SCA strategies and issuer metadata to stabilise acceptance in historically challenging regions. Over time, merchants see fewer unexpected drops in performance, a critical advantage for those operating in high-risk or multi-market environments.
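One way to quantify the approval volatility mentioned above is the spread of daily approval rates per corridor. The sketch uses the population standard deviation from Python's `statistics` module and a hypothetical threshold of 5 percentage points; the corridor labels are illustrative.

```python
from statistics import mean, pstdev


def corridor_volatility(daily_rates: dict) -> dict:
    """daily_rates maps corridor -> list of daily approval rates (0..1).
    Returns corridor -> (mean rate, population standard deviation)."""
    return {c: (mean(r), pstdev(r)) for c, r in daily_rates.items() if r}


def unstable_corridors(daily_rates: dict, max_std: float = 0.05) -> list:
    """Corridors whose daily approval rate swings more than max_std (hypothetical)."""
    stats = corridor_volatility(daily_rates)
    return sorted(c for c, (_, sd) in stats.items() if sd > max_std)
```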

Cost per successful transaction

Every unnecessary decline, manual review or dispute adds cost to the transaction lifecycle. AI reduces these penalties by improving the quality of traffic sent to issuers and banks, cutting avoidable friction and reducing operational overhead. The result is a leaner, more efficient payments stack, where more transactions convert successfully on the first attempt with fewer manual interventions.
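As a worked example, cost per successful transaction can be expressed as total lifecycle cost divided by the number of successful transactions. Which cost components to include is an assumption in this sketch: per-attempt fees, manual reviews and disputes.

```python
from dataclasses import dataclass


@dataclass
class PeriodCosts:
    attempts: int
    successful: int
    fee_per_attempt: float     # processing/gateway fee charged on every attempt
    manual_reviews: int
    cost_per_review: float
    disputes: int
    cost_per_dispute: float    # handling fees plus losses, simplified to one figure


def cost_per_successful_transaction(c: PeriodCosts) -> float:
    if c.successful == 0:
        raise ValueError("no successful transactions in the period")
    total = (c.attempts * c.fee_per_attempt
             + c.manual_reviews * c.cost_per_review
             + c.disputes * c.cost_per_dispute)
    return total / c.successful
```

With hypothetical figures of 1,000 attempts at €0.10, 50 reviews at €2 and 5 disputes at €20, the total is €300 over 900 successes, about €0.33 per successful transaction. Fewer retries, reviews and disputes shrink the numerator, while fewer false declines grow the denominator.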

A Practical Roadmap for PSPs Deploying Transaction-Level AI

Shifting from rule-heavy fraud engines to predictive, transaction-level AI is not a simple software upgrade. It requires PSPs to rethink the structure of their payment stack, the quality of data they collect and the way fraud and authentication decisions interact. For many providers, the transition is gradual: a series of controlled steps that allow machine learning models to evolve safely without destabilising approval rates. What matters is having a roadmap that blends technical readiness with operational maturity.

Strengthening the data foundation

Every AI model is only as effective as the data it learns from. PSPs typically begin by consolidating customer journey signals, device fingerprints, authentication outcomes and issuer responses into a unified, structured dataset. This is often the longest stage because many PSPs still operate on legacy or fragmented data architectures. Once the data is centralised, the model can learn from patterns across merchants, corridors and payment rails, producing scores that reflect the full context of each transaction.
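The consolidation step can be pictured as merging per-source records into one labelled row per transaction. The field names (`transaction_id`, `device_id`, `session_seconds`, `issuer_response`) are hypothetical, and a production pipeline would run on a data warehouse or stream processor rather than in memory.

```python
from collections import defaultdict


def build_training_rows(checkout_events, auth_results, disputes) -> dict:
    """Merge per-source records into one row per transaction_id.

    checkout_events: dicts with transaction_id, device_id, session_seconds
    auth_results:    dicts with transaction_id, issuer_response
    disputes:        iterable of transaction_ids that were later disputed
    """
    rows = defaultdict(dict)
    for e in checkout_events:
        rows[e["transaction_id"]].update(
            device_id=e["device_id"], session_seconds=e["session_seconds"]
        )
    for a in auth_results:
        rows[a["transaction_id"]]["issuer_response"] = a["issuer_response"]
    disputed = set(disputes)
    # The dispute outcome becomes the training label for each transaction.
    for tx_id, row in rows.items():
        row["label_disputed"] = tx_id in disputed
    return dict(rows)
```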

Introducing models in controlled stages

The first deployment phase usually targets pre-authorisation scoring. PSPs introduce AI models alongside existing rules so they can compare outcomes and build trust in the model’s predictions. Over time, the AI layer takes on more responsibility, informing SCA decisions, routing logic and risk thresholds. The shift is incremental, allowing teams to spot drift, monitor anomalies and adjust inputs before moving to real-time, full-scale usage.
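Running the model alongside existing rules (often called shadow mode) amounts to logging its decision next to the rule engine's and comparing both against later outcomes. The sketch below assumes a hypothetical 0.5 decision threshold and records shaped as `(rule_declined, model_score, was_fraud)`.

```python
from collections import Counter


def shadow_comparison(records, threshold: float = 0.5) -> Counter:
    """records: iterable of (rule_declined, model_score, was_fraud).
    The model's decision is only logged, never enforced."""
    counts = Counter()
    for rule_declined, score, was_fraud in records:
        model_declined = score >= threshold
        for name, declined in (("rules", rule_declined), ("model", model_declined)):
            if declined and was_fraud:
                counts[(name, "caught_fraud")] += 1
            elif declined and not was_fraud:
                counts[(name, "false_decline")] += 1
            elif not declined and was_fraud:
                counts[(name, "missed_fraud")] += 1
        if rule_declined != model_declined:
            counts["disagreements"] += 1
    return counts
```

One caveat: transactions the rules declined rarely receive a true outcome label, so in practice these counts are biased and are often complemented by a small, controlled holdout.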

This approach ensures that performance does not degrade during the transition. Instead, PSPs gradually replace static rules with predictive scoring, backed by continuous validation.

Building explainability and compliance into the workflow

Regulators increasingly expect PSPs to justify automated decisions, particularly when they lead to declines or stepped-up authentication. For this reason, transparency is effectively mandatory. PSPs embed explainability frameworks that show how the model reached its conclusion, highlighting key signals without exposing sensitive algorithms.
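For a linear model such as logistic regression, reason codes fall out directly: each feature's contribution to the score is its weight times its value. The weights and feature names below are hypothetical; for non-linear models, PSPs typically rely on attribution methods such as SHAP values instead.

```python
import math

# Hypothetical weights of a logistic-regression risk model over prepared features.
WEIGHTS = {
    "new_device": 1.2,
    "session_seconds_z": -0.4,      # standardised session length; short sessions add risk
    "ip_country_mismatch": 0.9,
    "linked_to_fraud_cluster": 2.1,
}
BIAS = -3.0


def score_with_reasons(features: dict, top_k: int = 2):
    """Return (probability, reason codes): the features that pushed risk up most.
    Only feature names are surfaced, not the weights themselves."""
    contributions = {name: w * features.get(name, 0.0) for name, w in WEIGHTS.items()}
    logit = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))
    risky = [n for n, v in contributions.items() if v > 0]
    reasons = sorted(risky, key=lambda n: contributions[n], reverse=True)
    return probability, reasons[:top_k]
```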

This helps PSPs meet supervisory expectations under PSD3, the FCA’s guidelines and similar frameworks across APAC. It also gives merchants greater confidence that the system is acting fairly and consistently across their customer base.

Continuous optimisation through feedback loops

Once the system is live, outcomes from issuers, 3DS flows and merchant disputes feed directly into the model’s learning process. This continuous feedback loop allows the AI to adjust as fraud patterns shift, customer behaviour evolves or new payment rails emerge. Over time, the PSP’s fraud engine becomes more precise, its routing more efficient and its authentication decisions more aligned with issuer expectations.

This combination of strong data foundations, gradual rollout, explainability and feedback forms the backbone of a sustainable deployment strategy. PSPs that follow this roadmap gain the benefits of AI without disruption, setting the stage for more advanced models in the years ahead.

The Future: Federated AI Models Across PSPs, Schemes and Acquirers

As PSPs refine their transaction-level AI, the next frontier isn’t simply better modelling; it’s collaboration. Fraud patterns are now global, shared across multiple providers, issuers and networks. A fraud ring that probes one PSP on Monday may attack an acquirer in another region on Tuesday. That reality is pushing the industry toward a more collective approach, where intelligence moves beyond individual institutions and into shared, privacy-safe ecosystems.

The concept gaining traction is federated AI: systems that allow different organisations to benefit from shared learning without ever exposing raw customer data. Instead of centralising sensitive information, each participant trains a model locally. The models then contribute to a global network that learns from aggregated patterns while maintaining regulatory and data-protection boundaries.
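The best-known form of this idea is federated averaging (FedAvg): each participant runs a few training steps on its own data, and a coordinator averages the resulting weights, weighted by sample count. The sketch below applies it to a tiny logistic-regression model; the participants, features and learning rate are hypothetical.

```python
import math


def local_update(weights, data, lr: float = 0.1, epochs: int = 20) -> list:
    """One participant's training pass: logistic-regression SGD on private data.
    data: list of (feature_vector, label). Only the updated weights leave the PSP."""
    w = list(weights)
    for _ in range(epochs):
        for x, y in data:
            z = sum(wi * xi for wi, xi in zip(w, x))
            p = 1.0 / (1.0 + math.exp(-z))
            w = [wi - lr * (p - y) * xi for wi, xi in zip(w, x)]
    return w


def federated_average(updates) -> list:
    """updates: list of (weights, n_samples). Sample-weighted mean, as in FedAvg."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]


def federated_round(global_w, participants) -> list:
    """Each participant trains locally from the shared weights; raw data never moves."""
    updates = [(local_update(global_w, data), len(data)) for data in participants]
    return federated_average(updates)
```

Real deployments typically add secure aggregation or differential privacy, because raw weight updates can still leak information about the underlying data.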

Why federated intelligence is becoming inevitable

Fraud evolves faster than any single PSP can monitor alone. Federated models create a broader view, capturing insights from multiple geographies, payment rails and merchant types. When an attacker tests stolen credentials on one platform, the signal can inform risk decisions across the wider network. The advantage is two-fold: PSPs gain protection from threats they have never seen before, and acquirers benefit from richer, cross-rail intelligence that improves approval consistency.

This model also aligns with regulator expectations around collaboration. Under PSD3 and emerging frameworks across APAC, there is increasing emphasis on information-sharing, structured reporting and earlier detection of coordinated attacks. Federated AI supports this direction without compromising the strict privacy standards imposed by data-protection law.

The most compelling benefit is scale. Once these models mature, fraud detection becomes less about reacting to isolated incidents and more about anticipating patterns that are forming across the global payments landscape. For PSPs, this represents a shift from local defence to collective intelligence, an evolution that could define the next decade of fraud prevention.

Conclusion

Fraud prevention is no longer about looking for obvious red flags or reacting to chargebacks after the damage is done. In 2026, PSPs operate in an environment where fraud patterns shift quickly, customers move between payment rails without friction and regulators expect institutions to prove they are taking proactive, data-driven measures to protect consumers. Transaction-level AI sits at the centre of this evolution.

By analysing each payment attempt in context, rather than relying on fixed rules or historical assumptions, PSPs can detect subtle risks early, support frictionless authentication and reduce both fraud losses and false declines. The benefits extend beyond compliance. Merchants gain more predictable performance, issuers receive richer and more trustworthy signals, and customers encounter smoother payment journeys across cards, A2A, instant payments and wallets.

The most significant shift is strategic: fraud management is no longer an isolated function but a core part of how PSPs design and operate their payment stack. With AI informing routing, authentication, scoring and orchestration outcomes, the entire transaction lifecycle becomes more intelligent and adaptive. As federated models emerge and collaborative intelligence grows across networks, PSPs will move into an era where fraud can be anticipated rather than merely contained.

Transaction-level AI is not simply a new tool; it is the infrastructure supporting the next generation of global payment security.

FAQs

1. What is transaction-level AI in payments?

Transaction-level AI is a fraud-detection approach where each payment is analysed individually in real time, using behavioural, device and session signals to predict risk before authorisation. Unlike rules, which react to fixed conditions, AI adapts to changing fraud patterns and identifies subtle anomalies. PSPs use transaction-level AI to reduce chargebacks, limit false declines and provide issuers with higher-quality authentication signals, particularly across multi-rail payment environments.

2. How does AI help PSPs reduce chargebacks?

AI reduces chargebacks by identifying high-risk journeys before the authorisation request is sent. It highlights behaviours that historically correlate with disputes: sudden device changes, abnormal session flows or links to known fraud clusters. By blocking or challenging these transactions early, PSPs prevent the downstream disputes that drive chargeback ratios. This shift from post-dispute management to pre-authorisation prevention has become essential for high-risk sectors.

3. Why are false declines such a major issue for merchants?

False declines occur when genuine customers are incorrectly flagged as suspicious. They often happen because rule engines lack context: a new device, foreign travel or a pattern change may appear risky even when legitimate. Transaction-level AI reduces false positives by analysing behaviour holistically and providing issuers with richer identity and device signals. Fewer false declines mean higher approval rates, fewer abandoned carts and improved customer retention.

4. What types of fraud models do PSPs use?

PSPs use several model types together. Behavioural models analyse how a user interacts with the checkout. Device-intelligence models look at hardware and software signals. Velocity and sequence models track the pace and order of actions. Anomaly-detection models identify deviations from normal behaviour. Graph-analysis models map links across devices and accounts. This layered approach ensures that PSPs catch sophisticated fraud while avoiding over-blocking genuine traffic.

5. How does AI improve SCA and 3DS outcomes?

AI helps PSPs determine when a transaction should proceed frictionlessly and when SCA is truly necessary. Low-risk transactions receive frictionless 3DS requests supported by stronger metadata, giving issuers more confidence to approve. High-risk transactions trigger step-up authentication before reaching the issuer. This ensures authentication effort is applied only where needed, improving both conversion and regulatory alignment under PSD2 and PSD3.

6. Can transaction-level AI be used for A2A and instant payments?

Yes. Fraud patterns in A2A and instant payments differ from cards, but the same principles apply. AI assesses consent behaviour, alias transitions, device consistency and timing patterns to identify abnormal activity. In instant-payment systems (such as UPI or PayNow), where settlement is immediate, real-time scoring is essential to prevent scams and account misuse. PSPs increasingly rely on unified models that score all rails consistently.

7. Is AI fraud detection required by regulators?

Regulators do not mandate specific technologies, but they expect PSPs to implement proactive, risk-based systems. Under PSD3/PSR in the EU, institutions must strengthen real-time monitoring and fraud-data sharing. The FCA emphasises explainable decisions and scam-prevention controls. In APAC, the RBI and MAS require continuous behavioural monitoring for instant payment systems. AI isn’t mandatory, but it is quickly becoming the most practical way to meet these expectations.

8. Can AI models explain why a transaction was declined?

Modern AI fraud engines incorporate explainability frameworks that highlight the key risk indicators behind each decision. This is important for PSP compliance because regulators expect declines and step-ups to be justifiable. While the underlying model remains complex, PSPs can surface clear reasons, such as unusual device signatures, risky behavioural sequences or links to fraud clusters, without exposing model internals or sensitive data.

9. How does AI integrate with payment orchestration?

AI and orchestration now work together. The model scores each transaction, and the orchestration engine interprets the score to choose the best authentication pathway or routing corridor. Low-risk traffic may follow a frictionless SCA route, while borderline cases may use different acquirers or require step-up verification. This coordination improves both approval rates and fraud prevention, especially across multi-rail environments.

10. Can AI help detect first-party misuse?

Yes. First-party misuse is difficult to identify because the behaviour resembles that of genuine customers until a dispute is filed. Transaction-level AI analyses unusual session patterns, inconsistent device behaviour and hints of scripted activity that typically precede such claims. By spotting these signals early, PSPs can add verification steps or adjust risk scoring before completing the transaction, reducing exposure to later misuse.

11. What data does transaction-level AI rely on?

AI models use a blend of behavioural signals (navigation patterns, typing cadence), device intelligence (hardware configuration, app data), session context (IP stability, location shifts), transaction metadata (BIN, MCC, corridor) and authentication outcomes. No single signal defines risk; it is the relationship between them that enables accurate scoring. PSPs continuously feed outcomes from issuers and disputes back into the model so the system adapts to new patterns.

12. What is the future of AI in fraud detection?

The next major shift is toward federated intelligence: models trained across multiple PSPs, acquirers and networks without sharing raw customer data. This approach helps detect coordinated attacks earlier and strengthens industry-wide resilience. Combined with smarter orchestration, richer metadata and risk-aligned routing, AI will move from being a fraud-prevention tool to a core part of how the global payments ecosystem manages trust.