Fraud in 2026 no longer behaves like a series of isolated attacks that merchants can block with static rules, velocity counters or simple blacklist logic. Instead, fraud has matured into a real-time, adaptive ecosystem, one that learns faster, moves faster and disguises itself more effectively than the tools most merchants still rely on. In high-risk verticals especially, fraudsters blend legitimate device patterns with AI-generated identities, automate behavioural signals and exploit gaps between onboarding, authentication and payment. By the time a transaction reaches the checkout page, many traditional defences have already failed.

This is why reactive fraud filters, systems that trigger only after a checkout attempt or decline event, are no longer sufficient. Fraud prevention has shifted upstream, towards predicting intent before the customer reaches the payment button. Merchants increasingly rely on behavioural analytics, device intelligence and payment-graph data to determine whether a user is trustworthy long before payment credentials appear on-screen. These models do not wait for suspicious activity; they estimate the probability of fraud from interaction patterns, device lineage, identity linkage and network behaviour.

The outcome is a profound shift in how merchants control risk. Instead of blocking transactions after they fail authentication or trip a rule, predictive models evaluate the user’s likelihood of fraud during browsing, form entry and even page navigation. This pre-emptive approach does more than reduce fraud; it improves approval rates, reduces friction for legitimate customers and gives PSPs clearer risk signals long before a bank has to make a decision.

In 2026, the merchants who outperform their peers are not simply filtering fraud; they are forecasting it. And they are using that foresight to build payment journeys that prioritise security without sacrificing conversion. This blog breaks down how predictive risk models work, the signals that feed them, and why they have become essential for high-risk merchants navigating an increasingly complex fraud landscape.

- What Predictive Risk Analytics Really Means in 2026

- The Modern Fraud Graph: How It Works

- The Data Inputs That Power Predictive Models

- High-Risk Vertical Use Cases: How Predictive Models Transform Performance

- The PSP Integration Layer: How Predictive Models Plug Into Merchant Systems

- Compliance, Privacy & Governance Requirements for Predictive Models

- KPIs to Measure Predictive Model Performance

- Conclusion

- FAQs

What Predictive Risk Analytics Really Means in 2026

Predictive risk analytics in 2026 represents a fundamental shift in how merchants understand and prevent fraud. Instead of waiting for a risky transaction to appear at checkout or for a rule to trigger after payment details are entered, modern risk systems now evaluate intent the moment a user begins interacting with a website or app.

Fraud is no longer treated as an event; it is treated as a behavioural pattern, and these patterns emerge long before a payment credential surfaces.

At the centre of this shift are three interconnected pillars: behavioural biometrics, device graph scoring, and payment-graph machine learning. Together, these signals allow merchants to estimate the likelihood of fraud with greater accuracy than post-transaction filters alone. They also make risk decisions faster, more contextual and more closely aligned with how fraud actually manifests in digital environments today.

Behavioural Biometrics as a Primary Risk Signal

In 2026, behavioural biometrics have moved from niche technology to a mainstream risk indicator. Fraudsters struggle to mimic how real users type, scroll, swipe or navigate, even when they use sophisticated tools or stolen credentials. Modern models interpret micro-interactions such as typing cadence, cursor hesitation, pressure variation on mobile touchscreens and even subconscious navigation loops. These signals help reveal whether the person behind the screen is acting naturally or following automated, scripted behaviour.

For merchants, behavioural biometrics offer two critical benefits: they provide meaningful risk signals before checkout, and they allow legitimate users to proceed without added friction. A user demonstrating consistent, human-like behaviour is far less likely to encounter OTP challenges, 3DS friction, or unnecessary verification prompts.
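To make typing cadence concrete, here is a minimal Python sketch that turns key-down timestamps into two summary features and applies a crude scripted-input check. The function names, the coefficient-of-variation feature and the 0.15 threshold are illustrative assumptions, not a production biometric model, which would combine many more signals.

```python
from statistics import mean, pstdev


def keystroke_cadence_features(key_down_times_ms: list[float]) -> dict[str, float]:
    """Summarise typing rhythm from key-down timestamps in milliseconds."""
    if len(key_down_times_ms) < 3:
        raise ValueError("need at least three keystrokes")
    intervals = [b - a for a, b in zip(key_down_times_ms, key_down_times_ms[1:])]
    avg = mean(intervals)
    # Coefficient of variation: human typing has irregular gaps,
    # replayed or scripted input tends to be near-constant.
    cv = pstdev(intervals) / avg if avg > 0 else 0.0
    return {"mean_interval_ms": avg, "interval_cv": cv}


def looks_scripted(features: dict[str, float], cv_floor: float = 0.15) -> bool:
    """Hypothetical rule: flag sessions whose typing rhythm is suspiciously uniform."""
    return features["interval_cv"] < cv_floor


human = keystroke_cadence_features([0, 140, 310, 390, 620, 700])
bot = keystroke_cadence_features([0, 50, 100, 150, 200])
print(looks_scripted(human), looks_scripted(bot))  # False True
```

In a predictive system, a uniform typing burst like this would usually raise a score rather than trigger a block on its own.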

Device Graph Scoring & Identity Correlation

Device intelligence has also evolved beyond fingerprinting and IP checks. In 2026, devices are mapped across a graph network that links them to past behaviours, accounts, transactions and risk outcomes. A device that appears clean at first glance may, once connected to the broader device graph, reveal links to hundreds of previous fraud attempts: connections that would never surface in a traditional rule-based system.

This type of graph scoring helps merchants understand not just what a device looks like, but where it has been, who has used it, and how it behaves within the larger fraud ecosystem.

A fraudulent user cannot easily hide behind VPNs or spoofing when their device metadata betrays a pattern of connections across multiple identities, geographies or payment instruments.
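A toy version of device graph scoring can be sketched in Python by recording which accounts, countries and card fingerprints each device has touched, then reporting fan-out and links to accounts already confirmed as fraudulent. The `DeviceGraph` class and its fields are hypothetical; real device graphs are far larger and typically live in a dedicated graph store rather than in memory.

```python
from collections import defaultdict


class DeviceGraph:
    """In-memory sketch: links each device to the accounts, countries and cards seen with it."""

    def __init__(self) -> None:
        self.accounts: dict[str, set[str]] = defaultdict(set)
        self.countries: dict[str, set[str]] = defaultdict(set)
        self.cards: dict[str, set[str]] = defaultdict(set)
        self.fraud_accounts: set[str] = set()

    def observe(self, device_id: str, account_id: str, country: str, card_fp: str) -> None:
        self.accounts[device_id].add(account_id)
        self.countries[device_id].add(country)
        self.cards[device_id].add(card_fp)

    def mark_fraud(self, account_id: str) -> None:
        self.fraud_accounts.add(account_id)

    def score(self, device_id: str) -> dict[str, int]:
        # .get avoids creating empty entries for devices that were never observed.
        accounts = self.accounts.get(device_id, set())
        return {
            "distinct_accounts": len(accounts),
            "distinct_countries": len(self.countries.get(device_id, set())),
            "distinct_cards": len(self.cards.get(device_id, set())),
            "fraud_linked_accounts": len(accounts & self.fraud_accounts),
        }


graph = DeviceGraph()
graph.observe("dev-1", "acct-a", "GB", "card-1")
graph.observe("dev-1", "acct-b", "DE", "card-2")
graph.observe("dev-1", "acct-c", "NL", "card-3")
graph.mark_fraud("acct-b")
print(graph.score("dev-1"))
```

A device spanning three identities, three countries and three cards, one of them already tied to fraud, is exactly the pattern a VPN cannot hide.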

Payment-Graph Machine Learning

The payment graph is the deepest layer of predictive risk analytics. It maps how users, cards, BINs, wallets, emails, devices and IP addresses interact across thousands of transactions. Fraud rarely occurs in isolation; it emerges in clusters, micro-patterns and shared identifiers. Payment-graph ML models identify these relationships and detect anomalies that are invisible to linear rule engines.

For example, a new card may look legitimate at surface level, but if it appears in a graph cluster associated with synthetic identities, low-trust issuers or device-sharing rings, the model flags elevated risk as soon as the card is linked to the session. And because the same clusters also connect devices, emails and IP addresses, a session can often be scored before any card is entered at all. This ability to analyse network-wide relationships in real time is what enables merchants to prevent fraud before the checkout form is ever reached.
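One simple way to see how shared identifiers pull cards into clusters is a union-find (disjoint-set) pass over transactions: every identifier seen in the same transaction is merged into one cluster. This is a deliberately simplified stand-in for payment-graph ML, which would learn from cluster structure rather than just test membership; the identifier format and the `flagged` set are assumptions for illustration.

```python
class UnionFind:
    """Disjoint-set structure over string identifiers, with path halving."""

    def __init__(self) -> None:
        self.parent: dict[str, str] = {}

    def find(self, x: str) -> str:
        self.parent.setdefault(x, x)
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a: str, b: str) -> None:
        root_a, root_b = self.find(a), self.find(b)
        if root_a != root_b:
            self.parent[root_a] = root_b


def build_clusters(transactions: list[list[str]]) -> UnionFind:
    """Merge every identifier (card, email, device, ...) that co-occurs in a transaction."""
    uf = UnionFind()
    for identifiers in transactions:
        first, *rest = identifiers
        uf.find(first)
        for other in rest:
            uf.union(first, other)
    return uf


def in_flagged_cluster(uf: UnionFind, identifier: str, flagged: set[str]) -> bool:
    root = uf.find(identifier)
    return any(uf.find(f) == root for f in flagged)


txns = [
    ["card:1111", "email:synthetic@example.com", "device:D1"],
    ["card:2222", "device:D1"],  # new card, but shares a device with the synthetic identity
    ["card:3333", "email:regular@example.com"],
]
clusters = build_clusters(txns)
flagged = {"email:synthetic@example.com"}
print(in_flagged_cluster(clusters, "card:2222", flagged))  # True
print(in_flagged_cluster(clusters, "card:3333", flagged))  # False
```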

The Modern Fraud Graph: How It Works

Fraud in 2026 is no longer a matter of isolated bad transactions or individual suspicious users. It operates as a network, where identities, devices, payment instruments and behavioural signals form interconnected patterns. The modern fraud graph captures these relationships and continuously updates them as new data enters the ecosystem. Instead of evaluating one transaction at a time, the fraud graph evaluates the entire structure of relationships surrounding a user’s intent.

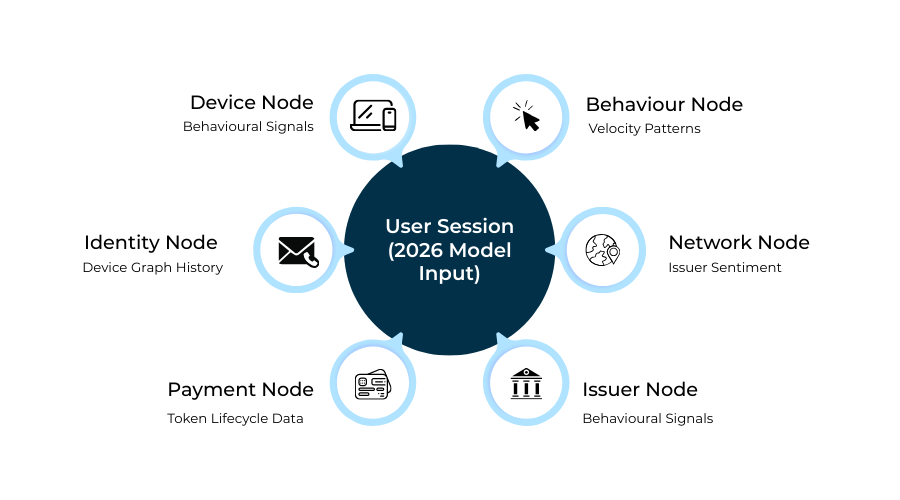

At its core, a fraud graph treats every element involved in a digital transaction (device IDs, emails, phone numbers, BIN ranges, IP addresses, user behaviours and merchant interactions) as a node. The connections between nodes are edges that represent shared histories, repeated patterns, risk relationships or behavioural similarities. When a new user or transaction appears, the system doesn’t examine it in isolation; it evaluates how it fits into the broader graph and whether it connects to known fraud clusters or to trustworthy behaviour.

The true strength of the modern fraud graph lies in its ability to detect subtle, non-linear connections that traditional rule systems cannot interpret. A device may have no obvious red flags, but if it connects indirectly to compromised accounts through shared networks or behavioural mimicry, the model can identify elevated risk. Conversely, a new user may appear unfamiliar, but if their behavioural and device footprint aligns closely with known good identities, the system can reduce friction and improve approval confidence.
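Indirect connections like these can be approximated with a breadth-first search that measures how many hops separate a node from the nearest known-fraud node. The node naming, the `max_hops` cut-off and the halving decay in `proximity_risk` are hypothetical choices; production graph models usually learn such weightings rather than hard-coding them.

```python
from collections import defaultdict, deque
from typing import Optional


def build_adjacency(edges: list[tuple[str, str]]) -> dict[str, set[str]]:
    adjacency: dict[str, set[str]] = defaultdict(set)
    for a, b in edges:
        adjacency[a].add(b)
        adjacency[b].add(a)  # relationships are treated as undirected
    return adjacency


def hops_to_fraud(adjacency: dict[str, set[str]], start: str,
                  fraud_nodes: set[str], max_hops: int = 3) -> Optional[int]:
    """Hop distance from start to the nearest known-fraud node, or None if beyond max_hops."""
    if start in fraud_nodes:
        return 0
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue
        for neighbour in adjacency.get(node, ()):
            if neighbour in seen:
                continue
            if neighbour in fraud_nodes:
                return depth + 1
            seen.add(neighbour)
            queue.append((neighbour, depth + 1))
    return None


def proximity_risk(distance: Optional[int]) -> float:
    """Hypothetical decay: a direct link contributes 0.5, two hops 0.25, and so on."""
    return 0.0 if distance is None else 0.5 ** distance


adj = build_adjacency([
    ("device:D9", "ip:203.0.113.5"),
    ("ip:203.0.113.5", "account:A7"),
    ("account:A7", "card:C3"),
])
fraud = {"card:C3"}
d = hops_to_fraud(adj, "device:D9", fraud)
print(d, proximity_risk(d))  # 3 0.125
```

The device has no direct red flag, yet it sits three hops from a known-fraud card, which is the kind of non-linear connection a rule list would miss.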

Linking Identity, Device & Transaction Signals

The predictive power of the fraud graph comes from the way it blends identity, device and transaction data into a unified structure. Identity elements (emails, phone numbers, names) are linked to device metadata, session signals and past payment outcomes. Transaction patterns (frequency, geographic behaviour, amount clustering) add further context on top. Over time, this creates a high-resolution view of how legitimate and illegitimate users behave.

For merchants, this means risk evaluation becomes both more accurate and more forgiving. Instead of bluntly blocking users who resemble past fraud profiles, the graph evaluates the quality of each connection. A shared IP may not matter if behavioural signals indicate legitimate intent; a new device may be acceptable if its session behaviour aligns with known good patterns. This blend of risk intelligence and behavioural nuance is what allows predictive models to reduce false positives, one of the largest sources of lost revenue in high-risk sectors.

The modern fraud graph is not just a dataset; it is a living structure that evolves as fraud tactics evolve. Merchants who rely on this approach shift from reacting to fraud incidents to anticipating them, often long before a fraudulent user attempts a payment.

The Data Inputs That Power Predictive Models

Predictive risk systems are only as strong as the signals feeding them. In 2026, fraud prevention is no longer about monitoring a few high-level attributes such as IP address, BIN country or transaction velocity. Instead, modern models rely on a multi-layered feed of behavioural, device, network and issuer-side signals, each one adding texture to the user’s risk profile. These signals are interpreted not as isolated datapoints, but as indicators of intent, which is what ultimately distinguishes a legitimate user from a fraudulent one.

The complexity comes from combining these signals in meaningful ways. A single keystroke pattern or a token refresh anomaly may not indicate fraud on its own. But when it is combined with unusual device lineage or irregular issuer response patterns, the likelihood of fraud becomes clearer. Predictive models operate on this layered logic: the more independent dimensions of data they receive, the better they can separate normal behaviour from covert risk.
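This layered logic can be illustrated with a naive-Bayes-style update in log-odds space: each signal multiplies the odds of fraud by its likelihood ratio. The sketch assumes the signals are conditionally independent, which real signals rarely are (learned models handle the correlations), and the 1% prior and likelihood ratios of 3 are made-up numbers chosen only to show the arithmetic.

```python
import math


def combine_signals(prior: float, likelihood_ratios: list[float]) -> float:
    """Posterior fraud probability after applying independent likelihood ratios."""
    if not 0.0 < prior < 1.0:
        raise ValueError("prior must be strictly between 0 and 1")
    log_odds = math.log(prior / (1.0 - prior))
    for lr in likelihood_ratios:
        log_odds += math.log(lr)  # each signal shifts the log-odds additively
    return 1.0 / (1.0 + math.exp(-log_odds))


# Hypothetical numbers: 1% base fraud rate, each weak signal triples the odds.
print(round(combine_signals(0.01, [3.0]), 3))            # one signal: 0.029
print(round(combine_signals(0.01, [3.0, 3.0, 3.0]), 3))  # three signals: 0.214
```

One weak signal barely moves the estimate (from 1% to about 3%), while three together push it to about 21%.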

Behavioural Signals: Interactions That Reveal Intent

Behavioural biometrics generate some of the earliest and most reliable predictors of risk. How a user types, scrolls, taps, navigates or hesitates forms a behavioural signature that is extremely difficult for automated bots or fraud farms to mimic. Keystroke cadence, scrolling rhythm, touch-pressure variation and navigation loops offer clues about whether the user is behaving naturally or following a scripted or augmented pattern.

In high-risk sectors like gaming, forex and travel, these signals help identify fabricated identities, testing bots and pre-programmed flows long before the user enters personal information. Because these patterns are collected silently, they add no friction while providing powerful early-stage risk intelligence.

Velocity & Pattern Anomalies Across Users or Devices

Velocity checks used to be simple (how many attempts in how much time), but modern velocity analysis is far more advanced. Predictive models track how behaviour changes across sessions, devices, accounts and even merchant clusters. Sudden spikes in activity, inconsistent navigation patterns or abnormal attempt sequences often reveal orchestrated fraud operations rather than genuine customers.

For example, a user who browses normally but unexpectedly switches into high-speed form-filling is likely automation-assisted. Likewise, a device that appears across multiple unrelated merchant sites within minutes may signal credential stuffing or synthetic identity testing. These anomalies feed into the model’s understanding of abnormal intent.
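The cross-merchant example maps naturally onto a sliding-window counter. The sketch below, with hypothetical class and parameter names and a five-minute window chosen only for illustration, counts how many distinct merchants a device has touched within the window; it assumes events for each device arrive in timestamp order.

```python
from collections import deque


class CrossMerchantVelocity:
    """Distinct merchants seen per device within a sliding time window."""

    def __init__(self, window_seconds: float = 300.0) -> None:
        self.window = window_seconds
        self.events: dict[str, deque] = {}

    def record(self, device_id: str, merchant_id: str, ts: float) -> int:
        q = self.events.setdefault(device_id, deque())
        q.append((ts, merchant_id))
        # Evict events that have fallen out of the window (assumes in-order timestamps).
        while q and ts - q[0][0] > self.window:
            q.popleft()
        return len({merchant for _, merchant in q})


velocity = CrossMerchantVelocity(window_seconds=300)
for merchant, ts in [("m1", 0), ("m2", 60), ("m3", 120), ("m1", 200)]:
    count = velocity.record("dev-42", merchant, ts)
print(count)  # 3 distinct merchants within five minutes
```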

BIN, Issuer & Token Lifecycle Irregularities

Issuer behaviour has become one of the strongest external indicators of fraud risk. Predictive models monitor how specific issuers react to transactions: tightening rules, issuing soft declines, or frequently updating authentication requirements. When an issuer shows early hesitation, it often correlates with elevated downstream fraud probability.

Similarly, token lifecycle signals (token ageing patterns, cryptogram rotation anomalies, or mismatches between stored and live token states) can reveal potential account compromise, device swapping or credential uncertainty. In a predictive model, these metadata-level insights strengthen the overall risk signal before the payment reaches the issuer.
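Issuer hesitation can be approximated by comparing an issuer’s (or BIN’s) recent soft-decline rate with its own baseline. The dataclass, the 1.5x ratio threshold and the minimum-volume guard below are illustrative assumptions, not an industry standard.

```python
from dataclasses import dataclass


@dataclass
class IssuerWindow:
    attempts: int
    soft_declines: int

    @property
    def soft_decline_rate(self) -> float:
        return self.soft_declines / self.attempts if self.attempts else 0.0


def issuer_hesitation(recent: IssuerWindow, baseline: IssuerWindow,
                      ratio_threshold: float = 1.5, min_attempts: int = 50) -> bool:
    """Flag an issuer whose recent soft-decline rate has risen well above its baseline."""
    if recent.attempts < min_attempts:
        return False  # too little recent volume to tell signal from noise
    if baseline.soft_decline_rate == 0.0:
        return recent.soft_decline_rate > 0.0
    return recent.soft_decline_rate / baseline.soft_decline_rate >= ratio_threshold


baseline = IssuerWindow(attempts=1000, soft_declines=40)   # 4% soft declines
print(issuer_hesitation(IssuerWindow(200, 16), baseline))  # 8%: True
print(issuer_hesitation(IssuerWindow(200, 9), baseline))   # 4.5%: False
```

In a predictive model, this flag would be one more input feature for transactions routed to that issuer, not a decision on its own.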

High-Risk Vertical Use Cases: How Predictive Models Transform Performance

Predictive risk analytics deliver the greatest value in high-risk vertical industries where fraud evolves quickly, identities are fluid, user intent is ambiguous, and transactional behaviour often mimics legitimate activity until the final moments of checkout. In these environments, traditional verification methods fall short because fraud actors know how to avoid simple flags. What separates successful merchants in 2026 is their ability to interpret behavioural, device and network-level indicators before the user even initiates payment.

Predictive models provide high-risk merchants with something they’ve historically lacked: early visibility into user intent. Instead of waiting for suspicious card behaviour, mismatched IPs or unusual transaction amounts, merchants can detect high-risk patterns during browsing, sign-up and form engagement. This pre-emptive approach not only reduces fraud but also prevents legitimate users from being subjected to unnecessary friction. The result is a dual advantage, lower fraud losses and higher approval rates.

Below are illustrative scenarios showing how predictive models reshape fraud prevention within high-risk verticals.

- iGaming: Identifying Synthetic Players & Bonus Abuse Before Registration Completes

The iGaming ecosystem attracts sophisticated fraudsters who specialise in creating synthetic player profiles for bonus exploitation, chip dumping, multi-accounting and card testing. Traditional risk engines typically catch these behaviours only after the account is created or after a payment attempt.

Predictive models shift detection much earlier. Behavioural biometrics identify atypical navigation flows, such as players who instantly move to promotional pages or repeat identical sequences across multiple devices. Device graphs reveal clusters of accounts linked to shared fingerprints or recycled mobile IDs. Payment graphs surface cards or wallets used repeatedly across multiple unrelated accounts, highlighting organised fraud rings.

By spotting these patterns before registration is finalised, merchants prevent fraudulent users from entering the ecosystem entirely, reducing AML exposure, bonus abuse and subsequent chargebacks.

- Forex/CFD: Predicting Proof-of-Funds Legitimacy Using Behavioural Intent Signals

Forex, CFD and trading platforms face unique challenges because fraudulent actors often attempt to pass KYC checks using stolen or synthetic identities, then deposit funds from compromised payment instruments. Predictive risk models analyse the user’s engagement with onboarding forms, document-upload behaviour, mouse-flow irregularities and attempt velocity to identify whether the customer intends to meet future obligations or exit quickly after one fraudulent transaction.

Importantly, predictive systems can identify early-stage proof-of-funds manipulation. If the user repeatedly toggles between funding methods, uses inconsistent device profiles or demonstrates automated document-upload patterns, the system can restrict access before any financial activity occurs. This protects merchants from high-value fraud events that are difficult to recover post-settlement.

- Adult & Dating: Preventing Privacy-Masked Fraud Without Adding Friction

Adult and dating merchants operate in a context where legitimate customers often use privacy tools (VPNs, private browsers, temporary emails), not because of malicious intent but because of the sensitive nature of the content. This makes fraud detection uniquely complex: merchants must distinguish between genuine privacy-conscious users and actors using obfuscation to mask fraud.

Predictive models excel in this nuance. Behavioural signals reveal natural user movement, while fraud actors often show mechanical or overly efficient patterns. Device graphs identify whether the device has previously been associated with successful, dispute-free sessions even if the location or IP changes. Payment graphs detect whether the same token or BIN has a trustworthy history.

This approach allows merchants to approve legitimate privacy-seeking users while stopping malicious actors without intrusive verification flows.

- Travel & Ticketing: Stopping Fake Bookings & Reseller Fraud Before Search-to-Pay Flow

Travel merchants face constant attacks from fraud rings exploiting refundable ticketing, automated seat scraping and large-volume last-minute booking fraud. Traditional systems detect these attempts only when the user enters payment details, which is often too late.

Predictive risk analytics start much earlier in the journey. Abnormal search-to-booking ratios, robotic navigation speed, repeated route probing, and device identity overlaps expose fraudulent intent long before checkout. Payment graphs detect instruments linked to mule accounts or previous high-risk travel corridors.
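Two of these travel signals, search-to-booking ratio and navigation speed, can be expressed as simple session features. The function name and thresholds here (50 searches per booking, a one-second median gap between requests) are hypothetical placeholders; in a predictive system such values would feed a model rather than act as hard rules.

```python
from statistics import median


def travel_session_flags(searches: int, bookings: int, request_times_s: list[float],
                         max_search_to_book: float = 50.0,
                         min_median_gap_s: float = 1.0) -> list[str]:
    """Return hypothetical risk flags for a single search-to-book session."""
    flags = []
    # Treat zero bookings as one to avoid dividing by zero.
    if searches / max(bookings, 1) > max_search_to_book:
        flags.append("abnormal_search_to_booking_ratio")
    if len(request_times_s) >= 2:
        gaps = [b - a for a, b in zip(request_times_s, request_times_s[1:])]
        if median(gaps) < min_median_gap_s:
            flags.append("robotic_navigation_speed")
    return flags


print(travel_session_flags(400, 2, [0.0, 0.2, 0.4, 0.6]))  # both flags
print(travel_session_flags(12, 1, [0.0, 8.0, 20.0]))       # []
```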

By blocking or challenging high-risk users during search or before ticket confirmation, merchants prevent fake inventory holds, reduce operational strain and protect payment performance for genuine travellers.

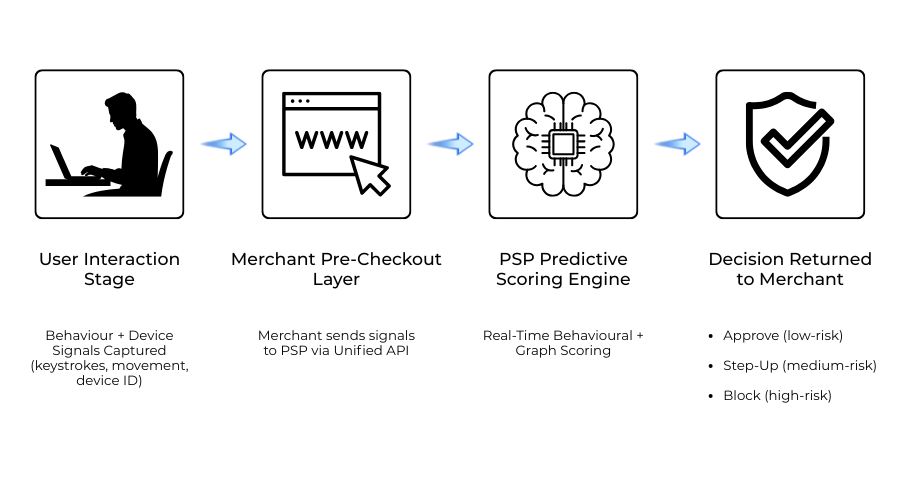

The PSP Integration Layer: How Predictive Models Plug Into Merchant Systems

Predictive risk analytics are only effective if they integrate seamlessly into the merchant’s existing payment and onboarding flows. In 2026, PSPs no longer provide fraud tools as isolated add-ons; they act as real-time intelligence layers, feeding behavioural, device and payment-graph scores into the merchant’s internal logic long before checkout. This integration determines whether a merchant can operationalise predictive insights or whether they remain theoretical gains trapped inside fragmented systems.

Modern PSPs deliver predictive risk capabilities through unified APIs that collect, score and return risk evaluations at multiple stages of the customer journey.

Instead of relying solely on the checkout page for fraud detection, merchants receive detailed risk signals during browsing, form interactions, account creation, deposit attempts, and pre-payment checks. This early-stage visibility transforms how merchants design onboarding flows, evaluate user trust, and decide when to route, challenge or block a transaction.

The integration layer also ensures that risk scoring remains a continuous, adaptive process rather than a fixed evaluation. As fraud patterns evolve, PSP models update in real time, with new model outputs instantly reflected in merchant decisioning logic. This reduces the operational burden on merchants, who no longer need to redesign rule sets every time a fraud tactic shifts. Instead, the PSP’s predictive engine becomes a constantly learning component of the merchant’s fraud stack.

Unified APIs That Deliver Pre-Checkout Scores

In previous years, risk scoring occurred only once the user attempted a payment. In 2026, PSPs offer pre-checkout scoring endpoints that return behavioural and device-risk scores as soon as a user exhibits meaningful interaction patterns.

This enables merchants to:

- Suppress high-risk users before they reach the payment page

- Apply targeted friction (such as step-up verification) only where necessary

- Dynamically modify checkout options based on the predicted likelihood of fraud

For example, if the risk score indicates synthetic identity behaviour, the merchant may require additional verification or restrict high-risk payment methods. Conversely, low-risk users may receive a streamlined checkout experience with fewer authentication challenges.
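A merchant’s handling of a pre-checkout score might look like the following sketch. It assumes the PSP returns a fraud probability between 0 and 1 plus a synthetic-identity indicator; the `Action` names and both thresholds are hypothetical and would be tuned against the merchant’s own fraud and conversion data.

```python
from enum import Enum


class Action(Enum):
    STREAMLINED = "streamlined_checkout"
    STEP_UP = "step_up_verification"
    RESTRICT_METHODS = "restrict_high_risk_methods"
    BLOCK = "block"


def decide(score: float, synthetic_identity_suspected: bool = False,
           step_up_at: float = 0.3, block_at: float = 0.85) -> Action:
    """Map a pre-checkout risk score to a checkout treatment (illustrative thresholds)."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be a probability")
    if score >= block_at:
        return Action.BLOCK
    if synthetic_identity_suspected:
        return Action.RESTRICT_METHODS  # keep the user, but limit risky payment methods
    if score >= step_up_at:
        return Action.STEP_UP
    return Action.STREAMLINED


print(decide(0.12).value)                                     # streamlined_checkout
print(decide(0.45).value)                                     # step_up_verification
print(decide(0.20, synthetic_identity_suspected=True).value)  # restrict_high_risk_methods
```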

Real-Time Feedback Loops From Declines, Approvals & Rule Triggers

A critical advantage of PSP-driven predictive models is the feedback loop. Every approval, decline, issuer reaction, refund, dispute or fraud incident becomes a training input that sharpens model accuracy. Merchants benefit because the system evolves automatically rather than requiring manual configuration.
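At its simplest, a feedback loop means updating model weights each time a labelled outcome (a confirmed fraud, a clean approval) comes back. The sketch below uses online logistic regression with plain stochastic gradient descent; the class name, learning rate and two-feature encoding are assumptions, and real PSP pipelines often retrain in batches with validation before deploying a new model.

```python
import math


class OnlineLogisticScorer:
    """Logistic-regression fraud scorer updated one labelled outcome at a time."""

    def __init__(self, n_features: int, learning_rate: float = 0.1) -> None:
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = learning_rate

    def predict(self, x: list[float]) -> float:
        z = self.b + sum(wi * xi for wi, xi in zip(self.w, x))
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, x: list[float], is_fraud: bool) -> None:
        # For log-loss, the gradient with respect to z is (prediction - label).
        error = self.predict(x) - (1.0 if is_fraud else 0.0)
        self.w = [wi - self.lr * error * xi for wi, xi in zip(self.w, x)]
        self.b -= self.lr * error


model = OnlineLogisticScorer(n_features=2, learning_rate=0.5)
# Hypothetical features: [device linked to prior fraud, long clean account history].
for _ in range(200):
    model.update([1.0, 0.0], is_fraud=True)
    model.update([0.0, 1.0], is_fraud=False)
print(round(model.predict([1.0, 0.0]), 2), round(model.predict([0.0, 1.0]), 2))
```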

When issuers begin tightening fraud controls, or when a new fraud ring emerges across multiple merchants, PSPs can detect the pattern immediately through cross-merchant intelligence. That insight flows back to every merchant integrated into the system, offering protection even before the fraud actor reaches their website.

Real-time feedback loops also reduce operational noise. Instead of merchants reacting to isolated fraud cases, the PSP’s predictive model adapts continuously, ensuring that risk scoring reflects live ecosystem conditions, not outdated rule sets.

Compliance, Privacy & Governance Requirements for Predictive Models

As predictive risk analytics become more sophisticated, regulators are paying closer attention to how behavioural, device and network-level signals are collected, processed and interpreted. In 2026, merchants cannot adopt advanced fraud models without also addressing the compliance frameworks that govern them. Predictive analytics operate at the intersection of data protection, consumer rights, AI governance and financial regulation, which means merchants must build systems that are both technically effective and legally defensible.

The shift toward behavioural and device-level profiling has raised important questions around consent, transparency and proportionality. Regulators are increasingly clear: fraud prevention is a legitimate interest, but it must be implemented in a way that respects user privacy, ensures fairness, and avoids excessive or opaque data processing. PSPs and merchants who use predictive models must therefore document, justify and audit how their algorithms work and what data they rely on.

This compliance layer is not a barrier but a structural requirement for operating in high-risk and high-volume environments. Predictive models that fail to meet governance expectations are not simply risky; they may become regulatory liabilities. Below are the most critical regulatory themes shaping predictive risk analytics in 2026.

GDPR, Data Minimisation & Cross-Border Data Transfers

GDPR remains the foundational framework for behavioural and device analytics across Europe and increasingly influences global standards. Under GDPR, fraud prevention qualifies as a “legitimate interest,” but merchants must demonstrate that the data they collect is necessary, proportionate, and minimally invasive.

Behavioural biometrics, for instance, are generally permitted, but only when collected passively and used strictly for fraud detection. Merchants must avoid gathering raw behavioural data when aggregated or derived signals would suffice. Cross-border transfers further complicate matters, especially when data is processed by PSPs or vendors outside the EU. Model training datasets must comply with transfer safeguards such as Standard Contractual Clauses (SCCs) or other approved frameworks.

Merchants who implement predictive analytics without a clear minimisation rationale may expose themselves to enforcement actions or customer complaints, even if the underlying fraud prevention objectives are valid.
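One practical minimisation pattern is to pseudonymise linkage identifiers with a keyed hash and forward only derived features to the fraud model. The sketch below uses HMAC-SHA256 from Python’s standard library; the field names are hypothetical, and pseudonymised data generally still counts as personal data under GDPR, so this reduces exposure rather than taking the data out of scope.

```python
import hashlib
import hmac


def pseudonymise(identifier: str, secret_key: bytes) -> str:
    """Keyed hash so the same email or device links across sessions without being stored in clear."""
    normalised = identifier.strip().lower()
    return hmac.new(secret_key, normalised.encode("utf-8"), hashlib.sha256).hexdigest()


def minimise_session(raw: dict, secret_key: bytes) -> dict:
    """Keep pseudonymised linkage keys and derived signals; drop everything else."""
    return {
        "email_key": pseudonymise(raw["email"], secret_key),
        "device_key": pseudonymise(raw["device_id"], secret_key),
        "typing_interval_cv": raw["typing_interval_cv"],
        # Raw keystroke timestamps, full names and free-text fields are not forwarded.
    }


key = b"example-key-held-in-a-secrets-manager"
session = minimise_session(
    {
        "email": " Alice@Example.com ",
        "device_id": "dev-7f3a",
        "typing_interval_cv": 0.41,
        "keystroke_times_ms": [0, 140, 310, 390],
        "full_name": "Alice Example",
    },
    key,
)
print(sorted(session))  # ['device_key', 'email_key', 'typing_interval_cv']
```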

PSD3 Requirements for Data Transparency & Risk-Based Authentication

The transition from PSD2 to PSD3 increases expectations around how payment service providers handle behavioural and device-level risk assessments. PSD3 emphasises transparency: users must be informed when their data contributes to fraud detection, and PSPs must explain how these signals support risk-based authentication decisions.

This does not mean exposing algorithmic logic, but it does require high-level clarity about which categories of data influence fraud decisions. PSPs must also ensure that predictive models do not create discriminatory outcomes, for example by penalising users based solely on geography or device type without legitimate risk justification.

PSD3 also strengthens rules around step-up authentication. Predictive models must justify when a transaction is allowed to bypass SCA or when additional verification is required. The decision must be explainable and auditable.

Explainability Standards for AML & AI Fairness

As predictive models increasingly influence onboarding and transaction approval pathways, regulators now expect a degree of explainability, especially in high-risk sectors subject to AML oversight. Risk decisions cannot be black boxes. Merchants must be able to provide:

- Documented reasoning for why the model flagged a user

- A traceable data lineage for the signals used

- Evidence that the decision aligns with anti-fraud and AML objectives

This is particularly important when predictive models result in onboarding rejection, account limitation or payment denial. Regulators require merchants to ensure that these decisions are free of unlawful bias and based on legitimate, verifiable risk factors.
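For a linear or scorecard-style model, documented reasoning can start with reason codes: the features that contributed most to a given score. The weights and feature names below are invented for illustration; for non-linear models, attribution methods such as SHAP are a common way to produce comparable per-decision explanations.

```python
def reason_codes(weights: dict[str, float], features: dict[str, float],
                 top_n: int = 3) -> list[tuple[str, float]]:
    """Rank features by the contribution (weight x value) that pushed the risk score up."""
    contributions = {name: weights.get(name, 0.0) * value for name, value in features.items()}
    risk_raising = [(name, c) for name, c in contributions.items() if c > 0]
    return sorted(risk_raising, key=lambda item: item[1], reverse=True)[:top_n]


weights = {"cross_merchant_velocity": 0.8, "device_fraud_links": 1.2,
           "vpn_detected": 0.3, "typing_interval_cv": -2.0}
features = {"cross_merchant_velocity": 4, "device_fraud_links": 2,
            "vpn_detected": 1, "typing_interval_cv": 0.05}
for name, contribution in reason_codes(weights, features):
    print(f"{name}: +{contribution:.2f}")
```

Storing these codes alongside each decision, together with the model version and input snapshot, gives auditors a traceable record of why a user was flagged.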

AI fairness frameworks are also expanding. Models must undergo periodic audits to detect drift, discrimination and false-positive inflation. High-risk merchants benefitting from predictive analytics must therefore invest in operational governance, ensuring that fast-moving AI systems remain controlled, transparent and compliant.

KPIs to Measure Predictive Model Performance

Predictive risk models can dramatically improve fraud prevention, approval rates and customer experience, but only when merchants monitor the right performance indicators. In 2026, evaluating a predictive system requires more nuance than simply checking whether fraud declines or approvals rise. Modern models influence every stage of the customer journey, from initial browsing to final payment, so the KPIs must capture not only fraud reduction but also conversion, false positives, friction levels and risk-model stability.

A predictive model should demonstrate tangible value by improving outcomes before checkout begins. If a merchant’s fraud losses drop but legitimate users face more friction or abandonment increases, the model is misaligned. Conversely, if approvals increase because risky users are filtered upstream, merchants must still ensure that the model’s decisions are explainable and consistent. The goal is not just to block fraud more effectively; it is to build a risk engine that improves revenue while reducing operational strain.

Below are the KPIs that matter most when assessing predictive model performance in high-risk environments.

- Pre-Checkout Fraud Interception Rate

One of the most meaningful indicators of success is how effectively the model identifies fraudulent intent before payment details are entered. This metric measures the percentage of fraud prevented during browsing, form interaction or early onboarding steps.

A high interception rate demonstrates that the model is proactively neutralising risk and reducing the number of fraudulent users who ever reach the checkout page. This, in turn, lowers issuer scrutiny, decreases chargebacks and protects approval performance for legitimate customers.
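One way to compute this KPI is to tag every confirmed fraud attempt with the stage at which it was stopped or surfaced, then take the share stopped before payment details were entered. The stage labels and the choice of denominator (all confirmed fraud, including cases that only surfaced later as chargebacks) are assumptions; merchants should document whichever definition they use so the figure stays comparable over time.

```python
# Hypothetical stage labels that count as "pre-checkout".
PRE_CHECKOUT_STAGES = {"browsing", "form", "onboarding"}


def pre_checkout_interception_rate(fraud_stages: list[str]) -> float:
    """Share of confirmed fraud attempts stopped before payment details were entered.

    Each entry is the stage at which one confirmed fraud attempt was stopped or surfaced,
    e.g. "browsing", "form", "onboarding", "checkout" or "post_checkout".
    """
    if not fraud_stages:
        return 0.0
    stopped_early = sum(1 for stage in fraud_stages if stage in PRE_CHECKOUT_STAGES)
    return stopped_early / len(fraud_stages)


print(pre_checkout_interception_rate(["browsing", "form", "checkout", "post_checkout"]))  # 0.5
```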

- Approval Rate Uplift for Legitimate Users

A predictive model is valuable not only when it blocks fraud but when it improves transaction success for good users. By filtering out high-risk traffic early, merchants reduce issuer suspicion and authentication friction. Approval uplift becomes a direct byproduct of smarter upstream decisioning.

This KPI is especially critical for high-risk verticals, where legitimate customers often face unnecessary declines because fraud-heavy traffic skews issuer risk models.

- Reduction in False Positives

False positives (legitimate customers incorrectly blocked or challenged) can quietly erode revenue. Predictive models should reduce false positives by using behavioural and device-based indicators to distinguish genuine users from scripted behaviour.

This metric requires ongoing monitoring because false positives tend to increase when models drift or when fraud patterns evolve faster than the training dataset.
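False positives can be tracked in two complementary ways: the share of legitimate users the model flagged (false-positive rate) and the share of flagged users who turned out to be legitimate. The sketch assumes each decision is eventually labelled with a confirmed outcome, which in practice arrives with a delay (chargebacks can take weeks), so both figures should be recomputed as labels mature. The function names are illustrative.

```python
def false_positive_rate(decisions: list[tuple[bool, bool]]) -> float:
    """decisions: (was_flagged, confirmed_fraud) pairs.

    Legitimate users flagged divided by all legitimate users.
    """
    legit_flags = [was_flagged for was_flagged, fraud in decisions if not fraud]
    return sum(legit_flags) / len(legit_flags) if legit_flags else 0.0


def flagged_but_legitimate_share(decisions: list[tuple[bool, bool]]) -> float:
    """Share of flagged users who were actually legitimate (false discovery rate)."""
    outcomes_of_flagged = [fraud for was_flagged, fraud in decisions if was_flagged]
    if not outcomes_of_flagged:
        return 0.0
    return sum(1 for fraud in outcomes_of_flagged if not fraud) / len(outcomes_of_flagged)


decisions = [(True, True), (True, False), (False, False),
             (False, False), (True, False), (False, True)]
print(false_positive_rate(decisions))                     # 0.5
print(round(flagged_but_legitimate_share(decisions), 3))  # 0.667
```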

- Model Drift Stability

Predictive systems naturally degrade over time as fraud tactics evolve. “Model drift” occurs when the model’s predictions become less accurate because its training data no longer reflects real-world patterns.

Monitoring drift ensures that merchants:

- Refresh training datasets

- Recalibrate thresholds

- Review high-risk signals

- Validate fairness and explainability

A stable model adapts continuously without degrading performance or increasing noise.
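A widely used drift check is the Population Stability Index (PSI), which compares how model scores were distributed at training time with how they are distributed now. The bin proportions below are invented, and the commonly quoted reading (below 0.1 stable, 0.1 to 0.25 worth investigating, above 0.25 a significant shift) is an industry rule of thumb rather than a regulatory threshold.

```python
import math


def population_stability_index(expected: list[float], actual: list[float],
                               eps: float = 1e-6) -> float:
    """PSI between two binned score distributions, each given as bin proportions."""
    if len(expected) != len(actual):
        raise ValueError("distributions must use the same bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # guard against empty bins and log(0)
        psi += (a - e) * math.log(a / e)
    return psi


training = [0.25, 0.25, 0.25, 0.25]  # score-bin shares when the model was trained
live = [0.10, 0.20, 0.30, 0.40]      # hypothetical shares this month
print(round(population_stability_index(training, live), 3))
```

A value in the investigate band would prompt the refresh, recalibration and review steps listed above.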

- Downstream Fraud Reduction (Post-Checkout)

Even with strong pre-checkout detection, merchants must measure how many fraudulent attempts slip through and appear as chargebacks, friendly fraud cases or refund disputes. This KPI validates that upstream predictive controls are aligned with downstream realities.

When predictive models work correctly, downstream fraud should decline without increasing friction for legitimate users.

Conclusion

By 2026, predictive risk analytics have fundamentally reshaped how merchants, PSPs and issuers understand fraud. Instead of relying on filters that react after a suspicious payment attempt, the most effective merchants now use behavioural, device and payment-graph intelligence to anticipate fraud far earlier in the journey. This shift from reactive defence to proactive detection is not simply a technological upgrade; it is a structural evolution in how digital commerce operates.

Predictive models give merchants something they have historically lacked: genuine insight into user intent before a transaction ever occurs. When combined with device lineage, cluster analysis and issuer-side patterns, risk scoring becomes dramatically more accurate and significantly less disruptive to legitimate customers. The result is a payments ecosystem where fraud is intercepted before checkout, good customers face fewer authentication hurdles, and PSPs can route traffic with greater confidence.

For high-risk merchants especially, predictive analytics are no longer optional. Industries like gaming, forex, travel and adult content operate in environments where fraud morphs continuously and traditional rules engines cannot keep pace. Predictive systems expose synthetic identities before registration, block fraudulent browsing sessions before deposit attempts, and learn from issuer health signals before declines accumulate. These capabilities do more than reduce fraud; they protect revenue, improve approval rates and stabilise long-term acquiring relationships.

The merchants who succeed in 2026 will be those who treat predictive risk not as a plug-in tool, but as an integrated part of their operating model. When predictive analytics become part of onboarding flows, UX design, routing logic and compliance governance, fraud no longer shapes the business; instead, the business shapes its fraud posture. And in a world where margins shrink and attack surfaces expand, that level of control becomes a competitive advantage no merchant can afford to ignore.

FAQs

1. What makes predictive risk analytics different from traditional fraud filters?

Traditional filters react after a payment attempt by checking static rules or velocity counters. Predictive analytics analyse behavioural, device and network-level patterns before checkout, allowing merchants to identify malicious intent early and prevent fraud proactively.

2. What types of behavioural data are used in predictive fraud models?

Predictive models assess keystroke rhythms, mouse movements, scroll behaviour, touch-pressure variations, navigation patterns, and session timing. These micro-interactions help determine whether the user behaves naturally or exhibits automation or scripted behaviour associated with fraud.

3. Are behavioural biometrics compliant with GDPR and PSD3?

Yes, when implemented correctly. GDPR permits behavioural biometrics for fraud prevention under legitimate interest, but requires data minimisation and transparency. PSD3 adds expectations around explainability and fairness, ensuring the signals used are necessary, proportionate and non-discriminatory.

4. How do device graphs help prevent fraud?

Device graphs map relationships between devices, users, IPs, cards and past risk outcomes. Even if a device appears “clean,” graph connections may reveal hidden links to fraud clusters, synthetic identities or bot networks, enabling earlier and more accurate intervention.

5. What is a payment graph, and why is it important?

A payment graph is a dynamic network showing how cards, tokens, emails, devices and users interact across transactions. Fraud rarely occurs in isolation; payment-graph ML identifies clusters and hidden correlations that simple risk rules cannot detect.

6. Can predictive models reduce false positives?

Yes. Predictive analytics distinguish between high-risk anomalies and legitimate but unusual behaviour. By analysing intent rather than isolated datapoints, these models can significantly reduce the number of genuine customers incorrectly flagged as fraudulent.

7. How early in the user journey can predictive scoring take place?

Modern PSP integrations allow scoring during browsing, account creation, form entry and pre-checkout interactions. This helps merchants block malicious users long before they attempt a payment.

8. Do predictive models work for high-risk verticals like gaming, forex and travel?

Absolutely. These industries benefit the most because they attract synthetic identities, bots, bonus abusers and high-value fraud attempts. Predictive models catch patterns earlier than traditional systems, improving both fraud prevention and approval rates.

9. How do PSPs integrate predictive risk analytics into merchant systems?

PSPs provide unified APIs that deliver risk scores in real time. These APIs feed behavioural and device intelligence into the merchant’s onboarding, checkout, routing and authentication flows, enabling faster decisions and continuous model optimisation.

10. Does using predictive analytics affect issuer approval rates?

Yes, positively. By filtering high-risk traffic upstream, merchants send issuers “cleaner” traffic. Issuers tend to respond with more approvals and fewer soft declines, and authentication performance improves.

11. Can predictive models detect bots and automation tools?

Predictive systems identify automation through tell-tale signs such as mechanical cursor movement, repetitive timing cycles, unnatural form completion speed, and device fingerprints common to known bot networks. These signals are detected long before checkout.

12. What KPIs should merchants track to measure model performance?

Key KPIs include pre-checkout fraud interception rate, approval rate uplift, false-positive reduction, downstream fraud decline, and model drift stability. These metrics demonstrate the financial and operational impact of predictive analytics.