By 2026, fraud no longer waits for scale. It manufactures it. The most damaging fraud patterns facing digital merchants today do not begin with stolen cards or suspicious transactions. They begin earlier, quietly, and at industrial speed inside automated onboarding flows that were designed for growth, not resistance. Bots create accounts, bypass lightweight verification, attach payment methods, and monetise within hours. By the time a transaction looks suspicious, the fraud has already paid for itself.

This shift matters because it inverts traditional fraud economics. Fraud is no longer something that happens to a real customer. It is something engineered from the first API call. Fake accounts are not noise at the edge of the funnel; they are the funnel.

What makes this wave difficult to contain is not just volume, but intent. These are not random sign-ups hoping to slip through. They are purpose-built identities, assembled from recycled credentials, synthetic data, and automated behaviour designed to look just real enough to pass early controls. Payment methods are attached immediately, promotions are harvested aggressively, and payouts are triggered before human review ever becomes economical.

For merchants, this creates a dangerous blind spot. Onboarding teams optimise for conversion. Payments teams focus on transaction risk. Fraud teams are asked to intervene after losses appear. “Fraud-funded onboarding” thrives in the gaps between those functions.

Stopping it requires a different mindset. The objective is no longer to detect bad transactions. It is to prevent fake users from ever becoming economically viable.

- How bot-driven onboarding works

- Framework: onboarding risk score, first-transaction risk score, payout risk score

- Controls: device fingerprinting, behavioural biometrics, velocity and identity lineage

- Progressive limits: caps that relax only after trust is earned

- Operational playbook: automated clustering, case routing, and suppression rules

- KPIs: measuring whether onboarding is becoming safer or just quieter

- Conclusion: stop fraud before it becomes a payment event

- FAQs

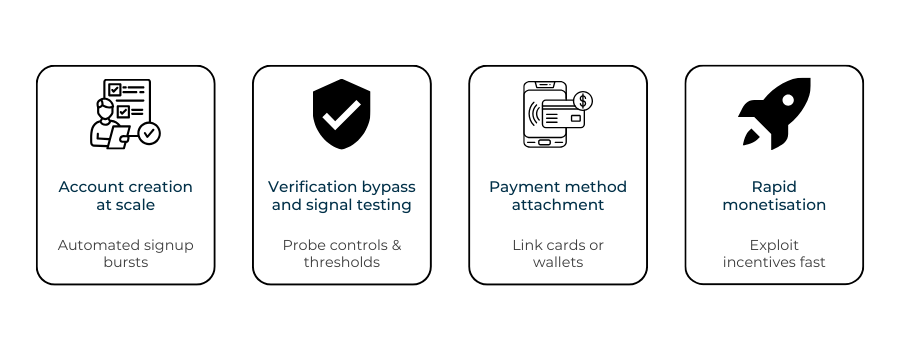

How bot-driven onboarding works

Bot-driven onboarding is not a single trick. It is a production line. Each stage is optimised, tested, and iterated until the economics work. Understanding the flow matters because controls that succeed at one step often fail at the next.

Account creation at machine speed

The process begins with scale. Automated scripts create thousands of accounts in parallel, rotating devices, IP ranges, and behavioural signatures. These are not crude form-fillers. Modern bot frameworks simulate hesitation, correction, and variation in input timing. They are trained to look like distracted humans, not like software.

What makes this stage effective is that most onboarding systems still treat account creation as low risk. Email or phone verification is assumed to be sufficient friction. In reality, these checks are commoditised. Disposable inboxes, SMS farms, and pre-warmed phone numbers allow bots to pass with near-perfect success rates.

The objective at this stage is not longevity. Individual accounts are expendable: the bot operator only needs a percentage of them to survive long enough to move forward.

Verification bypass without breaking rules

The next phase is not about hacking verification. It is about exploiting its assumptions.

Lightweight identity checks, step-up verification triggers, and document uploads are often designed around honest failure, not adversarial optimisation. Bots learn which combinations of data trigger deeper review and which quietly pass. They abandon accounts that encounter friction and double down on those that do not.

This is where synthetic identity fragments become powerful. Reused names, recycled addresses, breached credentials, and plausible-but-fake demographic details combine to create profiles that satisfy surface-level checks without establishing a real-world anchor.

From the system’s perspective, nothing looks obviously wrong. From the attacker’s perspective, this is signal discovery.

Payment method attachment as the real test

For attackers, attaching a payment method is the moment of truth. It determines whether the account can generate value.

Stolen cards, mule-controlled accounts, and compromised wallets are introduced at this stage selectively, not randomly. Bots test which payment types pass without friction, which trigger step-ups, and which allow immediate spending or withdrawal.

Merchants often underestimate how much information is leaked here. Decline codes, verification prompts, and timing responses all feed back into the bot’s decision logic. Over time, the system learns which paths lead to monetisation and which should be avoided.

Monetisation before detection

Once a viable path is found, speed becomes the priority.

Promotions are redeemed. Free trials are converted. Digital goods are resold. Payouts are triggered. The account may only live for hours, but that is enough. Losses are spread thinly across many accounts, keeping any single signal below alert thresholds.

By the time fraud surfaces in transaction monitoring dashboards, the onboarding funnel has already done its job.

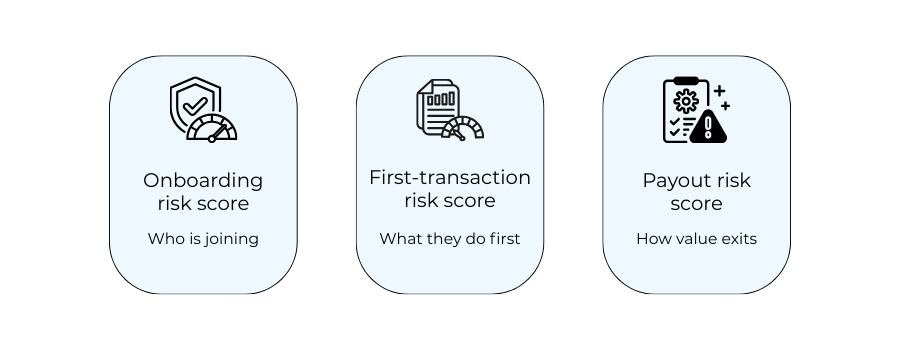

Framework: onboarding risk score, first-transaction risk score, payout risk score

Stopping fraud-funded onboarding requires abandoning the idea of a single, decisive checkpoint. No one score can capture intent that unfolds over time. What works in practice is a layered view of risk that evolves as the account moves from creation to monetisation.

The framework below reflects how effective teams separate who is joining, what they do first, and how value exits the system. Each layer answers a different question, and none of them is sufficient on its own.

Three scores, three decisions

| Risk layer | What it measures | Decision it informs |
| --- | --- | --- |
| Onboarding risk score | Likelihood the account is automated or synthetic at creation | How much friction or access the account receives immediately |
| First-transaction risk score | Probability the initial activity is abusive or probing | Whether activity is allowed, limited, or delayed |
| Payout risk score | Risk that value is being extracted or laundered | Whether funds can exit, and how fast |

This separation is subtle but important. Many merchants collapse these into a single fraud score and then wonder why losses still leak through. The attacker is adapting at each stage; the controls must do the same.

Onboarding risk score: intent without history

At onboarding, there is no transaction history to lean on. The score is therefore predictive rather than evidentiary. It estimates how likely an account is to be fake based on signals that exist before value is created.

This score should influence access, not outcomes. High risk does not automatically mean rejection. It means tighter limits, delayed privileges, or additional verification before the account becomes economically useful.

Critically, this score should decay over time if behaviour proves legitimate. Permanent suspicion creates unnecessary friction for real users.
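To make the decay concrete, the sketch below assumes a hypothetical half-life model. Each stretch of clean behaviour halves the onboarding score, down to a small floor. The function name, the 14-day half-life, and the 0.05 floor are illustrative assumptions, not recommended values.

```python
def decayed_onboarding_risk(initial_risk: float, clean_days: int,
                            half_life_days: float = 14.0,
                            floor: float = 0.05) -> float:
    """Decay an onboarding risk score in [0, 1] as legitimate behaviour accrues.

    Hypothetical model: the score halves every `half_life_days` of clean
    activity but never falls below `floor` (or the initial score, if that is
    already lower), so some baseline caution always remains.
    """
    if not 0.0 <= initial_risk <= 1.0:
        raise ValueError("initial_risk must be in [0, 1]")
    if clean_days < 0:
        raise ValueError("clean_days cannot be negative")
    decayed = initial_risk * 0.5 ** (clean_days / half_life_days)
    return max(decayed, min(floor, initial_risk))


# An account scored 0.8 at sign-up that then behaves cleanly for four weeks
# (two half-lives) drops to 0.2.
print(decayed_onboarding_risk(0.8, clean_days=28))
```

The floor is a design choice. It keeps a residual level of caution, so a long-dormant account that suddenly changes behaviour is not treated as fully trusted.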

First-transaction risk score: testing behaviour

The first meaningful action taken by an account is rarely about revenue. It is about discovery.

Attackers use early transactions to probe controls, pricing, and response logic. Small amounts, unusual combinations, and rapid retries are not random; they are experiments. The first-transaction risk score is designed to recognise that pattern.

This is where many losses can be prevented cheaply. A delayed fulfilment, a temporary cap, or a silent review at this stage often costs far less than a chargeback or payout investigation later.
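Here is a minimal sketch of how a first-transaction score might drive the allow, limit, or delay decision. It assumes a few hypothetical probing features: micro-amounts, retries, multiple cards, and transacting within minutes of sign-up. The weights and cut-offs are placeholders that a real team would fit to its own data.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    LIMIT = "limit"   # allow, but under a temporary cap
    DELAY = "delay"   # hold fulfilment for a silent review


@dataclass
class FirstTransaction:
    amount: float                # in the merchant's currency
    retries: int                 # failed attempts before this one
    distinct_cards_tried: int
    seconds_since_signup: float


def first_transaction_risk(tx: FirstTransaction) -> float:
    """Toy probing score in [0, 1]; the weights are illustrative only."""
    score = 0.0
    if tx.amount < 2.0:                 # micro-amounts often test a card
        score += 0.3
    score += min(tx.retries, 3) * 0.15  # rapid retries look like experiments
    if tx.distinct_cards_tried > 1:
        score += 0.2
    if tx.seconds_since_signup < 120:   # transacting within two minutes of sign-up
        score += 0.2
    return min(score, 1.0)


def decide(tx: FirstTransaction) -> Action:
    risk = first_transaction_risk(tx)
    if risk >= 0.6:
        return Action.DELAY
    if risk >= 0.3:
        return Action.LIMIT
    return Action.ALLOW


probe = FirstTransaction(amount=1.0, retries=3, distinct_cards_tried=3,
                         seconds_since_signup=30)
print(decide(probe))  # Action.DELAY
```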

Payout risk score: when money leaves

Payouts change everything. Once value exits the platform, recovery becomes expensive and sometimes impossible. The payout risk score therefore needs to be the strictest layer, even for accounts that looked benign earlier.

Importantly, this score should incorporate lineage. An account that inherits risk from connected users, devices, or payment methods should not be treated as new simply because it behaved well initially.
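The sketch below shows one way lineage could feed into payout decisions. The account's own risk is compared with the strongest risk inherited from connected entities, and the result sets a hold period. The 0.8 inheritance weight and the hold tiers are hypothetical.

```python
from typing import Optional, Sequence


def payout_risk(own_risk: float, linked_risks: Sequence[float],
                inheritance_weight: float = 0.8) -> float:
    """Combine an account's own payout risk with risk inherited through lineage.

    `linked_risks` holds the risk of connected users, devices, or payment
    methods; the strongest link dominates, discounted by `inheritance_weight`.
    """
    inherited = max(linked_risks, default=0.0) * inheritance_weight
    return max(own_risk, inherited)


def payout_hold_hours(risk: float) -> Optional[int]:
    """Map payout risk to a hold period; None means the payout is blocked."""
    if risk >= 0.8:
        return None
    if risk >= 0.5:
        return 72
    if risk >= 0.2:
        return 24
    return 0


# A quiet account that shares a payout destination with a confirmed abuser:
risk = payout_risk(own_risk=0.1, linked_risks=[0.9])
print(risk >= 0.5, payout_hold_hours(risk))  # True 72
```

Taking the maximum rather than an average is deliberate here. A single strong link to confirmed abuse should not be diluted by many clean ones.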

Why this framework works

What makes this model effective is not sophistication; it is timing. Each score is applied when its signals are strongest and its decision is cheapest to reverse. Together, they shift fraud control earlier in the lifecycle, where fake accounts are still unprofitable.

Merchants who implement this framework stop asking whether an account is “fraud” or “not fraud.” Instead, they ask a more useful question at each stage: how much trust has this account earned so far?

Controls: device fingerprinting, behavioural biometrics, velocity and identity lineage

Controls fail when they are treated as standalone defences. In fraud-funded onboarding, no single signal is decisive. What matters is how signals compound over time and how quickly they narrow an attacker’s room to operate.

This section is intentionally practical. It is not a catalogue of tools but an explanation of how control layers work together when fake accounts are created to monetise fast.

Device fingerprinting: reducing repeat attempts, not proving identity

Device fingerprinting is often misunderstood as an identification mechanism. In reality, its value lies elsewhere.

In automated onboarding attacks, the same underlying infrastructure is reused aggressively. Even when bots rotate IPs, credentials, and behavioural patterns, they struggle to fully escape device-level continuity. Fingerprinting creates memory where attackers want amnesia.

Used correctly, it does not block users outright. It quietly:

- Increases friction on repeat attempts

- Degrades success rates over time

- Forces attackers to spend more per account

That cost pressure is critical. Fraud-funded onboarding collapses when the economics stop working.
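The sketch below illustrates fingerprinting used for memory rather than identity. It hashes a handful of device attributes and escalates friction as sign-ups repeat from the same fingerprint. The attribute set and tier thresholds are assumptions. Production fingerprinting draws on far more signals and has to tolerate drift, such as browser updates or OS changes, that a plain hash would not.

```python
import hashlib
from collections import Counter


def fingerprint(attrs: dict[str, str]) -> str:
    """Hash a canonical ordering of device attributes (illustrative only)."""
    canonical = "|".join(f"{key}={attrs[key]}" for key in sorted(attrs))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class RepeatFriction:
    """Escalate friction as more sign-ups arrive from the same fingerprint."""

    def __init__(self, step_up_after: int = 1, cap_after: int = 3) -> None:
        self.step_up_after = step_up_after
        self.cap_after = cap_after
        self.signups = Counter()  # fingerprint -> sign-ups seen so far

    def friction_for(self, attrs: dict[str, str]) -> str:
        fp = fingerprint(attrs)
        self.signups[fp] += 1
        seen = self.signups[fp]
        if seen <= self.step_up_after:
            return "none"
        if seen <= self.cap_after:
            return "step_up"       # e.g. extra verification before payment attach
        return "tight_limits"      # still accepted, but exposure is capped hard
```

No tier blocks outright. Each repeat simply makes the next account more expensive to push through.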

Behavioural biometrics: intent reveals itself in motion

Device signals anchor where activity comes from; behavioural signals explain how it happens.

Bots are optimised to pass static checks. They are far less consistent under dynamic observation. Signals such as mouse movement, touch pressure, scroll cadence, hesitation, and correction patterns are not about catching perfection but about spotting optimisation.

Human behaviour is inefficient. Bots aim to be just efficient enough.

Behavioural biometrics are most effective early in the journey, before accounts have accumulated history. They provide probabilistic insight into intent at the moment it matters most: before value is created.
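One simple behavioural feature is typing rhythm. The sketch below computes the coefficient of variation of inter-keystroke intervals and treats a very uniform rhythm as a weak automation signal. The 0.15 cut-off is a hypothetical value. Because modern bots simulate hesitation, a feature like this only makes sense combined with others, never as a verdict on its own.

```python
import statistics


def keystroke_regularity(timestamps_ms: list[float]) -> float:
    """Coefficient of variation (stdev / mean) of inter-key intervals."""
    if len(timestamps_ms) < 3:
        raise ValueError("need at least three keystrokes")
    intervals = [b - a for a, b in zip(timestamps_ms, timestamps_ms[1:])]
    if any(gap <= 0 for gap in intervals):
        raise ValueError("timestamps must be strictly increasing")
    return statistics.pstdev(intervals) / statistics.mean(intervals)


def looks_scripted(timestamps_ms: list[float], min_cv: float = 0.15) -> bool:
    """Weak signal: an unusually uniform typing rhythm suggests automation."""
    return keystroke_regularity(timestamps_ms) < min_cv


print(looks_scripted([0, 100, 200, 300, 400]))        # True: metronomic input
print(looks_scripted([0, 180, 260, 610, 700, 1150]))  # False: human-like irregularity
```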

Velocity controls: attackers move faster than real users

Velocity remains one of the most underrated controls in onboarding fraud, largely because it looks unsophisticated.

Bots compress time. They create, verify, attach payment methods, and transact at speeds that real users rarely match. Even when individual actions appear normal, the sequence does not.

Velocity checks should not be binary. The objective is not to stop fast users, but to recognise when too many correct actions happen too quickly.

This is especially powerful when applied across connected entities rather than single accounts.
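The sketch below applies velocity to connected entities rather than single accounts. A fast journey from creation to first transaction is tolerated on its own. Too many fast journeys from the same entity inside a sliding window trip the check. The class name, entity keys, and all thresholds are hypothetical.

```python
from collections import defaultdict, deque


class EntityVelocity:
    """Count fast create-to-transact funnels per connected entity.

    An entity is any shared key (device fingerprint, IP range, payout
    destination). Window sizes and limits are illustrative assumptions, and
    events are assumed to arrive in roughly chronological order.
    """

    def __init__(self, window_s: float = 3600.0, max_fast_funnels: int = 3,
                 fast_funnel_s: float = 300.0) -> None:
        self.window_s = window_s                  # look-back window per entity
        self.max_fast_funnels = max_fast_funnels  # fast funnels tolerated per window
        self.fast_funnel_s = fast_funnel_s        # max sign-up-to-first-transaction time counted as fast
        self._fast = defaultdict(deque)           # entity -> timestamps of fast funnels

    def record_funnel(self, entity: str, created_at: float,
                      first_tx_at: float) -> bool:
        """Record one account's funnel; return True if the entity is over its limit."""
        events = self._fast[entity]
        if first_tx_at - created_at <= self.fast_funnel_s:
            events.append(first_tx_at)
        # Drop fast funnels that have aged out of the window.
        while events and events[0] <= first_tx_at - self.window_s:
            events.popleft()
        return len(events) > self.max_fast_funnels
```

A single fast user never trips this check. Only a repeated pattern from one entity does, which matches the goal of spotting too many correct actions rather than fast ones.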

Identity lineage: risk does not reset at account creation

Identity lineage is what stops fraud from being treated as a series of unrelated events.

Fake accounts are rarely isolated. They share devices, networks, credentials, payment methods, behavioural patterns, or payout destinations. Lineage analysis connects these fragments into something more actionable: inherited risk.

This is where many merchants fall short. They evaluate each account as if it were new, even when it is clearly downstream of known abuse. Lineage allows controls to escalate without waiting for fresh losses.
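Inherited risk can be modelled as a walk over a graph that links accounts through shared attributes. The minimal sketch below assumes a hypothetical `LineageGraph`. Risk from a confirmed abusive account passes to its neighbours and is halved at each hop, up to two hops away.

```python
from collections import defaultdict, deque


class LineageGraph:
    """Bipartite graph: accounts <-> shared attributes (device, card, payout...)."""

    def __init__(self) -> None:
        self.attrs_of = defaultdict(set)     # account -> attribute keys
        self.accounts_of = defaultdict(set)  # attribute key -> accounts
        self.known_risk = {}                 # account -> confirmed abuse risk in [0, 1]

    def link(self, account: str, attribute: str) -> None:
        self.attrs_of[account].add(attribute)
        self.accounts_of[attribute].add(account)

    def inherited_risk(self, account: str, hop_decay: float = 0.5,
                       max_hops: int = 2) -> float:
        """Max known risk reachable through shared attributes, decayed per hop.

        One hop = account -> shared attribute -> other account. Breadth-first
        search reaches each account at its minimum hop count.
        """
        best = 0.0
        seen = {account}
        frontier = deque([(account, 0)])
        while frontier:
            current, hops = frontier.popleft()
            if hops == max_hops:
                continue
            for attr in self.attrs_of[current]:
                for other in self.accounts_of[attr]:
                    if other in seen:
                        continue
                    seen.add(other)
                    risk = self.known_risk.get(other, 0.0) * hop_decay ** (hops + 1)
                    best = max(best, risk)
                    frontier.append((other, hops + 1))
        return best
```

A brand-new account that reuses a card already tied to a device seen in abuse starts with non-zero risk, even before it has done anything itself.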

Why layering matters more than precision

None of these controls are perfect. That is not the point. Each layer introduces friction, uncertainty, and cost for the attacker. Together, they shorten the window in which a fake account can become profitable. Precision improves over time. Loss prevention improves immediately.

The goal is not to catch every bot. It is to make automated onboarding unviable at scale.

Progressive limits: caps that relax only after trust is earned

Progressive limits work because they change the economics of fraud without announcing themselves. Instead of trying to identify bad actors perfectly at the point of entry, they assume uncertainty and price that uncertainty in.

This is a structural shift. Access is no longer binary. It is conditional, incremental, and earned.

Why early freedom is the real vulnerability

Fraud-funded onboarding thrives on immediacy. Fake accounts are designed to extract value before scrutiny becomes worthwhile. When new users receive full functionality on day one, attackers do not need to evade controls for long. They only need to be fast.

Progressive limits invert that advantage. They accept new users quickly, but they do not trust them quickly.

At the start of an account’s life, limits are deliberately tight. Spending caps are low. Promotions are constrained. Payouts are delayed or throttled. None of this blocks legitimate users outright, but it sharply reduces how much a fake account can monetise in its most active window.

Trust is demonstrated, not declared

The most effective progressive limit systems do not rely on static milestones like “account age” alone. Time matters, but behaviour matters more.

Trust is accumulated through signals that are difficult to fake cheaply:

- Consistent usage patterns

- Stable devices and environments

- Successful payments without retries

- Absence of connected abuse signals

As these signals accrue, limits relax gradually. Access expands because risk has demonstrably fallen, not because the account has survived long enough.
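Here is a minimal sketch of trust-based relaxation. It assumes hypothetical point values for the four signals listed above: each point doubles a spending cap, and any connected abuse freezes the account at the base cap. `active_days` counts days of genuine usage, not account age, in line with behaviour mattering more than time. Currency units and caps are illustrative.

```python
from dataclasses import dataclass


@dataclass
class TrustSignals:
    active_days: int          # days with consistent, genuine usage (not mere account age)
    stable_device: bool       # same device and environment seen repeatedly
    clean_payments: int       # successful payments that needed no retries
    connected_abuse: bool     # any lineage link to known abuse


def spending_cap(signals: TrustSignals, base_cap: float = 50.0,
                 max_cap: float = 2000.0) -> float:
    """Double the cap for each trust point earned, up to `max_cap`."""
    if signals.connected_abuse:
        return base_cap                              # linked risk freezes progression
    points = min(signals.active_days // 7, 4)        # up to 4 points for sustained use
    points += 1 if signals.stable_device else 0
    points += min(signals.clean_payments // 3, 3)    # up to 3 points for clean payments
    return min(base_cap * 2 ** points, max_cap)


print(spending_cap(TrustSignals(0, False, 0, False)))  # 50.0 on day one
print(spending_cap(TrustSignals(14, True, 4, False)))  # 800.0 after two steady weeks
```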

Limits that attackers cannot easily map

A common mistake is making progressive limits too transparent. When thresholds are obvious, bots simply optimise around them.

Effective systems introduce variability. Limits may relax at slightly different rates. Some privileges may unlock before others. Certain actions may remain capped until multiple conditions are met.

From the attacker’s perspective, this creates uncertainty. They cannot reliably predict when an account becomes valuable, which makes scaling automation harder and more expensive.
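One way to add variability without making limits feel random to real users is a keyed hash of the account ID. In the sketch below, `secret` is a hypothetical server-side key. Each account gets a stable threshold somewhere within ±25% of the base, which an attacker cannot predict without the key.

```python
import hashlib
import hmac


def jittered_threshold(account_id: str, base: float, secret: bytes,
                       spread: float = 0.25) -> float:
    """Return a per-account threshold in [base*(1-spread), base*(1+spread)).

    A keyed hash keeps the value stable for a given account (so real users do
    not see limits flap) while making it unpredictable without `secret`.
    """
    digest = hmac.new(secret, account_id.encode("utf-8"), hashlib.sha256).digest()
    fraction = int.from_bytes(digest[:6], "big") / 2**48   # exact float in [0, 1)
    return base * (1 - spread + 2 * spread * fraction)
```

Because the threshold is derived rather than stored, it survives restarts and is consistent across services that share the key.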

Why legitimate users tolerate this model

There is often concern that progressive limits will harm conversion. In practice, the opposite is usually true.

Real users rarely need full functionality immediately. They explore, test, and build confidence over time. Progressive limits align with that natural behaviour. When designed well, they feel like sensible guardrails rather than restrictions.

Crucially, this approach also reduces false positives. Instead of blocking uncertain users, merchants observe them safely.

Progressive limits as a fraud control, not a policy

The most important mindset shift is this: progressive limits are not a customer policy. They are a fraud control.

They exist to ensure that trust is proportional to exposure. When exposure grows faster than trust, losses follow. When exposure is gated by earned trust, fraud-funded onboarding loses its speed advantage.

Operational playbook: automated clustering, case routing, and suppression rules

Once fraud-funded onboarding reaches scale, manual review becomes a bottleneck rather than a defence. The accounts are cheap, the behaviour is fast, and the losses are deliberately fragmented. The only way to keep pace is to stop treating fake accounts as individual cases and start treating them as systems. This is where operations matter more than tools.

Automated clustering: see campaigns, not accounts

Clustering is the shift from account-level analysis to pattern-level control.

Instead of asking whether a single user is fraudulent, effective teams ask whether a group of users behaves as if it were created by the same process. Shared devices, similar behavioural signatures, reused payment methods, repeated promo paths, and common payout destinations all point to coordination.

Automated clustering allows merchants to:

- Identify campaigns early

- Act on weak signals before losses concentrate

- Suppress entire clusters instead of chasing accounts

The value here is speed. Clusters emerge long before any single account generates enough loss to justify investigation.
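Campaign clustering can be sketched with a union-find structure. Any two accounts that share an attribute end up in the same group, and groups above a minimum size are surfaced as candidate campaigns. The attribute keys (`dev:`, `card:`, `payout:`) are hypothetical. A real system would also need to exclude or down-weight attributes that are legitimately shared, such as public Wi-Fi or carrier NAT addresses.

```python
from collections import defaultdict


class UnionFind:
    def __init__(self) -> None:
        self.parent = {}

    def find(self, x: str) -> str:
        self.parent.setdefault(x, x)
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a: str, b: str) -> None:
        ra, rb = self.find(a), self.find(b)
        if ra != rb:
            self.parent[rb] = ra


def cluster_accounts(account_attrs: dict[str, set[str]],
                     min_size: int = 3) -> list[set[str]]:
    """Group accounts connected by any shared attribute; keep groups >= min_size."""
    uf = UnionFind()
    first_seen = {}  # attribute -> first account observed using it
    for account, attrs in account_attrs.items():
        uf.find(account)
        for attr in attrs:
            if attr in first_seen:
                uf.union(first_seen[attr], account)
            else:
                first_seen[attr] = account
    groups = defaultdict(set)
    for account in account_attrs:
        groups[uf.find(account)].add(account)
    return [group for group in groups.values() if len(group) >= min_size]
```

Note that `a1` and `a4` in a chain like `a1 -dev- a2 -card- a3 -payout- a4` share nothing directly, yet land in the same cluster. That transitive linking is what lets weak signals add up before any single account shows a loss.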

Case routing: human review only where it adds value

Not every alert deserves a human. One of the most expensive mistakes merchants make is routing all uncertainty to manual review. In fraud-funded onboarding, that approach collapses quickly. The volume is too high, and the accounts are too disposable.

Effective routing logic distinguishes between:

- Cases that require judgement

- Cases that require confirmation

- Cases that require suppression

Human analysts should be reserved for ambiguous edge cases: those where signals conflict or where legitimate users may be caught in broader controls. Everything else should resolve automatically, even if the outcome is conservative. This is how teams protect analyst time while still acting decisively.
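The three-way split can be expressed as a small routing function. The inputs are all hypothetical: a risk score, a flag for conflicting signals, cluster membership, and whether the account has an established legitimate history. The cut-offs are hypothetical too. The point is that only conflicting or collateral-damage cases reach a human.

```python
from enum import Enum


class Route(Enum):
    AUTO_ALLOW = "auto_allow"
    CONFIRM = "confirm"            # automated confirmation, e.g. a step-up check
    SUPPRESS = "suppress"          # resolved automatically, conservatively
    HUMAN_REVIEW = "human_review"  # reserved for genuine ambiguity


def route_case(risk: float, signals_conflict: bool,
               in_suppressed_cluster: bool, established_legit_history: bool) -> Route:
    """Send a case to judgement, confirmation, or suppression (hypothetical rules)."""
    if in_suppressed_cluster:
        # A long-standing legitimate user caught by a cluster rule needs judgement;
        # everyone else in the cluster is suppressed without review.
        return Route.HUMAN_REVIEW if established_legit_history else Route.SUPPRESS
    if signals_conflict:
        return Route.HUMAN_REVIEW
    if risk >= 0.8:
        return Route.SUPPRESS
    if risk >= 0.4:
        return Route.CONFIRM
    return Route.AUTO_ALLOW
```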

Suppression rules: stop repeat abuse without investigation

Suppression rules are not about attribution. They are about prevention. When a device, payment method, behavioural pattern, or payout destination has been linked to abuse, it should not be allowed to participate freely again. Suppression rules quietly enforce that memory.

These rules tend to be blunt by design:

- Known bad infrastructure is throttled or blocked

- Reused assets inherit risk immediately

- Previously suppressed patterns trigger limits by default

The goal is not to prove intent. It is to deny scalability.
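A suppression list needs very little logic to enforce that memory. The minimal sketch below assumes hypothetical asset keys and three actions (`limit`, `throttle`, `block`). A new account or event receives the strictest action attached to any asset it reuses, and entries are never downgraded automatically.

```python
SEVERITY = {"allow": 0, "limit": 1, "throttle": 2, "block": 3}


class SuppressionList:
    """Remember assets linked to abuse and apply the strictest matching action."""

    def __init__(self) -> None:
        self._actions = {}  # asset key (e.g. "device:<hash>") -> action

    def suppress(self, asset: str, action: str = "limit") -> None:
        if action not in SEVERITY or action == "allow":
            raise ValueError(f"unsupported action: {action!r}")
        current = self._actions.get(asset, "allow")
        if SEVERITY[action] > SEVERITY[current]:
            self._actions[asset] = action  # only ever escalate, never downgrade

    def evaluate(self, assets: list[str]) -> str:
        """Strictest action triggered by any asset on a new account or event."""
        return max((self._actions.get(asset, "allow") for asset in assets),
                   key=SEVERITY.__getitem__, default="allow")
```

Defaulting `suppress` to `limit` mirrors the rule that previously suppressed patterns trigger limits by default. Harder actions have to be chosen explicitly.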

Why this playbook works under pressure

What ties clustering, routing, and suppression together is a shared assumption: speed beats certainty.

Fraud-funded onboarding exploits hesitation. It thrives when teams wait for confirmation, consensus, or perfect evidence. This playbook accepts that some decisions will be made with incomplete information and designs controls that make those decisions reversible.

Accounts can be reinstated. Limits can be relaxed. Promotions can be reissued. Lost time, however, cannot be recovered.

The operational shift that matters most

The real transition is cultural. Teams that succeed stop asking “Is this account fake?”

They start asking “Is this pattern safe to allow again?”

That shift is what turns onboarding from a liability back into a controlled gateway.

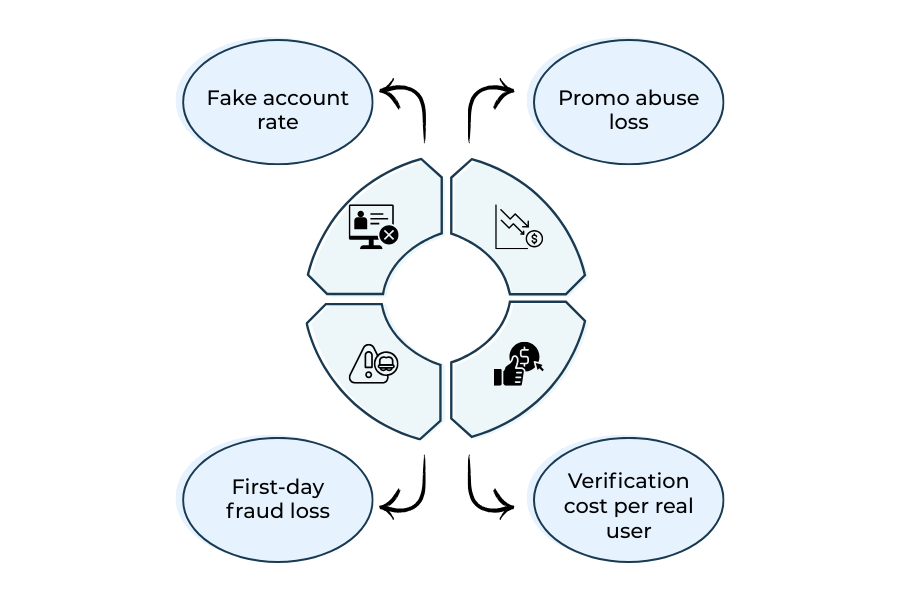

KPIs: measuring whether onboarding is becoming safer or just quieter

Most teams track fraud KPIs as lagging indicators. Loss appears, a number moves, a fix is deployed. In fraud-funded onboarding, that sequence is too slow. By the time a metric confirms a problem, the attackers have already iterated.

What works better is to treat KPIs as behavioural sensors rather than scorecards. The goal is not to prove fraud exists. It is to detect whether fake accounts are becoming economically unviable.

Fake account rate is a health signal, not a target

Fake account rate is often treated as a vanity metric: lower is better, zero is ideal. In reality, a falling fake account rate can mean two very different things.

It may indicate that controls are working. Or it may indicate that detection thresholds are too high and abuse is slipping through quietly.

The useful insight comes from movement, not absolute values. Sudden drops or spikes usually signal a shift in attacker behaviour or a blind spot introduced by a control change. Teams that watch this metric in isolation miss that context.
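Because the signal is in movement rather than level, one simple approach is to compare each day's fake account rate with a trailing window. The sketch below assumes a hypothetical 14-day window and a z-score threshold of 3. A flag only means something changed. Someone still has to work out whether controls improved or a blind spot opened.

```python
import statistics


def rate_shifts(daily_rates: list[float], window: int = 14,
                z_threshold: float = 3.0) -> list[int]:
    """Indices of days whose fake account rate departs sharply from the trailing window."""
    flagged = []
    for i in range(window, len(daily_rates)):
        history = daily_rates[i - window:i]
        mean = statistics.mean(history)
        sd = statistics.pstdev(history)
        if sd == 0:
            # A perfectly flat history: any change at all is a shift.
            if daily_rates[i] != mean:
                flagged.append(i)
            continue
        if abs(daily_rates[i] - mean) / sd >= z_threshold:
            flagged.append(i)
    return flagged
```

Flagging drops as well as spikes is intentional. A sudden fall can be the first sign that a control change has stopped detecting abuse, not that abuse has stopped.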

Promo abuse loss reveals where controls are weakest

Promotional loss is one of the earliest indicators that onboarding is being exploited. Unlike chargebacks or disputes, it surfaces before payment systems push back.

When promo abuse loss rises while payment fraud remains flat, it is often a sign that attackers are still probing, learning where limits sit before escalating. When it falls sharply without a corresponding drop in sign-ups, it can indicate that fake accounts are being filtered earlier.

This metric is less about the absolute amount lost and more about how cheaply attackers can test the system.

First-day fraud loss tells you who controls time

First-day fraud loss compresses several risk questions into one number.

It answers:

- How quickly can a new account monetise?

- How much exposure exists before trust is earned?

- How effective are early-stage controls?

If a meaningful portion of fraud loss occurs on day one, it is rarely a transaction problem. It is an onboarding design problem. Progressive limits, delayed fulfilment, and early friction should push this number down even if total fraud volume remains unchanged.
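Measuring this requires only the account creation time alongside each loss record. The sketch below assumes "first day" means the first 24 hours after account creation and uses a hypothetical `FraudLoss` record type.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class FraudLoss:
    account_created: datetime   # when the (fake) account was opened
    loss_at: datetime           # when the loss was realised
    amount: float               # loss in the merchant's currency


def first_day_loss_share(losses: list[FraudLoss],
                         first_day: timedelta = timedelta(hours=24)) -> float:
    """Fraction of total fraud loss incurred within `first_day` of account creation."""
    total = sum(loss.amount for loss in losses)
    if total == 0:
        return 0.0
    early = sum(loss.amount for loss in losses
                if loss.loss_at - loss.account_created < first_day)
    return early / total
```

Tracking the share rather than the absolute amount lets this number fall as early controls take effect, even when total fraud volume stays flat.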

Verification cost per real user keeps teams honest

This is the KPI most often ignored and the one that prevents overcorrection.

As controls tighten, verification costs inevitably rise. The question is whether that cost is being spent on real users or fake ones. Tracking verification cost per retained or revenue-generating user forces teams to confront trade-offs explicitly.

If verification costs rise while the fake account rate stays flat, friction is being applied in the wrong places. If costs rise and fake accounts become unprofitable, the system is working as intended.
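The two cases above can be read off a comparison of two periods. In this sketch, the `Period` fields and the 5% tolerance are assumptions. A falling fake account rate serves as a rough stand-in for fake accounts becoming unprofitable, which in practice would need loss data to confirm.

```python
from dataclasses import dataclass


@dataclass
class Period:
    verification_spend: float   # SMS, document, and data-check costs for the period
    retained_real_users: int    # users still active or generating revenue
    fake_account_rate: float    # confirmed fake sign-ups / all sign-ups


def cost_per_real_user(period: Period) -> float:
    if period.retained_real_users <= 0:
        raise ValueError("need at least one retained real user")
    return period.verification_spend / period.retained_real_users


def read_tradeoff(before: Period, after: Period, tolerance: float = 0.05) -> str:
    """Classify a change in verification cost against the fake account rate."""
    cost_up = cost_per_real_user(after) > cost_per_real_user(before) * (1 + tolerance)
    fakes_down = after.fake_account_rate < before.fake_account_rate * (1 - tolerance)
    if cost_up and not fakes_down:
        return "friction misplaced"
    if cost_up:
        return "likely working"
    return "no material cost increase"
```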

The KPI pattern that matters

Individually, these metrics can mislead. Together, they tell a more useful story.

When fake accounts become expensive to create, slow to monetise, and costly to scale, attackers move on, even if some abuse still exists. That is the real objective.

Effective teams do not aim for zero fraud. They aim for unattractive fraud.

Conclusion: stop fraud before it becomes a payment event

Fraud-funded onboarding succeeds because it exploits a structural gap. Most defences are designed to protect transactions, not identities. By the time money moves, the attacker has already won the most important battle: turning a fake user into a trusted one, even briefly.

What this analysis shows is that the problem is not a lack of tools. It is a timing problem. Controls applied after the first transaction are expensive and reactive. Controls applied during onboarding are cheaper, quieter, and far more effective if they are designed to manage uncertainty rather than eliminate it.

The merchants that contain this threat do not rely on single checkpoints or perfect verification. They layer risk over time. They assume early-stage users are unproven. They cap exposure, observe behaviour, and allow trust to accumulate only where it is earned. In doing so, they remove the speed advantage that automation depends on.

This also reframes the role of payments. Payments are not the point of failure; they are the point of consequence. When fraud reaches the payment layer, losses become visible, disputes escalate, and regulatory exposure increases. The objective is not better payment fraud detection. It is fewer fraudulent accounts ever reaching a payment event.

By 2026, fake accounts that pay are no longer an anomaly. They are an operating model. Merchants that continue to treat onboarding as a growth-only function will keep funding it. Those that treat onboarding as a risk surface will starve it. The difference is not technological sophistication.

It is where trust is allowed to form.

FAQs

1. What is “fraud-funded onboarding”?

Fraud-funded onboarding refers to schemes where fake or automated accounts are created specifically to monetise onboarding flows. Instead of waiting for organic growth, attackers manufacture users, attach payment methods, exploit promotions or trials, and extract value before controls or reviews intervene. The onboarding funnel itself becomes the funding mechanism for fraud.

2. Why is bot-driven onboarding harder to stop than traditional fraud?

Traditional fraud often relies on stolen credentials or payment data interacting with real user accounts. Bot-driven onboarding flips this model. The account itself is fake from the start and designed to be disposable. This makes transaction-level controls less effective, because the fraud is engineered to complete before behavioural history or chargeback signals accumulate.

3. Are CAPTCHAs and basic verification still effective against bots?

They provide limited value on their own. Modern bots are optimised to bypass or outsource basic challenges, including CAPTCHAs, email verification, and SMS codes. These controls may slow down unsophisticated attacks, but they are insufficient against coordinated, automated onboarding campaigns unless layered with behavioural and lineage-based controls.

4. How quickly do fake accounts usually monetise?

In many cases, within hours. Automated accounts are built to attach payment methods, redeem incentives, or trigger payouts as quickly as possible. If an account cannot become profitable early in its lifecycle, it is usually abandoned in favour of another. Speed is central to the attacker’s economics.

5. Why doesn’t transaction monitoring catch this early enough?

Because the first transactions are often deliberately small, legitimate-looking, or promotional in nature. From a payments perspective, they may not cross any thresholds. The real risk sits in who is transacting, not what is being transacted. Without onboarding and early-stage risk controls, transaction monitoring reacts too late.

6. Do progressive limits harm genuine user conversion?

When designed properly, they usually do not. Most real users do not need full functionality immediately and are comfortable with sensible early limits. Progressive limits reduce exposure while allowing behaviour to establish trust organically. In many cases, they reduce false positives by avoiding premature blocking.

7. Is it realistic to aim for zero fake accounts?

No. At scale, some level of fake account creation is inevitable. The objective is not elimination, but economics. When fake accounts become expensive to create, slow to monetise, and difficult to scale, attackers move on. Effective controls make fraud unattractive rather than impossible.

8. Where should responsibility for onboarding fraud sit inside an organisation?

It should be shared, but clearly owned. Onboarding fraud sits at the intersection of growth, risk, and payments. Teams that isolate it within payments or fraud functions tend to respond too late. The most effective organisations treat onboarding as a controlled gateway, with shared metrics and aligned incentives across functions.